Successful web scrapers follow a set of practices that keep them working in the field. If you want to go far in web scraping, you need to follow these best practices too. Read on to learn about them.

As a newbie to web scraping, you may think your small script can handle a task at any scale. Sooner or later, you will discover not only that your script is just a proof of concept, but also how naïve you were.

You will discover that there is more to web scraping than you thought. You will need to deal with a good number of anti-scraping techniques to be able to scrape some websites, and you will learn that, like every other field, web scraping has its own best practices that you must adhere to in order to succeed.

In this article, you will learn the best practices for scraping a site. You will also learn about the common problems you will encounter when web scraping and how to solve them.

Common Pitfalls in Web Scraping

As a web scraper, you need to know that there are some pitfalls you will inevitably run into. Some of them happen often, while others occur less frequently. Regardless of how often they occur, you need to know about them. The common ones are discussed below.

Change in the HTML of a Page

I decided to start with this point because, in most cases, it has nothing to do with a website trying to prevent you from scraping. However, it is one of the most common reasons why web scraping scripts stop working. Most sites change their layout from time to time, and when this happens, the HTML changes with it.

When that happens, selectors written for the old HTML stop matching and your code breaks. You need a system that alerts you as soon as a change is detected on a page so that you can fix it. Some sites that use pagination even change the layout after a certain number of pages to break scrapers, so you have to take this into consideration too.
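One simple way to get such alerts is to check, on every page you fetch, that the elements your scraper depends on are still present. The sketch below uses only Python's standard-library `html.parser`. The `(tag, class)` pairs in `REQUIRED` are hypothetical placeholders for whatever your own selectors target. The warning is only logged, so connecting it to email or chat alerts is left to you.

```python
import logging
from html.parser import HTMLParser

logger = logging.getLogger("scraper")

# Hypothetical (tag, class) pairs this scraper relies on; replace with your own.
REQUIRED = [("div", "product-title"), ("span", "price")]


class _ClassCollector(HTMLParser):
    """Record every (tag, class) pair that appears in a page."""

    def __init__(self):
        super().__init__()
        self.seen = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "class" and value:
                for css_class in value.split():
                    self.seen.add((tag, css_class))


def missing_elements(html, required=REQUIRED):
    """Return the required (tag, class) pairs that are absent from `html`."""
    collector = _ClassCollector()
    collector.feed(html)
    return [pair for pair in required if pair not in collector.seen]


def check_layout(html, url):
    """Log a warning (your alert hook) when expected elements disappear."""
    missing = missing_elements(html)
    if missing:
        logger.warning("Layout change suspected on %s; missing: %s", url, missing)
    return not missing
```

Running `check_layout` on every page, not just the first, also catches sites that change the layout partway through pagination.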

Mistakenly Scraping the Wrong Data

Another common pitfall you are bound to experience as a web scraper is scraping the wrong data. This is usually not a problem when you are scraping only a few pages, because you can quickly go through the scraped data and tell if there is a problem with any of it.

However, when the volume of data is too large to review by hand, you need to think about the integrity and quality of the whole scraped dataset, because some records might not meet your quality guidelines. For this, you need to run each record through a set of validation checks before adding it to the database.
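As an illustration, the checks can be as simple as a function that lists what is wrong with each record, plus a splitter that keeps failing records aside for review instead of inserting them. This is a minimal sketch. The `name`, `price`, and `url` fields and their rules are hypothetical, so adapt them to whatever your scraper actually collects.

```python
import re

# Hypothetical rule: prices must be plain decimals such as "19" or "19.99".
PRICE_PATTERN = re.compile(r"^\d+(\.\d{1,2})?$")


def validate_record(record):
    """Return a list of problems found in one scraped record (empty list = valid)."""
    problems = []
    name = record.get("name")
    if not name or not name.strip():
        problems.append("name is missing or empty")
    price = record.get("price", "")
    if not PRICE_PATTERN.match(str(price)):
        problems.append(f"price {price!r} is not a plain decimal number")
    url = record.get("url", "")
    if not str(url).startswith(("http://", "https://")):
        problems.append(f"url {url!r} is not an absolute HTTP(S) URL")
    return problems


def split_valid(records):
    """Separate records that pass validation from those that need review."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected
```

Only the `valid` list would go to the database. Logging the `rejected` pairs tells you which rule failed, which often points straight at a broken selector.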

Anti-Scraping Techniques

Many websites do not want their data scraped; if they did, they would provide an API for it. Most complex websites have anti-bot systems in place to prevent web scrapers, crawlers, and other automation bots from accessing their content.

These systems rely on anti-scraping techniques such as IP tracking and banning, honeypot traps, CAPTCHAs, loading content via Ajax, browser fingerprinting, and many others. You will learn how to deal with several of these problems in the next section.

The Problem of Scraping at a Large Scale

If you are new to web scraping, you may think that scraping a site of 10,000 pages is the same as scraping a site of 2 million pages. However, the more data you have to scrape, the more care and planning you need, and the more time the job will take.

Designing your scraper to fetch pages concurrently and distributing the work among several computers or servers will usually make the whole process faster. Your database system also needs to be scalable, fast, secure, and reliable; otherwise, you risk wasting a lot of time querying it. Amazon Web Services (AWS) is one of the best choices on the market.
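The concurrency part of that advice can be sketched with a thread pool from Python's standard library. This is a minimal sketch, not a distributed system. `fetch` is any function you supply that downloads one URL (the `urllib_fetch` helper is only one possible choice), and failed URLs are collected instead of aborting the whole batch. Spreading the work across several machines would also need a shared job queue, which this sketch does not cover.

```python
import urllib.request
from concurrent.futures import ThreadPoolExecutor, as_completed


def urllib_fetch(url, timeout=10):
    """One possible fetch function: download a page with the standard library."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def scrape_concurrently(urls, fetch=urllib_fetch, max_workers=8):
    """Run fetch(url) for every URL on a thread pool.

    Returns (results, errors), both dicts keyed by URL, so one failing page
    does not stop the rest of the batch.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        future_to_url = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(future_to_url):
            url = future_to_url[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # record the failure and keep going
                errors[url] = exc
    return results, errors
```

Threads suit this job because scraping spends most of its time waiting on the network. Keep `max_workers` modest for any single site so that concurrency does not turn into hammering the server.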

Web Scraping Best Practices

As I stated earlier, every worthwhile activity has its own best practices, and web scraping is no exception. This part of the article describes them.

Respecting a Website’s Robots.txt File

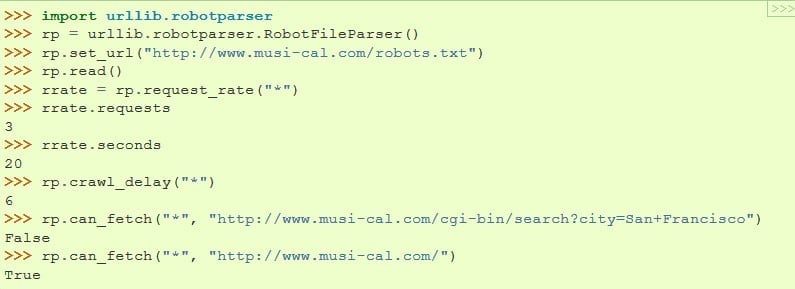

Most websites have a robots.txt file, which they use to tell automation bots such as crawlers and scrapers which pages they may and may not access. The file can also carry other directives, such as the delay between requests or the crawl rate. One thing I have discovered is that most web scrapers, apart from those run by search engines, do not respect robots.txt files at all; they ignore them completely. In fact, some web scraper developers consider robots.txt obsolete.

However, taking a website's robots.txt into account is among the best practices. Even if you choose not to follow its Disallow rules, which list the paths you shouldn't crawl, you can at least respect the Crawl-delay directive in order to be gentle on web servers. Most programming languages and scraping frameworks offer a way to parse robots.txt; Python programmers can use the standard library's urllib.robotparser module.
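Here is a minimal sketch with `urllib.robotparser`. It parses a robots.txt body from a string so that the example runs offline. The `robots_rules` helper, the `my-scraper` user agent, and the one-second fallback delay are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser


def robots_rules(robots_txt, user_agent, url, default_delay=1.0):
    """Return (allowed, delay_seconds) for `url` according to a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    allowed = parser.can_fetch(user_agent, url)
    delay = parser.crawl_delay(user_agent)  # None when no Crawl-delay applies
    return allowed, (delay if delay is not None else default_delay)


EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

allowed, delay = robots_rules(EXAMPLE_ROBOTS_TXT, "my-scraper",
                              "https://example.com/products/1")
```

For a live site, call `set_url("https://example.com/robots.txt")` and then `read()` on a `RobotFileParser` instead of `parse()`. After that, `can_fetch()` and `crawl_delay()` work the same way.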

Spoofing the User-Agent and Other HTTP Headers

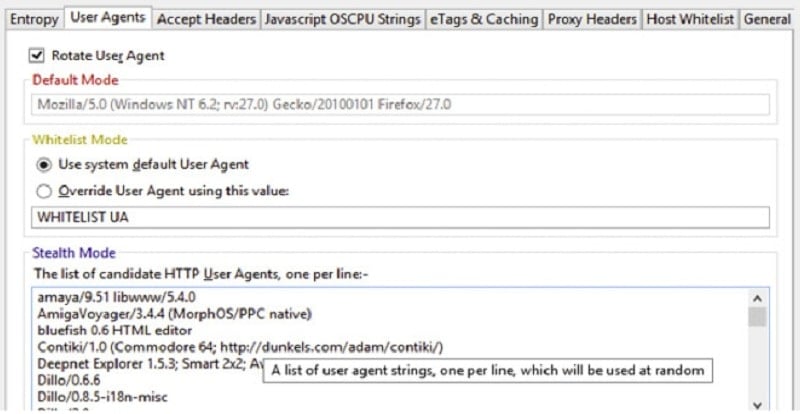

When a browser sends a request to a web server, it includes headers such as User-Agent, a string identifying the browser. Alongside the User-Agent, it sends headers such as Accept, Accept-Language, Accept-Encoding, and Referer, among others.

Web scrapers also have to send these headers; otherwise, some sites will deny them access. Some sites also automatically block known crawlers and scrapers, using their User-Agent to identify them.

If you do not want your bot identified as a web scraper, you need to spoof the User-Agent by replacing it with that of a popular web browser. It is even better if you can rotate User-Agents, but you have to make sure that the site does not serve different layouts to different User-Agents; otherwise, your code will break when it receives a layout you did not account for. When using the User-Agent string of a popular browser, make sure the other HTTP headers are consistent with it. Also provide a value for the Referer header so that your requests look more natural.
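One way to keep rotated headers consistent is to rotate whole header profiles instead of lone User-Agent strings. In the sketch below, the profiles are illustrative: the version numbers go stale quickly, so copy fresh values from your own browser's developer tools. `build_headers` is a hypothetical helper. Accept-Encoding is limited to `gzip, deflate` because those are the encodings most HTTP clients decode without extra packages.

```python
import random

# Illustrative profiles: each keeps a User-Agent together with Accept headers
# that browser family typically sends, so the header set stays consistent.
BROWSER_PROFILES = [
    {
        "User-Agent": ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
                       "AppleWebKit/537.36 (KHTML, like Gecko) "
                       "Chrome/120.0.0.0 Safari/537.36"),
        "Accept": ("text/html,application/xhtml+xml,application/xml;q=0.9,"
                   "image/avif,image/webp,*/*;q=0.8"),
        "Accept-Language": "en-US,en;q=0.9",
        "Accept-Encoding": "gzip, deflate",
    },
    {
        "User-Agent": ("Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:121.0) "
                       "Gecko/20100101 Firefox/121.0"),
        "Accept": ("text/html,application/xhtml+xml,application/xml;q=0.9,"
                   "image/avif,image/webp,*/*;q=0.8"),
        "Accept-Language": "en-US,en;q=0.5",
        "Accept-Encoding": "gzip, deflate",
    },
]


def build_headers(referer, rng=random):
    """Pick one browser profile at random and add a Referer header."""
    headers = dict(rng.choice(BROWSER_PROFILES))  # copy so the template is not mutated
    headers["Referer"] = referer
    return headers
```

With the requests library, you would pass the result as `requests.get(url, headers=build_headers("https://www.google.com/"), timeout=10)`.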

Dealing with Logins and Session Cookies

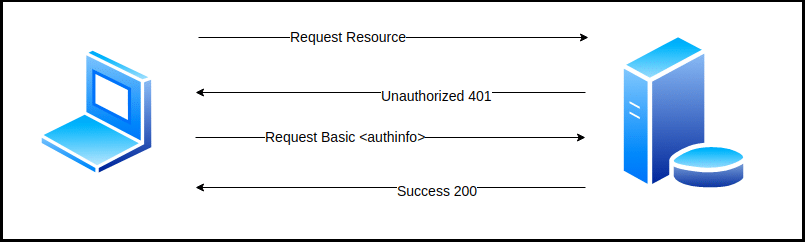

Some of the data you might be interested in is hidden behind a login page. When you are faced with this, you have to plan carefully, because once you are logged in, it becomes much easier for the website to monitor your activities.

But how do you log in and maintain a session cookie in the first place?

While this might seem difficult, it is not once you know what you are doing. You just have to create a session, then send a POST request to the login URL with your authentication details as the payload. If the login succeeds, the response will set the session cookies.

Once you have the session cookie, you attach it to each subsequent request, and you won't be asked to log in again, since sites use cookies to identify their users. To discover the login URL and the names of the form inputs to use in the payload, inspect the form in your browser by right-clicking it and choosing the Inspect option.

The value of the form's action attribute is the login URL. For the payload, read the name attributes of the username and password fields, along with any other fields the form contains.
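Here is a minimal sketch of that login flow using only Python's standard library. With the requests library, a `requests.Session` plays the same role as the cookie-aware opener shown here. The login URL and the `username`/`password` field names are hypothetical; use the action URL and input names you found by inspecting the form.

```python
import http.cookiejar
import urllib.parse
import urllib.request


def make_session():
    """Create an opener that stores cookies and sends them back on every request."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


def log_in(opener, login_url, payload, timeout=10):
    """POST the login form; session cookies from the response land in the jar."""
    body = urllib.parse.urlencode(payload).encode("utf-8")  # passing data makes it a POST
    with opener.open(login_url, data=body, timeout=timeout) as response:
        return response.status


# Hypothetical usage; the URL and field names come from inspecting the form:
# opener, jar = make_session()
# log_in(opener, "https://example.com/login", {"username": "me", "password": "secret"})
# page = opener.open("https://example.com/account", timeout=10).read()  # cookies sent automatically
```

Many sites return status 200 even when the password is wrong. After logging in, check the page for something only a logged-in user would see before you start scraping.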

Handling Hidden (But Required) Security Fields on POST Forms

The login method above may not work on sites that add hidden security fields to their forms to keep out hackers and spammers. If you send just the username and password without a value for the hidden field, the request will fail.

Besides login forms, many other POST forms also have security fields, such as a csrf_token, that are hidden from regular users and automatically populated with a server-generated token you can't reproduce yourself.

To get the value for this field, create a session, visit the page containing the form, and pull the value out of the hidden field. You can then send the request with that value included in the payload.
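Pulling the hidden values out of the form page can be done with the standard-library `html.parser`, as in this minimal sketch. The form page must be fetched with the same session (cookie jar) that later sends the POST, because the token is usually tied to that session's cookie. The `csrf_token` name and the credential field names in the usage line are hypothetical.

```python
from html.parser import HTMLParser


class HiddenInputParser(HTMLParser):
    """Collect name/value pairs of every <input type="hidden"> on a page."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attributes = dict(attrs)
        if (attributes.get("type") or "").lower() == "hidden" and attributes.get("name"):
            self.fields[attributes["name"]] = attributes.get("value") or ""


def hidden_fields(html):
    parser = HiddenInputParser()
    parser.feed(html)
    return parser.fields


def build_payload(form_html, credentials):
    """Merge the page's hidden fields (e.g. csrf_token) with your own form values."""
    payload = hidden_fields(form_html)
    payload.update(credentials)
    return payload


# Hypothetical usage: payload = build_payload(login_page_html, {"username": "me", "password": "secret"})
```

Copying every hidden input, not only the one you recognize, avoids failures when a form carries extra fields such as a redirect target.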

Slowing Down Your Requests to Avoid Overwhelming a Website

Web scraping involves sending a large number of requests to websites you do not own. This means you are adding to the cost of running the site without adding any value to it. If you cannot add value to a site you are scraping, at least be polite by setting a delay between requests so that you do not overwhelm the website's server. Some websites even state the preferred crawl delay for crawlers and scrapers in their robots.txt file.

Even when a site does not state a delay, it is good practice and good ethics to avoid hammering it with too many requests in a short time, so that you do not slow it down. It is also a good idea to scrape a site at night or early in the morning (in the time zone of most of its users), when fewer people are active, so that your activity does not make the site slow for other users.
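A delay between requests can be enforced with a small helper like the hypothetical `Throttle` class below, shown as a minimal sketch. It waits only for whatever part of the delay has not already passed, and it can add random jitter so that requests do not arrive at a perfectly regular interval. The delay could come from a site's Crawl-delay directive or from your own conservative default.

```python
import random
import time


class Throttle:
    """Enforce a minimum delay, plus optional random jitter, between requests."""

    def __init__(self, delay_seconds, jitter_seconds=0.0):
        self.delay = delay_seconds
        self.jitter = jitter_seconds
        self._last_request = None

    def wait(self):
        """Sleep until the delay since the previous request has passed."""
        if self._last_request is not None:
            target = self.delay + random.uniform(0, self.jitter)
            elapsed = time.monotonic() - self._last_request
            if elapsed < target:
                time.sleep(target - elapsed)
        self._last_request = time.monotonic()


# Hypothetical usage:
# throttle = Throttle(delay_seconds=5, jitter_seconds=2)
# for url in urls:
#     throttle.wait()
#     ...  # send the request for `url`
```

`time.monotonic()` is used instead of `time.time()` so that a system clock adjustment cannot shorten or stretch the delay.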

Distribute Your Requests Across Multiple IPs

The truth is, this point shouldn't even be listed as a best practice, because using proxies when scraping is a must. Most websites limit how many requests they allow from a single IP address within a given period of time. If an IP exceeds this limit, it will be blocked for some time.

So, if you plan to scrape at a reasonable scale, you need to use proxies. With proxies, you can distribute your requests across multiple IPs so that they appear to come from different devices.

Using a proxy pool is the best option for this, because the provider maintains many IPs and takes care of IP rotation and of removing bad IPs for you. As for the type of proxy, residential proxies are the best for this task, although datacenter proxies work quite well for some sites.
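A commercial proxy pool usually does the rotation for you behind a single endpoint. If you manage your own list of IPs instead, a round-robin rotator like the hypothetical `ProxyRotator` below is a minimal starting point. The proxy URLs are placeholders from the 203.0.113.0/24 documentation range, and the opener uses the standard library's `urllib.request.ProxyHandler`.

```python
import itertools
import urllib.request

# Hypothetical endpoints (203.0.113.0/24 is reserved for documentation);
# replace them with the proxy URLs your provider gives you.
PROXIES = [
    "http://user:pass@203.0.113.10:8000",
    "http://user:pass@203.0.113.11:8000",
    "http://user:pass@203.0.113.12:8000",
]


class ProxyRotator:
    """Hand out proxies round-robin, skipping any that have been marked bad."""

    def __init__(self, proxies):
        self._proxies = list(proxies)
        self._bad = set()
        self._cycle = itertools.cycle(self._proxies)

    def mark_bad(self, proxy):
        """Stop using a proxy, e.g. after it gets blocked or keeps timing out."""
        self._bad.add(proxy)

    def next_proxy(self):
        for _ in range(len(self._proxies)):
            proxy = next(self._cycle)
            if proxy not in self._bad:
                return proxy
        raise RuntimeError("every proxy in the pool is marked bad")


def opener_for(proxy):
    """Build a urllib opener that routes HTTP and HTTPS requests through `proxy`."""
    return urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy, "https": proxy}))
```

The requests library accepts the same scheme-to-proxy mapping: `requests.get(url, proxies={"http": p, "https": p}, timeout=10)`.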

Handling Missing HTML Tags

When it comes to web scraping, you need to know that the HTML of pages cannot be trusted to stay the same, because sites change it every now and then.

Because of this, it is important to always check that an element exists before trying to manipulate it or pull data from it. When you look up a missing HTML tag, some parsing libraries return None, while others throw an exception.

It is advisable to always use an if statement to check that a tag is present before working on it. If an element is missing, the web scraper should log it and notify you, so that you know something has changed on the page and can update your code.
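The check-then-log pattern is sketched below with the standard-library `html.parser`. With BeautifulSoup, the equivalent check is `if soup.find(id="price") is None:` before calling `.get_text()`. The element id and URL are hypothetical, and the small parser is a simplification: it only finds elements by id and assumes reasonably well-formed markup.

```python
import logging
from html.parser import HTMLParser

logger = logging.getLogger("scraper")

# Elements that never have a closing tag, so they must not change nesting depth.
VOID_ELEMENTS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
                 "link", "meta", "source", "track", "wbr"}


class _TextById(HTMLParser):
    """Collect the text inside the first element whose id equals target_id."""

    def __init__(self, target_id):
        super().__init__()
        self.target_id = target_id
        self.found = False
        self.depth = 0  # > 0 while the parser is inside the target element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_ELEMENTS:
            return
        if self.depth:
            self.depth += 1
        elif not self.found and dict(attrs).get("id") == self.target_id:
            self.found = True
            self.depth = 1

    def handle_endtag(self, tag):
        if tag not in VOID_ELEMENTS and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.chunks.append(data)


def extract_text(html, element_id, url):
    """Return the element's text, or None (and log it) if the element is missing."""
    parser = _TextById(element_id)
    parser.feed(html)
    if not parser.found:
        logger.warning("#%s not found on %s; the page may have changed", element_id, url)
        return None
    return "".join(parser.chunks).strip()
```

Returning `None` instead of raising lets the scraper skip the field and keep going, while the log entry tells you which page needs attention.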

Handling Network Errors

You are writing the code for your web scraper, and you are not thinking of network errors, right?

Well, it might interest you to know that network errors happen often, much more often than you think. They can be caused by a problem on your own end, on the web server you are sending requests to, or with your proxy provider.

The rule of thumb is never to trust the network to behave as you expect. Problems will arise, so you should write your code to anticipate network errors and handle them accordingly.

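Make sure every part of your code that sends web requests has exception handling attached, for example:

```python
import requests

try:
    response = requests.get("https://www.google.com", timeout=10)
except requests.exceptions.RequestException as exc:
    # Code for handling the exception goes here (retry, log the URL, move on).
    print(f"Request failed: {exc}")
```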

When handling the error, you can retry the request a few times. If it still fails, log the URL and the error so that you can deal with it manually, then move on to the next URL. Also, make sure you only start parsing data when the HTTP status code returned is 200.
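The retry-then-log approach can be sketched as follows, using exponential backoff between attempts. This is a minimal sketch: `fetch_with_retries` and `fetch_status_and_body` are hypothetical helpers, and the default fetcher uses only the standard library. Catching `OSError` covers urllib's `URLError`, socket timeouts, and requests' `RequestException`, because all of them subclass it.

```python
import logging
import time
import urllib.error
import urllib.request

logger = logging.getLogger("scraper")


def fetch_status_and_body(url, timeout=10):
    """Return (status_code, body) using only the standard library."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status, response.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:  # urllib raises on 4xx/5xx; report the code instead
        return err.code, ""


def fetch_with_retries(url, fetch=fetch_status_and_body, attempts=3, backoff_seconds=1.0):
    """Retry on network errors and non-200 responses with exponential backoff.

    Returns the body of a 200 response, or None after `attempts` failures,
    in which case the URL and the last error are logged for manual follow-up.
    """
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            status, body = fetch(url)
            if status == 200:
                return body  # only hand 200 responses on to the parser
            last_error = f"HTTP {status}"
        except OSError as exc:  # URLError, socket timeouts and RequestException all subclass OSError
            last_error = repr(exc)
        if attempt < attempts:
            time.sleep(backoff_seconds * 2 ** (attempt - 1))  # waits 1x, 2x, 4x, ... the base delay
    logger.error("Giving up on %s after %d attempts: %s", url, attempts, last_error)
    return None
```

A `None` result is the signal to move on to the next URL; the logged line gives you what you need to retry that page manually later.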

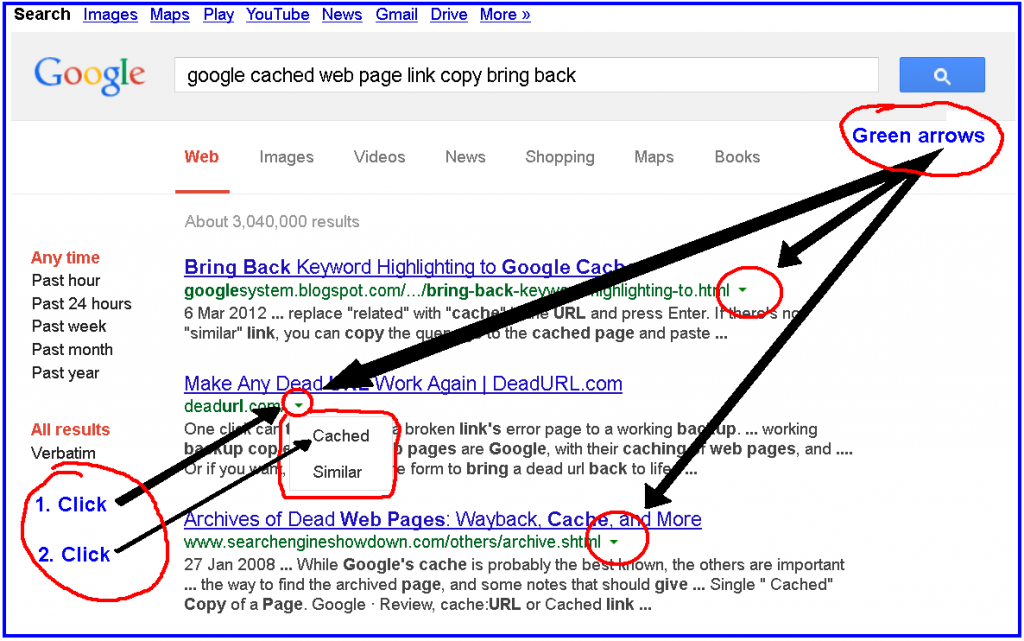

Scrape Google Cache for Non-Time Sensitive Data

Is the data you are trying to scrape not time-sensitive? Then you might as well leave the website alone and scrape the copies stored in Google Cache instead. It might interest you to know that most of the pages you are trying to scrape have already been crawled by Google, and you can scrape them directly from Google Cache, especially when you don't need the very latest version of a page. You can get the whole HTML of a page, including its pictures and other files, from Google Cache.

Google is a very large website, and the requests your scraper sends to it at any time of day will not noticeably affect its servers, although Google does rate-limit and block aggressive scrapers.

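The same can't be said of other websites, whose servers can easily get overwhelmed by requests. Even Scrapy's documentation advises using Google Cache to fetch pages instead of hitting a website directly, where possible. Keep in mind, though, that Google removed cache links from its search results in 2024, so cached copies may no longer be available for the pages you need.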

Conclusion

Web scraping is a serious business that requires careful planning and execution, especially if you are doing it at a reasonable scale. As you plan, there are some key best practices you have to consider, and the most important of them have been discussed above.