Are you new to the world of web crawling and web crawlers? This introductory article explains what they are, how they work, and much more.

The world wide web is filled with automation bots that help automate tasks on the Internet. While there are many web bots available, some of the most popular ones, which have proven useful not only to their owners but to the Internet community at large, are known as web crawlers.

In this article, you’re going to learn a lot about web crawlers, including how they work, their applications, the problems crawler developers face, crawler identification, and how to develop them. Take this as a beginner’s guide to web crawling and web crawlers. Let’s start with what web crawlers are.

What is a Web Crawler?

A web crawler is a computer program that automates visiting websites on the Internet, either to build a web index or to collect specific data. Web crawlers are also known as web spiders, robots, or simply bots. The names web crawler and spider are more precise, because many other programs, such as scrapers, can also be called robots or bots without being web crawlers.

They carry out their tasks through a process known as web crawling. The Internet is a network of links, so a web crawler visits the URLs it already knows about to discover URLs it does not yet know, searching for the specific data points it needs.

Web crawlers are incredibly important to search engines. Every major search engine runs its own crawlers, which go around the Internet visiting web pages and building an index of them. When you send in a query, the search engine uses that index to find where the information you requested lives on the Internet.

Some web crawlers are highly specialized in their tasks. While web crawlers are generally seen as beneficial, they can also be harmful, as in the case of blackhat web crawlers built for sinister motives.

How Do Web Crawlers Work?

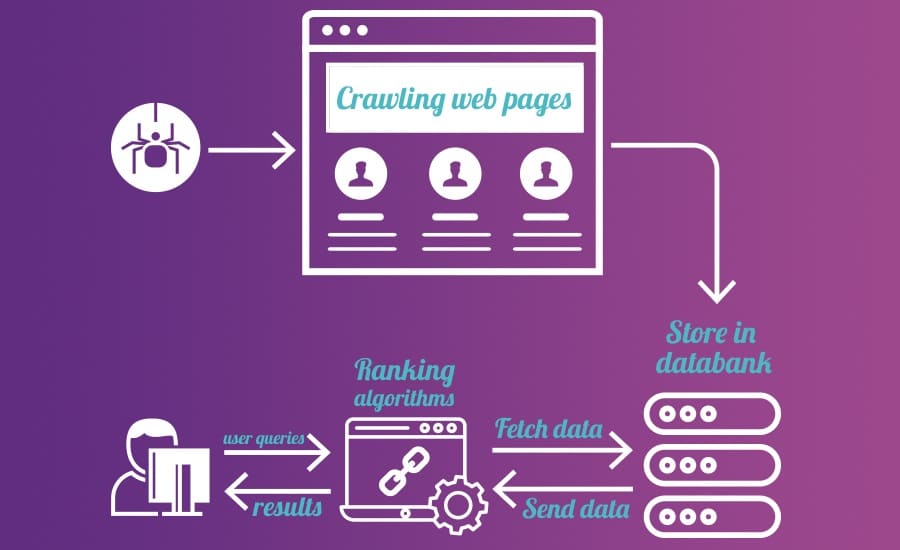

While the working mechanism of a web crawler can differ a lot depending on its specific goals, we can agree on how web crawlers work in general. At the start, a web crawler does not have a list of all the URLs on the Internet, or even of the section of the Internet it is targeting. Yet it needs to crawl those URLs to collect the data it requires. How then does it get this done?

A web crawler starts with a list of known URLs, otherwise known as seed URLs. It visits these URLs and collects two classes of data: URLs and the specific data of interest. The collected URLs are added to the list of URLs to be crawled.

The specific data points, which are the real reason for the crawl, are saved in a database or used to build an index, which itself has to be stored in a database. After crawling each page, the crawler looks at the list of URLs to be crawled and chooses the next one to visit.
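The loop described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production crawler: `FAKE_WEB`, `fetch`, `LinkExtractor`, and `crawl` are hypothetical names, and the "web" is an in-memory dictionary so the example runs without network access. A real crawler would replace `fetch` with an HTTP request.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# Hypothetical stand-in for the web: URL -> HTML body.
FAKE_WEB = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/c">C</a>',
    "https://example.com/b": '<a href="/">home</a>',
    "https://example.com/c": "no links here",
}


def fetch(url):
    """Return the page body, or None if the page does not exist."""
    return FAKE_WEB.get(url)


def crawl(seed_urls, max_pages=100):
    """Crawl outward from the seed URLs and return {url: body}."""
    frontier = deque(seed_urls)  # URLs waiting to be crawled
    seen = set(seed_urls)        # URLs already queued, to avoid repeats
    pages = {}                   # the "data of interest": here, the raw body

    while frontier and len(pages) < max_pages:
        url = frontier.popleft()
        body = fetch(url)
        if body is None:
            continue
        pages[url] = body

        # Collect the second class of data: newly discovered URLs.
        parser = LinkExtractor()
        parser.feed(body)
        for href in parser.links:
            absolute = urljoin(url, href)
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return pages
```

Calling `crawl(["https://example.com/"])` starts from a single seed and ends up visiting all four pages, even though only one URL was known at the start.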

The Internet is huge, and web crawlers, especially those owned by search engines and those meant to crawl the whole Internet, would be very inefficient if they crawled every link they came across in the order of discovery.

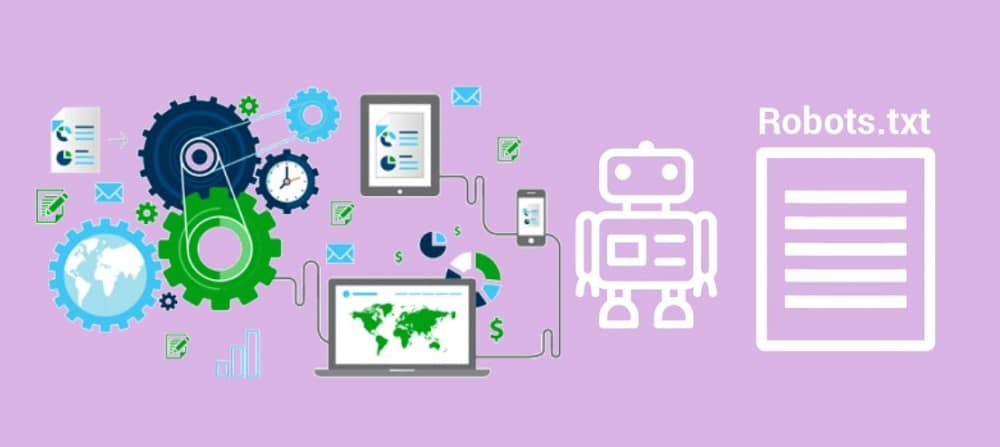

Because of this, after every page crawl a web crawler uses a set of policies to decide which link to crawl next. These policies consider how important a URL is (defined by how many URLs link to it), when the page was last crawled, and the directives in the website’s robots.txt. In the end, not all URLs get crawled.
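As a rough illustration of one such policy, the sketch below orders candidate URLs by how many already-crawled pages link to them. It covers only the inbound-link signal and ignores recrawl age and robots.txt. The function name `order_by_inbound_links` and the shape of `link_graph` are assumptions made for this example, not how any particular search engine ranks its crawl queue.

```python
import heapq


def order_by_inbound_links(link_graph, candidates):
    """Return candidates sorted so the most linked-to URL comes first.

    link_graph maps each crawled URL to the list of URLs it links to.
    """
    inbound = {}
    for source, targets in link_graph.items():
        for target in set(targets):   # count each linking page once
            if target != source:      # ignore self-links
                inbound[target] = inbound.get(target, 0) + 1

    # heapq is a min-heap, so negate the count to pop the largest first.
    # The URL itself breaks ties, which keeps the order deterministic.
    heap = [(-inbound.get(url, 0), url) for url in candidates]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[1] for _ in range(len(heap))]
```

A URL that nothing links to yet gets a count of zero and ends up at the back of the queue, which is one reason some discovered URLs may never be crawled when the crawl budget is limited.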

Crawler Identification

How Do Crawlers Identify Themselves?

Interaction on the Internet is not entirely different from our day-to-day interaction. When a browser, web scraper, web crawler, or any other computer program sends a web request to a web server, it is expected to identify itself with a string known as the “User-Agent.”

This string usually carries the name of the computer program. Some also include the version and other details useful to the web server. Websites use the User-Agent string to decide which version and layout of a web page to return as a response.

Web crawlers need to identify themselves to websites so that websites can treat them appropriately. Crawlers are expected to use names that website administrators can trace back to the crawler’s owners or developers, so that an admin who needs to communicate with the team behind a specific crawler can do so easily. A unique, distinguishable name also makes it easy to pick out the requests sent by a specific crawler. Through its robots.txt file, a website can tell specific crawlers the rules of engagement for crawling its pages.
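To make this concrete, here is a small sketch using only Python’s standard library. The crawler name `ExampleCrawler`, the contact URL, and the robots.txt content are all made up for illustration. The sketch builds a request that carries the crawler’s User-Agent and checks per-crawler rules with `urllib.robotparser`, parsing robots.txt text directly so that no network access is needed.

```python
from urllib.request import Request
from urllib.robotparser import RobotFileParser

# Hypothetical crawler name with a contact URL so admins can reach the owner.
USER_AGENT = "ExampleCrawler/1.0 (+https://example.com/crawler-info)"

# Hypothetical robots.txt content a site might publish.
ROBOTS_TXT = """\
User-agent: ExampleCrawler
Disallow: /private/

User-agent: *
Disallow: /
"""


def build_request(url):
    """Create a request that announces the crawler via the User-Agent header."""
    return Request(url, headers={"User-Agent": USER_AGENT})


def load_rules(robots_text):
    """Parse robots.txt text that has already been downloaded."""
    rules = RobotFileParser()
    rules.parse(robots_text.splitlines())
    return rules


rules = load_rules(ROBOTS_TXT)
# This site allows ExampleCrawler everywhere except /private/,
# and blocks every other crawler entirely.
may_fetch_blog = rules.can_fetch(USER_AGENT, "https://example.com/blog/post")
may_fetch_private = rules.can_fetch(USER_AGENT, "https://example.com/private/data")
other_bot_allowed = rules.can_fetch("OtherBot/2.0", "https://example.com/blog/post")
```

With these made-up rules, the named crawler may fetch the blog page but not the private one, and any other crawler is refused. That is exactly the kind of per-crawler treatment a distinguishable name makes possible.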

While it is ethical for crawlers to identify themselves, many do not identify themselves as they should because of crawler bias. Crawler bias is a scenario where websites favor a few selected crawlers and allow them to crawl their pages while blocking others.

Googlebot, owned by Google, enjoys this more than any other automation bot on the Internet. In fact, many websites allow only Googlebot to crawl their pages and block other crawlers. Because of this, some crawlers do not identify themselves honestly and instead use the User-Agent string of Googlebot or of a popular web browser such as Google Chrome or Firefox.

Application of Web Crawling and Crawlers

Web crawlers have a good number of applications, and in some instances, their applications overlap with those of web scrapers. Below are some of the uses of web crawlers.

- Web Indexing

Have you ever imagined the Internet without search engines? If you have, you will know that without them, few people would know that many websites exist. As stated earlier, search engines all have crawlers. These crawlers crawl the Internet, collecting snapshots of web pages and creating a web index, so that when you send in a query, the search engine can search through the index and point you to relevant pages.

Aside from web indexing, the other application of web crawlers is collecting specific data from websites. This is the area where they overlap with web scrapers. However, unlike web scrapers, which are more specific and know in advance which URLs to visit, web crawlers do not: they work from the known to the unknown. Popular kinds of data collected include contact details for market prospecting, price data, social media data, and much more.

- Exploit Detection

For hackers, crawlers can be incredibly useful for exploit detection. While having a specific target can be helpful, in some instances hackers do not have one. In that case, they use web crawlers that go around the Internet visiting web pages and checking them against a checklist to detect opportunities for exploits. Ethical hackers do this to help keep the Internet safe, while malicious ones do it to exploit the detected loopholes.

- Specialized Tool Development

Aside from exploit detection programs, web crawling is important in many specialized tools, such as Search Engine Optimization tools that crawl specific websites for analysis, or tools that crawl the web to build link networks for backlink data.

Problems Faced by Web Crawlers

Web crawlers are not without their own problems. Some of the problems faced by web crawlers and their developers are discussed below.

- Website Anti-Bot Systems

Aside from Googlebot and other crawlers owned by search engines, many websites do not want any automation bot, whether a web crawler or a scraper, to access their pages. Automated access is frowned upon by many websites because of content theft and hijacking.

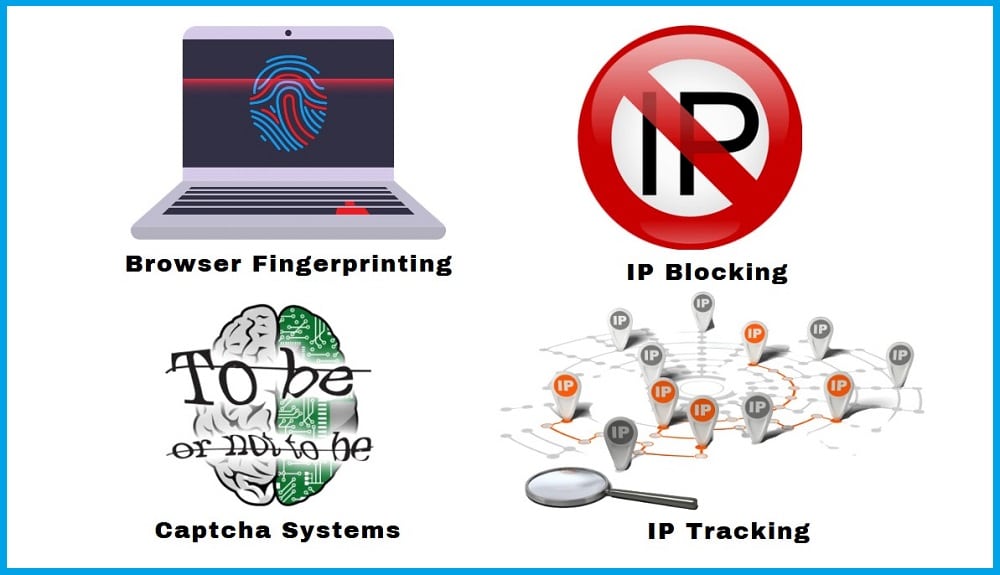

Some do not like crawlers because they slow down servers and increase running costs. Because of this, websites put up anti-bot techniques such as IP tracking and blocking, CAPTCHA systems, and even browser fingerprinting. If a crawler is not among the allowed crawlers on a website, it has to circumvent these anti-bot techniques to access the website’s content.

- The Deep Web

No matter how a crawler tries to crawl the Internet, there are still sections of the Internet that web crawlers cannot reach, especially crawlers without prior knowledge of the pages they will visit. In fact, according to some reports, only about 40 to 70 percent of the web pages on the Internet are accessible using crawlers.

These are publicly available web pages. Other pages, including new and orphaned pages not linked to by any other web page, won’t be discovered by web crawlers. Private pages, such as the ones behind paywalls and logins, are also left out.

- JavaScript Rich Pages

Just in case you do not know, most crawlers on the Internet struggle when they hit an Ajaxified web page. This is because websites were traditionally designed to be static, and the model is simple: send a web request, and an HTML file with the requested content is returned.

Crawlers have been designed for this model, and all they have to do is parse out the required data. The arrival of AJAX has changed this, as content on a page now loads and appears only after certain JavaScript events. Because traditional web crawlers are not built for this, they find it difficult to extract data from these web pages. However, some web crawlers make use of screen scraping and other methods to solve this.


How Web Crawlers Differ from Web Scrapers

The terms web crawler and web scraper, and their associated processes (crawling and scraping), are used so interchangeably that many people think they are the same. While both deal with extracting data from web pages through automated means, they are not the same. A web crawler usually does not have a complete list of the URLs it will visit. It has only seed URLs, and from there it finds the other URLs it needs to visit. A web scraper, however, knows exactly which pages it needs to visit and has complete knowledge of the pages it will scrape.

Web scrapers are disliked by many websites, which see them as content thieves, while many web crawlers, especially search engine crawlers, are welcomed by websites.

While a web scraper has to parse out, in fine detail, the specific data it requires, a web crawler might not be that specialized. With all this being said, keep in mind that web crawling, in the strict sense of the term, requires some level of web scraping.

How to Build Web Crawlers

When you build a simple web crawler that crawls a few thousand pages, you may get the notion that building web crawlers is an easy task.

However, when you need to build a web crawler that works at a large scale, crawls millions of web pages it knows nothing about, and does so continuously every day, you will find that it is not an easy task. You will need to think carefully about its engineering and prevent database and I/O issues.

Generally, web crawlers can be built with any programming language you wish. While Python seems to be the language of choice among many developers of small crawlers, C and C++ are used heavily by the big players. The specific requirements of your project will determine the features you incorporate in your crawler. In every case, though, you need to keep a list of URLs to be visited and add to it whenever you encounter a new URL.

However, normalize the URLs so that your list does not contain duplicates. A large-scale crawler cannot be efficient using a single thread. It has to be multithreaded, and you must make sure that concurrent access does not cause problems for the database.
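URL normalization can be sketched with the standard library alone. The rules below (lowercase the scheme and host, drop default ports and fragments, use "/" for an empty path) are a common but deliberately small subset chosen for this example. The helper names `normalize_url` and `dedupe` are hypothetical, and a real crawler may also need to handle query parameter order, trailing slashes, and similar cases.

```python
from urllib.parse import urlsplit, urlunsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def normalize_url(url):
    """Return a canonical form of url so equivalent URLs compare equal.

    Simplification: any user:password@ part of the URL is discarded.
    """
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()
    netloc = host
    # Keep the port only when it is not the default for the scheme.
    if parts.port is not None and parts.port != DEFAULT_PORTS.get(scheme):
        netloc = f"{host}:{parts.port}"
    path = parts.path or "/"
    # Drop the fragment: it is never sent to the server.
    return urlunsplit((scheme, netloc, path, parts.query, ""))


def dedupe(urls):
    """Normalize urls and keep the first occurrence of each, in order."""
    seen = set()
    unique = []
    for url in urls:
        key = normalize_url(url)
        if key not in seen:
            seen.add(key)
            unique.append(key)
    return unique
```

With these rules, `HTTP://Example.com:80/a#x` and `http://example.com/a` collapse into a single entry, so the crawler does not fetch the same page twice under two spellings.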

For ethical reasons, it is important that you respect robots.txt and avoid crawling websites that do not want to be crawled. Honoring robots.txt is not compulsory, though. Web crawling is generally legal, but it can be illegal depending on what you use the data for, so it is best to consult a lawyer. Giving your crawler a unique User-Agent is also good practice, but for some obvious reasons, you might want to mimic regular browsers.

Conclusion

Web crawlers are an important part of the modern web. Whether or not you like them or want to admit their importance, the Internet has come to live with them, and they are here to stay. Without them, there wouldn’t be any efficient search engine to help us discover content online. However, some of them can be unethical in their practices.