Are you looking for the best proxy API for web scraping and crawling? Read on to discover the best ones on the market. You will also learn why you should use them and what their downsides are.

Are you new to web scraping and proxy management? Chances are that your web scraper keeps getting blocked and asked to solve Captchas. If this happens often, you might want to stop using general-purpose proxies altogether and switch to proxy APIs, which are optimized for web scraping. Even though most proxy providers claim that their proxies are optimized for web scraping, only a few are. Most of them are general-purpose proxies built with little consideration for the unique requirements of web scraping.

Proxy APIs for web scraping take into account what successful scraping requires. While some providers offer only these APIs, others are web scraping services that let people use their private proxy pools. Generally, providers of proxy APIs for scraping do not disclose much about their proxy pools, so you won’t know whether their proxies are self-built or rented from proxy providers. However, their pricing is much more flexible than that of regular proxy services, as it is based on the number of successful requests sent.

What is a Proxy API for Scraping?

Proxy APIs for web scraping are specialized scraping proxy systems that take care of not only proxies but also headless browsers for you. Some proxy APIs go as far as handling Captchas.

While regular proxies are priced based on either bandwidth usage or the number of ports, proxy APIs are priced based on the number of successful requests. They are quite useful when you want to delegate proxy management, and they handle it effectively by using IP rotation systems designed to avoid blocks.
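The IP rotation described above happens on the provider's side, but the basic idea can be sketched client-side. The minimal Python sketch below assumes a hypothetical list of proxy addresses (taken from the 203.0.113.0/24 documentation range, not real servers) and hands out a different one for each request in round-robin order; real proxy APIs use their own, usually smarter, rotation logic.

```python
from itertools import cycle
from urllib.request import OpenerDirector, ProxyHandler, build_opener

# Hypothetical proxy addresses (documentation IP range), not real servers.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class RoundRobinRotator:
    """Return the next proxy in the pool on every call, wrapping around at the end."""

    def __init__(self, proxies: list[str]) -> None:
        if not proxies:
            raise ValueError("the proxy pool must not be empty")
        self._pool = cycle(proxies)

    def next_proxy(self) -> str:
        return next(self._pool)


def opener_for(proxy: str) -> OpenerDirector:
    """Build a urllib opener that routes HTTP and HTTPS traffic through one proxy."""
    return build_opener(ProxyHandler({"http": proxy, "https": proxy}))
```

Each request would then call `opener_for(rotator.next_proxy()).open(url)`, so consecutive requests leave from different IPs.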

Why You Should Use a Proxy API for Scraping

I don’t know about you, but I wouldn’t rush into using a proxy API for all of my web scraping jobs unless it is required. So, why do people use them? Let’s take a look at a few reasons below.

Good for New Proxy Users

At first, you might think that using proxies is easy, especially if you are carried away by the marketing gimmicks of proxy providers. However, once you start using proxies at a reasonable scale, you will find that proxy management is not an easy task. As a newcomer to proxies, you might get things mixed up and feel overwhelmed.

To avoid all of this, you can use proxy APIs, as they are friendly to proxy newcomers. When using a proxy API, you send URLs to the scraping proxy API and get the web page data back.
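In code, this "send a URL, get the page back" workflow is usually a single HTTP GET. The Python sketch below assumes a hypothetical endpoint (`https://proxy-api.example.com/v1/`) and hypothetical `api_key`/`url` parameter names; each provider documents its own endpoint and parameters, so treat this as an outline rather than any specific provider's API.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Hypothetical endpoint; real providers publish their own base URL and parameter names.
API_ENDPOINT = "https://proxy-api.example.com/v1/"


def build_request_url(api_key: str, target_url: str, **options: str) -> str:
    """Return the proxy API URL that asks the service to fetch target_url."""
    params = {"api_key": api_key, "url": target_url, **options}
    # urlencode percent-encodes every value, including the target URL itself.
    return f"{API_ENDPOINT}?{urlencode(params)}"


def fetch_page(api_key: str, target_url: str, timeout: float = 60.0, **options: str) -> str:
    """Send one request through the proxy API and return the page HTML as text."""
    with urlopen(build_request_url(api_key, target_url, **options), timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

Extra provider options (for example a hypothetical `render="true"` flag for JavaScript rendering) are passed as keyword arguments and end up as additional query parameters.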

If you're looking for proxies for scraping, I recommend NetNut's mobile proxies. They are a great choice I recently discovered.

NetNut Mobile Proxies for Web Scraping

- Proxy Pool Size: Over 52 million mobile IPs worldwide

- Supports Geotargeting: Yes

- Cost: Starts at $300 for 20GB

- Free Trials: 7-day free trial available

- Special Functions: Target country-level restrictions with an extensive location list

NetNut's proxies are a difference maker for advertising services and digital marketing agencies seeking fast and reliable internet connections. With these mobile proxies, you can access restricted content, avoid CAPTCHAs and IP bans, and bypass even the smartest geo-restriction mechanisms effortlessly.

NetNut offers an extensive pool of over 52 million IPs worldwide, with targeting available at the country level. No matter where you are, you'll have an optimal IP pool to support your data collection needs. NetNut's high uptime is guaranteed, ensuring continuous, uninterrupted connectivity for your critical business tasks.

NetNut's proxy network utilizes real mobile phone IPs, unlike others, resulting in efficient and effective web scraping. The automatic mobile IP rotation is what guarantees smooth data collection without CAPTCHAs or IP blocks, enhancing your productivity and efficiency. With speedy 4G/LTE, you can extract data rapidly and stay ahead of your rivals.

For companies and professionals who like to be in control of their own processes, NetNut grants you the flexibility of custom rotation for residential and mobile proxies. This feature is perfect for managing ad campaigns, fine-tuning data extraction processes, and bolstering privacy measures, giving companies and managers greater control over their operations.
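Custom rotation usually means choosing how long to keep one IP before switching (often called a sticky session). NetNut's actual configuration options are not shown here; this is a generic Python sketch with a hypothetical `requests_per_ip` setting that reuses one proxy for a fixed number of requests before moving on to the next.

```python
from itertools import cycle


class StickyRotator:
    """Hand out the same proxy for `requests_per_ip` requests, then move to the next one."""

    def __init__(self, proxies: list[str], requests_per_ip: int) -> None:
        if not proxies:
            raise ValueError("the proxy pool must not be empty")
        if requests_per_ip < 1:
            raise ValueError("requests_per_ip must be at least 1")
        self._pool = cycle(proxies)
        self._per_ip = requests_per_ip
        self._current = next(self._pool)
        self._used = 0  # requests already sent through the current proxy

    def next_proxy(self) -> str:
        # Switch to the next proxy once the current one has served its quota.
        if self._used == self._per_ip:
            self._current = next(self._pool)
            self._used = 0
        self._used += 1
        return self._current
```

Setting `requests_per_ip=1` reduces this to rotating on every request, while larger values keep a session on one IP, which matters for sites that tie logins or carts to an address.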

Finally, there is NetNut's city-level precision targeting, which gives companies unmatched accuracy with NetNut's mobile proxies. Whether it's for precise geo-targeted campaigns, local market research, or regional brand protection, the city-level targeting feature allows you to fine-tune your data extraction processes. This makes it ideal for executives and internet companies seeking precise data insights.

We love a free trial and NetNut didn’t disappoint in that regard. You can start experiencing NetNut’s mobile proxy capabilities with a 7-day free trial today.

NetNut offers impressive pricing plans for its mobile proxy services that caught our attention. Starting with a 50GB plan at $950 per month, its packages scale up significantly, providing more bandwidth with minimal price increases. In fact, the larger plans offer bandwidth increases of over 400% and 900% without a proportional rise in price. This makes NetNut's pricing structure highly attractive for companies with varying data requirements.

They Are Equipped with Scraping-Specialized Functions

One of the things proxy APIs handle is headless browser automation, and you will probably agree that handling headless browsers yourself is not easy. You will appreciate this when you need to scale a headless Chrome grid, which requires a lot of engineering time and knowledge, not to mention the financial cost attached to it. Some proxy APIs also support Captcha solving.

You Only Pay for Successful Requests

One of the major reasons to use a proxy API is that pricing is based on the number of successful requests. Because of this, providers are always fine-tuning their systems to increase their success rates, which makes sense and might explain why those rates are high. Note, however, that your subscription has an expiry date attached to it.
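To illustrate pay-per-success billing, the Python sketch below tracks a prepaid balance where only successful responses consume credits. It assumes "successful" means an HTTP 2xx status and that failed attempts are free; providers define success and credit costs in their own terms, so `cost_per_success` is a hypothetical stand-in for requests that are billed at more than one credit.

```python
class CreditMeter:
    """Track a prepaid balance where only successful (2xx) responses consume credits."""

    def __init__(self, credits: int, cost_per_success: int = 1) -> None:
        self.credits = credits
        self.cost_per_success = cost_per_success
        self.successes = 0
        self.failures = 0

    def record(self, status_code: int) -> None:
        if 200 <= status_code < 300:
            if self.credits < self.cost_per_success:
                raise RuntimeError("credit balance exhausted")
            self.credits -= self.cost_per_success
            self.successes += 1
        else:
            # Under pay-per-success billing, failed attempts are assumed to cost nothing.
            self.failures += 1
```

Feeding it the status codes of a batch of requests shows how many credits are left after the batch, regardless of how many blocked attempts the provider retried along the way.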

Best Proxy APIs for Web Scraping

There are many proxy APIs on the market optimized for web scraping. Most are paid, while a few others have free plans with some limitations. We do not advise our users to use free proxy APIs, as they are not effective and come with several disadvantages. Among the paid ones, below are the best right now.

Apify

- Proxy Pool Size: Tens of thousands

- Supports Geotargeting: Yes

- Cost: Starts at $49 for $49 platform credits

- Free Trials: $5 monthly platform credits and 30-day trial of proxies

- Special Functions: JavaScript-based data extraction and automation platform

Apify is a software platform that allows developers to build data extraction and automation tools called actors. Actors can be private or public, with a range of ready-made public actors on Apify Store and fully custom solutions available to enterprise customers. Freelance Apify-approved developers can even charge a monthly rental fee for their actors to earn passive income. All data scraped from sites like Facebook, Twitter, Instagram, or Google Maps using Apify tools can be downloaded in JSON, CSV, XLS, or HTML.

Apify Proxy offers a huge pool of both datacenter and residential proxies with intelligent IP rotation. It also offers Google SERP proxies that let you download and extract data from Google Search Engine Result Pages (SERPs). Apify has a specific tool that works with the Anti Captcha service to solve CAPTCHAs.

ScrapingBee

- Proxy Pool Size: Not disclosed

- Supports Geotargeting: Yes

- Cost: Starts at $29 for 250,000 API credits

- Free Trials: 1,000 API calls

- Special Functions: Handles headless browser for JavaScript rendering

ScrapingBee is a scraping API that, unlike Crawlera, handles both rotating proxies and headless browsers. With ScrapingBee's headless Chrome, you can render JavaScript pages and scrape the needed data from them. It can execute custom JavaScript snippets and waits for all JS code to execute.

They make use of the latest version of Chrome in headless mode for rendering and executing JavaScript. They have a large pool and provide support for geo-targeting. For sites such as Google and Instagram, they have already made APIs that will return JSON formatted content for you.

Crawlera

- Proxy Pool Size: Not specific – tens of thousands

- Supports Geotargeting: Yes

- Cost: Starts at $99 for 200,000 requests

- Free Trials: 10,000 requests within 14 days

- Special Functions: Avoid Captchas

The team behind Crawlera is Scrapinghub, which also developed Scrapy, a popular scraping framework for Python. Crawlera is one of the best proxy APIs on the market. Its proxy pool is not very large, ranging from a few thousand to tens of thousands of IPs. However, you can be assured that their system works.

While they do not have a Captcha solver, they use an in-house procedure to bypass Captchas. When you need a headless browser, you can use Splash, Scrapinghub's JavaScript rendering service, but you will have to pay for it separately.

Scraper API

- Proxy Pool Size: over 40 million

- Supports Geotargeting: Depends on the plan chosen

- Cost: Starts at $29 for 250,000 API calls

- Free Trials: 1,000 API calls

- Special Functions: Solves Captcha and handles browsers

Scraper API is reported to handle about 5 billion requests a month, making it one of the most popular proxy APIs for scraping on the market.

Scraper API is different from the APIs above. While those take care of proxies and headless browsers and try to avoid triggering Captchas, Scraper API can actually solve Captchas for you. With just a simple API call, you get the whole HTML of a page returned. They have over 40 million IPs in their pool, comprising datacenter, residential, and mobile proxies.

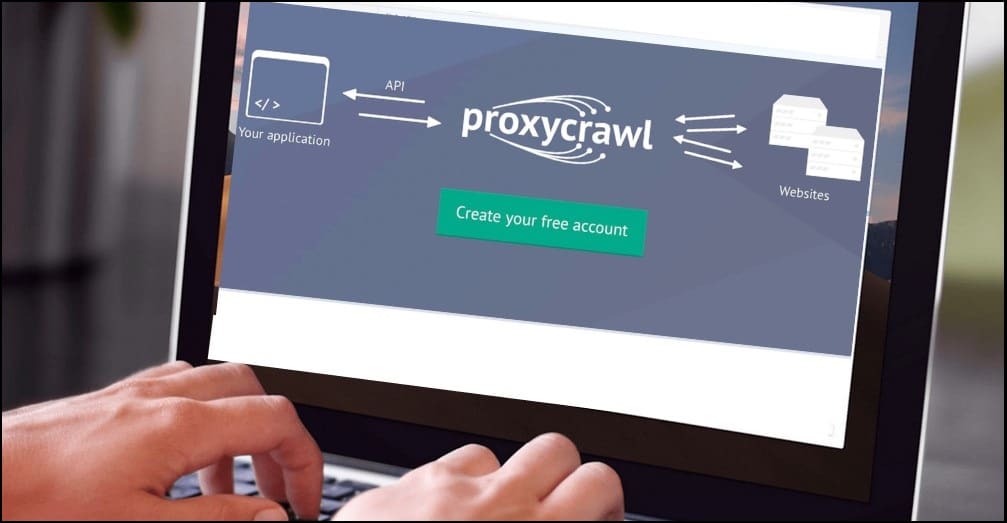

Proxycrawl

- Proxy Pool Size: Not disclosed

- Supports Geotargeting: Yes, but limited

- Cost: $21 for 10,000

- Free Trials: 1,000 requests

- Special Functions: Avoid Captchas

Proxycrawl is another web scraping service provider with a proxy API you can use to evade blocks and unlock restrictions. They have a mixed IP pool of residential and datacenter proxies, which suits many web scraping tasks. It can also help you handle Captchas and render JavaScript. Proxycrawl's proxy API supports more than a million sites, including all the popular websites on the Internet. With just one call to their API, you can have a whole page downloaded for you.

Zenscrape

- Proxy Pool Size: Over 30 million

- Supports Geotargeting: Yes, but limited

- Cost: $8.99 for 50,000

- Free Trials: 1,000 requests

- Special Functions: Handles headless Chrome

Zenscrape is another proxy API that’s perfect for web scraping. With Zenscrape, you only need to worry about parsing data as a simple API call will return the content of a page for you. Most importantly, all requests are executed using the latest version of Chrome, making sure that you see the right data – and JavaScript rendering is handled. Zenscrape has a proxy pool with 30 million IPs in it. Zenscrape has friendly pricing and just like the others above, it has a free trial plan for new users to test before making a monetary commitment.

Downsides of Using Proxy APIs

While there is no doubt that proxy APIs are quite helpful for beginners and for anyone who does not want to worry about blocks and managing proxy servers, they also have downsides. Some of them are discussed below.

They are Expensive

The number one downside of using proxy APIs is that they are expensive. While the cost is justifiable because they take over proxy management, browser handling, and, with some providers, Captcha solving, they are still expensive and arguably overpriced. For instance, 200,000 requests will exhaust a $99 Crawlera Starter plan. For some web scraping jobs, that plan will be used up in a few hours.
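To make the Crawlera example concrete, the Python sketch below works out the per-request price and how long the plan lasts. The $99 and 200,000 figures come from the text; the steady rate of 20 requests per second is an assumption chosen only for illustration.

```python
def cost_per_request(plan_price: float, plan_requests: int) -> float:
    """Dollars spent per request when the whole plan is used."""
    return plan_price / plan_requests


def hours_until_exhausted(plan_requests: int, requests_per_second: float) -> float:
    """Hours of continuous scraping before the plan's request quota runs out."""
    return plan_requests / requests_per_second / 3600


price = cost_per_request(99, 200_000)       # 0.000495 dollars, about $0.50 per 1,000 requests
hours = hours_until_exhausted(200_000, 20)  # 10,000 seconds, roughly 2.8 hours at an assumed 20 req/s
print(f"${price * 1000:.3f} per 1,000 requests; quota gone after {hours:.2f} hours")
```

Plugging in your own scraper's request rate shows whether a plan will last a month or, as here, an afternoon.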

Content Returned Might Not Be What You Expect

Another problem with proxy APIs is that they might return the wrong kind of data. For instance, some proxy APIs fail to return images and videos. Some can even return the wrong data for geotargeted content. Because of this, it is advisable to use a provider's free trial first and check that it works as you want. You can also avoid some of these problems by encoding URLs correctly and using the wait parameter so JavaScript finishes executing. Enabling the provider's premium proxy option (for example, a premium=true parameter) can also help.
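Encoding matters because the target URL is itself passed as a query parameter. The Python sketch below, using a hypothetical endpoint and hypothetical `api_key`/`url` parameter names, shows that pasting a target URL in raw lets its own `&page=2` leak out as a separate parameter, while percent-encoding it with `urllib.parse.quote` keeps it intact.

```python
from urllib.parse import parse_qs, quote, urlsplit

# Hypothetical endpoint; real providers document their own base URL and parameter names.
API_BASE = "https://proxy-api.example.com/v1/"
TARGET = "https://example.com/search?q=proxy api&page=2"


def naive_request_url(api_key: str, target: str) -> str:
    # Buggy: the target's own '?' and '&' are pasted in unescaped.
    return f"{API_BASE}?api_key={api_key}&url={target}"


def encoded_request_url(api_key: str, target: str) -> str:
    # safe="" also escapes '/' and ':', so the whole target travels as one value.
    return f"{API_BASE}?api_key={quote(api_key, safe='')}&url={quote(target, safe='')}"


def params_seen_by_api(request_url: str) -> dict[str, list[str]]:
    """Split the query string roughly the way a server would."""
    return parse_qs(urlsplit(request_url).query)
```

With the naive version, the service would fetch `https://example.com/search?q=proxy api` and treat `page=2` as one of its own options; the encoded version delivers the full target URL.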

Privacy is of Major Concern

This problem is not limited to proxy APIs. Any proxy network you use can monitor your traffic, so data privacy issues can’t be ruled out. That is why you need to make sure you are using a trusted provider that has been proven not to snoop on its users' traffic.

FAQs About Proxy APIs

Are There Free Proxy APIs in the market?

Yes, there are free proxy APIs in the market, but we always advise our users against using free proxy networks, and proxy APIs are not excluded.

Are Proxy APIs Unblockable?

Forget what you hear; proxy APIs are not unblockable. But to a large extent, they have proven better at avoiding blocks, and when blocks occur, they have ways around them, such as handling Captchas. However, in some cases they will still fail and report the failure back to you after many retries.
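Since proxy APIs can still fail after their own retries, some scrapers add a small client-side retry on top. The Python sketch below assumes a hypothetical `fetch` callable (for example a function that calls your provider's API and raises on failure) and retries it with exponential backoff; the attempt count and delays are illustrative, not provider recommendations.

```python
import time
from collections.abc import Callable
from typing import Optional


def fetch_with_retries(
    fetch: Callable[[str], str],
    url: str,
    max_attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch(url), retrying with exponential backoff before giving up."""
    last_error: Optional[Exception] = None
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except Exception as exc:  # a real client would catch narrower error types
            last_error = exc
            if attempt < max_attempts - 1:
                # Wait 1s, 2s, 4s, ... between attempts (with the default base_delay).
                sleep(base_delay * 2 ** attempt)
    raise RuntimeError(f"giving up on {url} after {max_attempts} attempts") from last_error
```

Because most providers bill only successful requests, these extra attempts usually cost nothing when they fail, though they still count against your time budget.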

How Do Proxy API Providers Get Their Proxies?

Proxy API providers do not disclose the source of their proxies. Some of them might buy proxies from regular proxy providers on the market, while others build their proxy pools themselves. Whichever the case may be, you do not have to worry much, as you only pay for successful requests. And if you are unable to get them to work for you, you can simply ask for a refund.

Conclusion

Proxy APIs can save you from worrying about blocks and handling browsers and Captchas. They serve as smart downloaders and return a whole page with just an API call. Above are some of the best proxy APIs you can use for web scraping. However, be ready to spend more on them than you would on regular proxies.