When many people think of web proxies, they think of borderline illegal activities. Proxies have something of a bad reputation thanks to spambots, black hat SEOs, and 4chan hackers. When proxies are associated with DDoS attacks and spam, it’s hard to talk about them openly.

There are plenty of legitimate uses for proxies, though, and one of them is data mining. The web is packed full of awesome data resources, but a lot of them are hidden behind gates through which you need to make requests. Some of them, of course, put paywalls in these gates to make money. Others simply use them as a way to filter traffic to prevent excessive access.

These gates are the bane of every data miner. You want a large quantity of data from a publicly accessible database. You try to access it, and the first dozen or so requests work fine. Then you find your page timing out or your requests bouncing due to rate limits and excessive use bans.

Avoiding IP and Account Bans

When a server like this bans a user for excessive use, it’s either an account ban or an IP ban. Account bans are difficult to deal with. After all, you need to make a new account and jump through all of the hoops to verify it.

I don’t recommend trying to use the proxy method to get around this, though if you have a script to fill out and verify profiles, you can do so easily enough. Creating and using many accounts in sequence is likely to lead to an IP ban anyway.

An IP ban, obviously enough, blocks the IP address you’re using to connect to the Internet. If you want to get around it, you need to access the offending website from a different IP address. This is where web proxies come in.

The point of a web proxy, I’m sure you know, is to anonymize your traffic by shuttling it through a third party server. It’s like using a virtual assistant to call for take-out, or a chauffeur to drive you from place to place. You send your traffic request to the proxy, the proxy ferries it on, it gets the results and delivers them back to you.

Rate Limits and Usage Limits

There are a few problems with using proxies in this manner. For one thing, public free proxy lists tend to have either rate limits or usage limits, typically alongside a bandwidth limit.

If you’re making a lot of small requests, or several large requests, your operation is likely to time out partway through the process. This is extremely frustrating, as you might imagine, particularly when trying to complete single large requests that can’t be resumed easily.

Some proxies also render your content in an iframe or use interstitial ads on every third or so request, in order to make some money. Depending on the automatic system you’re using to harvest data, this can add junk fields or break your script.
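One way to guard against injected junk is to validate every response before storing it. The sketch below assumes the target returns JSON, which the examples later in this article happen to do; `isCleanJsonResponse` is a hypothetical helper name, and a site that returns HTML would need a different check.

```php
<?php
// Hypothetical helper: returns true only when the body parses as a JSON
// object or array. An iframe wrapper or interstitial ad page is HTML,
// so it fails to parse and is rejected.
function isCleanJsonResponse(string $body): bool
{
    $trimmed = trim($body);
    if ($trimmed === '') {
        return false;
    }
    $decoded = json_decode($trimmed, true);
    return json_last_error() === JSON_ERROR_NONE && is_array($decoded);
}

// Two made-up response bodies: one clean, one wrapped by the proxy.
$samples = [
    '{"ip": "203.0.113.7", "country": "US"}',
    '<html><iframe src="http://ads.example/"></iframe></html>',
];
foreach ($samples as $body) {
    echo isCleanJsonResponse($body) ? "keep\n" : "discard and retry\n";
}
```

A response that fails the check can be retried through a different proxy instead of being written into your data set.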

There are also all of the usual issues with using a proxy server, which is almost always located in a foreign country. This adds latency to the requests, making your operations take longer. Proxies are notoriously fickle and prone to dropping requests, all of which cause issues.

Backconnect Proxy API & Dedicated Proxies

Using an IP Rotation API for Data Mining with CURL

More and more rotating proxy providers are entering the proxy market, and many of these backconnect providers offer a residential proxy network. It works a little like the Tor network, in that your traffic is relayed through peer connections. The provider gives you a gateway server or an API through which you access its proxy network.

API with CURL Examples

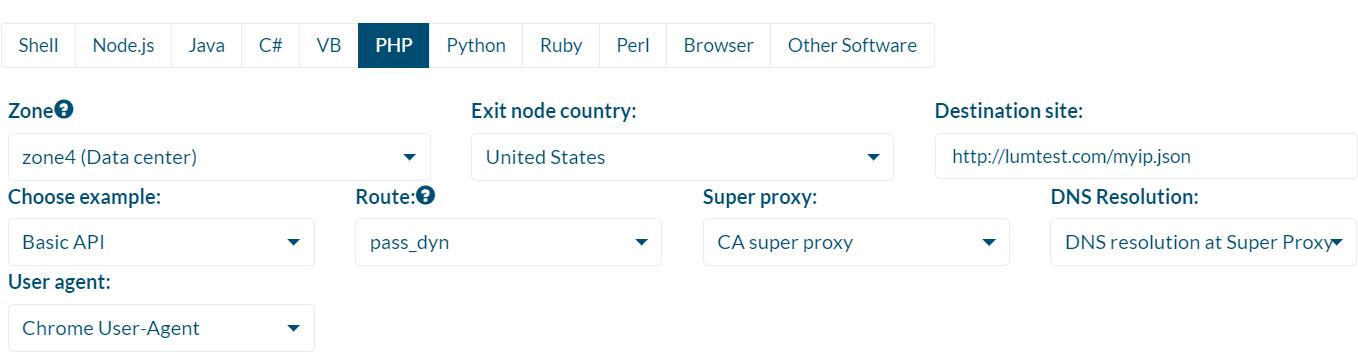

Sample usage: Luminati.io

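<?php
// Route a single request through the Luminati super proxy with PHP and cURL.
echo 'To enable your free eval account and get CUSTOMER, YOURZONE and '
    .'YOURPASS, please contact [email protected]';

// Replace these values with your own account credentials.
$username = 'lum-customer-estvpnserve-zone-zone4-route_err-pass_dyn';
$password = 'qigxme6kr73n';
$port = 22225;
$user_agent = 'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2228.0 Safari/537.36';

// Random session ID, appended to the proxy username below.
$session = mt_rand();
$super_proxy = 'servercountry-CA.zproxy.lum-superproxy.io';

$curl = curl_init('http://lumtest.com/myip.json');
curl_setopt($curl, CURLOPT_USERAGENT, $user_agent);
// Return the response body as a string instead of printing it directly.
curl_setopt($curl, CURLOPT_RETURNTRANSFER, 1);
curl_setopt($curl, CURLOPT_PROXY, "http://$super_proxy:$port");
// Country, DNS, and session options are passed as part of the proxy username.
curl_setopt($curl, CURLOPT_PROXYUSERPWD, "$username-country-us-dns-local-session-$session:$password");

$result = curl_exec($curl);
curl_close($curl);

// curl_exec() returns false on failure, so only print a real response.
if ($result) {
    echo $result;
}
?>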

Luminati has since been renamed Bright Data; our review covers the scraping performance of its proxies.

Sample usage: oxylabs.io

<?php
// In this example a query to `https://ipinfo.io` is performed from a random
// IP address in Munich, Germany.
$username = 'USERNAME';
$password = 'PASSWORD';
$country = 'DE';
$city = 'munich';
$proxy = 'pr.oxylabs.io:7777';

$query = curl_init('https://ipinfo.io');
// Return the response body as a string instead of printing it directly.
curl_setopt($query, CURLOPT_RETURNTRANSFER, 1);
curl_setopt($query, CURLOPT_PROXY, "http://$proxy");
// Country and city targeting are passed as part of the proxy username.
curl_setopt($query, CURLOPT_PROXYUSERPWD, "customer-$username-cc-$country-city-$city:$password");

$output = curl_exec($query);
curl_close($query);

// curl_exec() returns false on failure, so only print a real response.
if ($output) {
    echo $output;
}
?>

Our review covers the scraping performance of Oxylabs proxies.

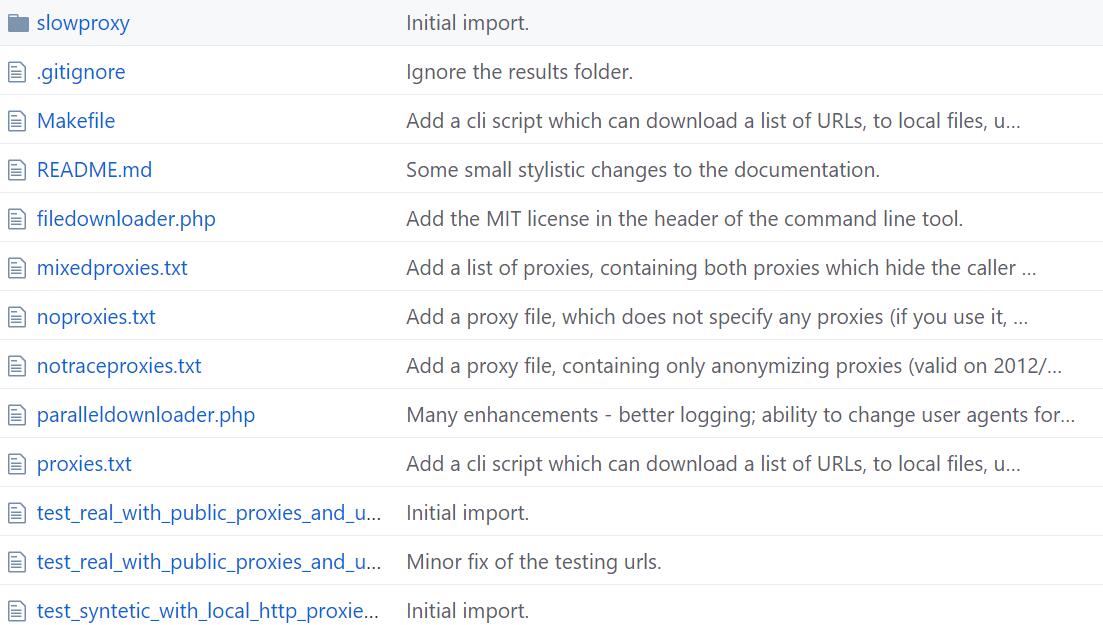

Using a Dedicated Proxy List to Retrieve Data with CURL

This is where CURL and PHP come together to solve the problem. What you do, in this case, is set up a script that rotates through a variety of proxies in a list. You have a list of requests, a list of proxies, and a target site. You make request A from proxy A, then request B from proxy B, and so on down the list. At some point, say request L, you loop back to proxy A because request A has been done for a while.
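The rotation described above comes down to a modulo over the proxy list. The following is a minimal sketch rather than a finished harvester: `assignProxies` is a hypothetical helper, and the proxy addresses and URLs are placeholders from the documentation address ranges.

```php
<?php
// Hypothetical helper: pair each request with a proxy, wrapping around
// to the first proxy once the end of the proxy list is reached.
function assignProxies(array $requests, array $proxies): array
{
    if (count($proxies) === 0) {
        throw new InvalidArgumentException('At least one proxy is required.');
    }
    $proxies = array_values($proxies);
    $plan = [];
    foreach (array_values($requests) as $i => $url) {
        $plan[] = ['url' => $url, 'proxy' => $proxies[$i % count($proxies)]];
    }
    return $plan;
}

// Placeholder data: three proxies, four requests.
$proxies = ['198.51.100.1:8080', '198.51.100.2:8080', '198.51.100.3:8080'];
$requests = [
    'http://example.com/records?page=1',
    'http://example.com/records?page=2',
    'http://example.com/records?page=3',
    'http://example.com/records?page=4',
];

// The fourth request wraps around to the first proxy.
foreach (assignProxies($requests, $proxies) as $job) {
    echo $job['url'], ' via ', $job['proxy'], "\n";
}
```

Each entry in the plan can then be handed to cURL with `CURLOPT_PROXY` set to the assigned proxy, as in the provider samples earlier.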

These are dedicated proxies, meaning a fixed list of IP:port addresses, so the rotation has to come from your script. The idea is to rotate through the list so your requests arrive in a more organic, low-rate manner. To the database site, it looks as though a dozen or so users are just making normal requests. Not only does this distribute the load of the requests you’re putting on the database; it can also cut the time it takes to process your requests significantly, since no single IP has to wait out a rate limit.

This distributes the load throughout numerous IP addresses, so it’s harder if not impossible to ban them all. If one does receive a ban, you just add another proxy to the list to replace it. In no time, your harvesting will be complete and you can leave the poor database alone to contemplate its role in life.
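Swapping out a banned proxy can be handled by a small pool object. This is a sketch under assumptions: `ProxyPool` and `looksBanned` are hypothetical names, it needs PHP 7.4 or later for typed properties, and treating HTTP 403 and 429 as ban signals is a guess that you should adjust to how your target site actually responds.

```php
<?php
// Hypothetical pool: hands out proxies in round-robin order and lets the
// caller swap out one that the target site has banned.
final class ProxyPool
{
    private array $proxies;
    private int $cursor = 0;

    public function __construct(array $proxies)
    {
        if ($proxies === []) {
            throw new InvalidArgumentException('At least one proxy is required.');
        }
        $this->proxies = array_values($proxies);
    }

    // Return the next proxy in the rotation.
    public function next(): string
    {
        $proxy = $this->proxies[$this->cursor % count($this->proxies)];
        $this->cursor++;
        return $proxy;
    }

    // Put $fresh in the slot held by $banned; false if $banned is unknown.
    public function replace(string $banned, string $fresh): bool
    {
        $index = array_search($banned, $this->proxies, true);
        if ($index === false) {
            return false;
        }
        $this->proxies[$index] = $fresh;
        return true;
    }
}

// Assumption: the site signals a ban or rate limit with 403 or 429.
function looksBanned(int $httpStatus): bool
{
    return in_array($httpStatus, [403, 429], true);
}

$pool = new ProxyPool(['198.51.100.1:8080', '198.51.100.2:8080']);
$first = $pool->next();
// Pretend the request through $first came back with HTTP 403.
if (looksBanned(403)) {
    $pool->replace($first, '198.51.100.9:8080');
}
echo $pool->next(), "\n"; // second proxy in the list
echo $pool->next(), "\n"; // the replacement, in the banned proxy's slot
```

In a real run, the status code would come from `curl_getinfo($curl, CURLINFO_HTTP_CODE)` after each request.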

Benefits of Private Proxies (Versus Shared or Public Ones)

Private proxy lists alleviate many of these issues. For one thing, you’re typically paying to access the proxies, so those proxies are clean – that is, they don’t have to add advertising to your data stream to make money. They have lower volume and can support a more consistent throughput. You’ll have fewer timeouts, fewer lost requests, and less latency.

Of course, that only alleviates the issues with the proxy; you still have the issues with the IP bans from the site you’re harvesting. You can jump from proxy to proxy each time one gets banned, but that’s tedious. Data mining requires as much automation as possible because the real fun begins with analysis rather than the harvest.

Example Script to Try

The script I like for this is written in PHP and uses CURL. It allows you to fill a file with proxy addresses and submit your requests. It also includes automatic retry logic, so when a request fails, it tries again rather than hanging or skipping to the next request. It’s surprisingly efficient the way it’s programmed, so it doesn’t overload your CPU either.
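To make the two ideas concrete, here is a minimal sketch of reading a proxy file and retrying failed requests. It is not the code of the script mentioned above: the function names, the one-proxy-per-line file format, and the choice to move to the next proxy on each retry are all assumptions made for illustration.

```php
<?php
// Hypothetical parser: one "ip:port" entry per line; blank lines and
// lines starting with "#" are ignored.
function parseProxyList(string $contents): array
{
    $proxies = [];
    foreach (preg_split('/\R/', $contents) as $line) {
        $line = trim($line);
        if ($line === '' || $line[0] === '#') {
            continue;
        }
        $proxies[] = $line;
    }
    return $proxies;
}

// Hypothetical retry wrapper: calls $attempt with each proxy in turn
// (wrapping around) until it returns a string or $maxAttempts is used up.
function fetchWithRetries(callable $attempt, array $proxies, int $maxAttempts): ?string
{
    if ($proxies === []) {
        return null;
    }
    for ($i = 0; $i < $maxAttempts; $i++) {
        $result = $attempt($proxies[$i % count($proxies)]);
        if (is_string($result)) {
            return $result;
        }
    }
    return null;
}

// One real attempt through a proxy; returns null when the request fails.
function curlAttempt(string $url, string $proxy): ?string
{
    $curl = curl_init($url);
    curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);
    curl_setopt($curl, CURLOPT_PROXY, "http://$proxy");
    curl_setopt($curl, CURLOPT_TIMEOUT, 15); // give up instead of hanging
    $body = curl_exec($curl);
    curl_close($curl);
    return is_string($body) ? $body : null;
}

// Demonstration with a stand-in for the network call: the first proxy "fails".
$proxies = parseProxyList("# my proxies\n198.51.100.1:8080\n\n198.51.100.2:8080\n");
$fakeAttempt = function (string $proxy): ?string {
    return $proxy === '198.51.100.1:8080' ? null : "fetched via $proxy";
};
echo fetchWithRetries($fakeAttempt, $proxies, 3), "\n";
```

For live requests, pass a closure that calls `curlAttempt()` with your target URL in place of `$fakeAttempt`, and load the list with `file_get_contents()` on your proxy file.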

If you’re using macOS or Linux (both POSIX systems), you can run the script directly. Windows systems will need a PHP interpreter installed to run it. You can see examples of the specific usage for downloading files in the readme on the GitHub page.

If you want to do something more advanced than downloading sample files, you’ll need to write a small script of your own to make that request. You’re on your own for that one.