You don’t want your proxies banned while harvesting data or web scraping, right? So how are proxy IPs detected, and can you avoid having them flagged? Of course you can!

Pretty much any time you’re using high-quality proxies in any significant number, you’re doing it because you want to use some kind of bot. You’re harvesting data, you’re performing bulk search queries, or something of the sort.

This is all perfectly legitimate, of course. It’d be a different story if you were trying to use that proxy list and set of bots to DDoS someone, but that’s just not a good idea. For one thing, it’d be a very mediocre, ineffective DDoS. You really need a botnet for that.

Anyway, the point is, you don’t want to get your proxies banned while you’re in the middle of using them to harvest data. Your data will end up incomplete and, in instances where the data changes frequently, you’ll end up with an unusable table. By the time you’ve set up new proxies to harvest the rest, the first chunk may have changed.

That’s not always the case. Still, it’s universally an annoyance at best when a proxy IP gets banned out from under you. It prevents the smooth operation of your task, it drags you out of whatever else you were doing to fix it, and it wastes time. So, why not take steps to avoid getting those IPs banned in the first place?

To understand ban avoidance, you need to know how proxy IPs are detected in the first place. Think about what, to a site like Google or Amazon, ends up looking like a red flag.

- A bunch of similar queries coming in all at once.

- A bunch of similar queries coming in from the same identified browser.

- A bunch of similar queries coming from irrelevant geolocations.

- A bunch of queries using high-risk search terms.

These are the sorts of actions that get an IP flagged, but they’re also the sorts of actions you might be performing.

If you wanted to scrape the top 10 pages of Google search results to analyze the titles of blog posts for a certain search term, all on one website, you’d want to use the site: operator, right? Operators like that may eventually trigger captchas, and failing one can get an IP blocked.

Let’s talk about the various steps you should take to avoid being flagged, shall we?

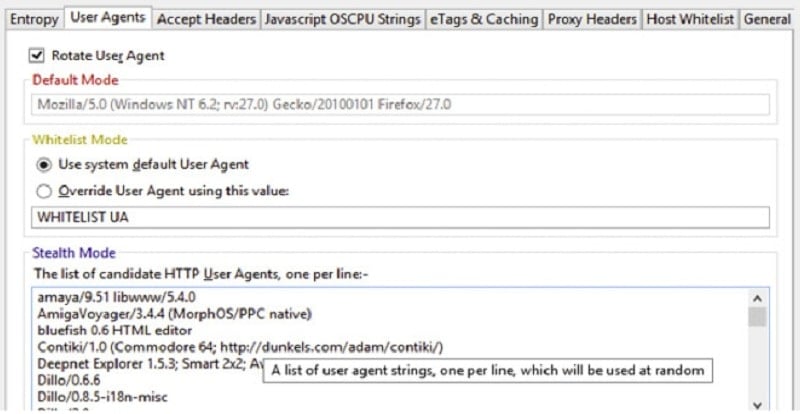

Set a Unique User-Agent for Each IP

The user agent is a header: a string of data that accompanies each request from your computer to the server of the website you visit.

The user agent carries some nominally anonymous information about your setup: essentially, the browser and version you’re running and your operating system, often including the Windows version, and sometimes other data. Your preferred language travels alongside it in a related header, Accept-Language.

Someone running an up-to-date Chrome installation in English will send the same user-agent data as someone else using the same software. Someone using the same version of Chrome in French will send a slightly different set of headers.

The problem with the user agent is that it’s identifying information, no matter how anonymous it is. If Google sees 10 search queries performed in the same second, all from the same two-updates-back version of Firefox, all looking for the same sort of information, it can reasonably assume that those 10 queries are one job split across 10 bots.

User-agent information can vary from connection to connection and from bot to bot, so you can configure each of your proxies to use a different user agent. This further obscures the connection between them, so the traffic looks more like it’s coming from separate legitimate users. The more you can avoid patterns, the better off you are.
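Here’s a minimal sketch of that idea in Python, assuming you keep your proxies and a pool of user-agent strings as plain lists. The proxy addresses (documentation-range IPs) and user-agent strings are placeholders, not recommendations, and `assign_user_agents` is a hypothetical helper. Each proxy gets one user agent for the whole session, so each IP consistently looks like a single browser, and no user agent repeats until every one in the pool has been handed out.

```python
import random

# Placeholder user-agent strings; in practice you'd keep a larger, current list.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) ExampleBrowser/2.0",
    "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/3.0",
]

def assign_user_agents(proxies, user_agents, seed=None):
    """Map each proxy to its own user agent (hypothetical helper).

    Shuffles the pool and hands out strings without repeats; once the pool
    is exhausted it is reshuffled and reused.
    """
    if not user_agents:
        raise ValueError("need at least one user agent")
    rng = random.Random(seed)
    assignment = {}
    pool = []
    for proxy in proxies:
        if not pool:
            pool = list(user_agents)
            rng.shuffle(pool)
        assignment[proxy] = pool.pop()
    return assignment

proxies = ["203.0.113.10:8080", "203.0.113.11:8080", "203.0.113.12:8080"]
for proxy, ua in assign_user_agents(proxies, USER_AGENTS, seed=1).items():
    print(proxy, "->", ua)
```

Each bot would then send its requests with `{"User-Agent": assignment[proxy]}` as a header.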

The Electronic Frontier Foundation did an interesting study on how identifying this “anonymous” information can really be. Their write-up includes examples of user-agent strings and the kind of information each one conveys.

Avoid High-Risk Geolocations

IP addresses are just that: addresses. They identify where the connection being received comes from. From the IP address alone, I can usually tell what country a user is coming from.

A proxy server masks that, of course. It changes your visible IP by acting as a middleman in the communications. I can be in California, sending a connection to New York, but if I use a proxy IP in Algeria, that server in New York will see traffic coming from Algeria. It can’t see past the proxy server, so it doesn’t know I’m actually in California.

Now, Algeria may seem like a strange source for traffic, and it is. Traffic from unusual locations is a warning sign of many things, from proxy usage to fraud. If you’ve ever gotten a phone call claiming to be from your bank, but with a Nigerian prince on the other end, you know how big an issue this kind of spoofed communication can be.

The solution to this problem is to use high-quality proxies in low-risk countries. Ditch the Russian, Ukrainian, and Middle Eastern proxies.

Instead, opt for proxies that originate from North America or Western Europe. Traffic from these regions is much more likely to be local to the sites you’re targeting than traffic from Russia.

Always try to consider the service area of the site you’re targeting. If you’re trying to harvest data from Google, try to avoid using proxies from a location that has its own version of Google.


Yes, a lot of people still use the main .com version of Google rather than their country’s local version, but it’s still one more warning sign. Most of the time this alone won’t get your proxy banned, but combined with other signals it can be the deciding factor.
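If your provider tells you where each proxy is located, screening the list is straightforward. This sketch assumes each proxy comes as an (address, ISO country code) pair; that format is an assumption, and the allow-list is only an illustration of the North America / Western Europe preference above, not a definitive list. `filter_by_country` is a hypothetical helper.

```python
# Illustrative allow-list: North America plus a few Western European countries.
ALLOWED_COUNTRIES = {"US", "CA", "GB", "IE", "FR", "DE", "NL", "BE"}

def filter_by_country(proxies, allowed=ALLOWED_COUNTRIES):
    """Keep only proxies whose country code is in the allowed set.

    `proxies` is an iterable of (address, country_code) pairs, e.g. as
    exported from a provider's dashboard (format assumed for this sketch).
    """
    return [addr for addr, country in proxies if country.upper() in allowed]

proxies = [
    ("203.0.113.10:8080", "US"),
    ("203.0.113.11:8080", "dz"),
    ("203.0.113.12:8080", "DE"),
]
print(filter_by_country(proxies))  # ['203.0.113.10:8080', '203.0.113.12:8080']
```

For a target like google.com, where the advice above is to avoid countries with their own local Google, you’d narrow the set further, e.g. `filter_by_country(proxies, {"US"})`.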

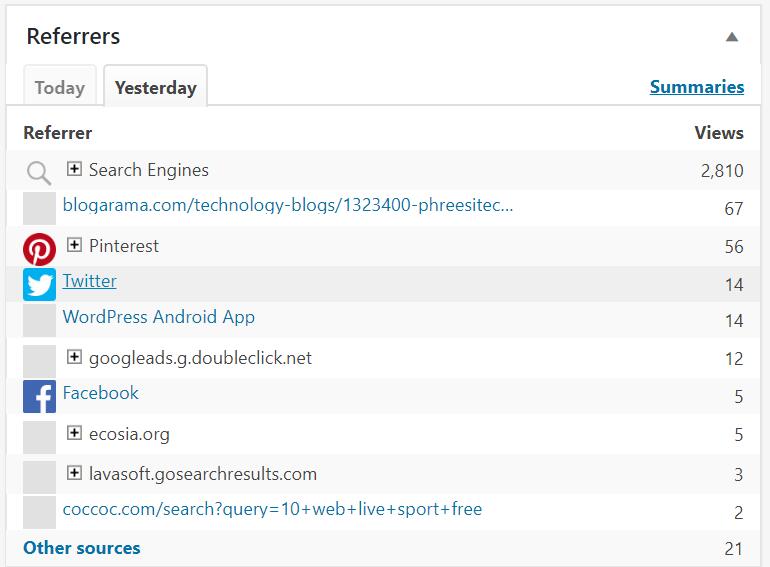

Set a Native Referrer Source

The referrer is a different sort of information, but again, it’s another piece of information you’re giving the site that receives your traffic. As with the above, any information you send can be used to identify what you’re doing.

In this case, a referrer is where the site thinks you came from. If I go to a new tab in my browser and type in www.google.com and hit enter, that shows up as direct traffic with no referrer.

That’s fine for a homepage. If I type in a full search results URL, though, that’s a lot less plausible. Google expects people landing on a results page to be coming from its homepage, so showing up as direct traffic is a warning sign.

Likewise, if you’re scraping data from Amazon, they would expect your requests to be referred by another Amazon page, not to arrive as direct traffic.

The worse issue is if your referrer somehow gets set to some other site or even your own site. Then Google or Amazon or whoever will be able to see a bunch of different queries coming in very quickly, all referred by your site. That makes it painfully easy to identify as bot traffic and makes it very likely that they will block it.

The solution here is to make sure your referrer is set to be native and sensible to the location you’re querying. If you’re sending a bunch of traffic to various search results pages on Google, you want it to look like your traffic is coming from www.google.com, so that’s what you should set.
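Setting the referrer is just another request header. Note that the HTTP header itself is spelled `Referer`, a historical misspelling the standard kept. A minimal sketch, assuming you build requests with Python’s standard `urllib.request`; `native_referrer` and `build_request` are hypothetical helpers that point the referrer at the target site’s own homepage, and the URL is a placeholder. Nothing is sent over the network here.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import Request

def native_referrer(url):
    """Return the homepage of the site being requested, e.g. https://www.example.com/."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, "/", "", ""))

def build_request(url, user_agent):
    # The header name is "Referer" (single "r"), as spelled in HTTP.
    headers = {
        "User-Agent": user_agent,
        "Referer": native_referrer(url),
    }
    return Request(url, headers=headers)

req = build_request("https://www.example.com/search?q=widgets", "ExampleBrowser/1.0")
print(req.get_header("Referer"))  # https://www.example.com/
```

A homepage referrer is the simplest “native” choice; a scraper walking through result pages could instead pass the previous page’s URL.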

Set a Rate Limit on Requests

Rate limits are perhaps the number one tip for keeping your proxies from being blocked. One of the dirty little secrets of the internet is that websites, in general, don’t care all that much about bots. There are a lot of bots floating around.

Google’s search spiders are bots, as are all the other spiders for all the other search engines out there. There are bots going around searching for common security holes. There are bots just browsing content and clicking links. There are malicious bots and benign bots, and for the most part, they just exist.

The only time bots become an issue is when they start to cause trouble. Malicious bots attempting to brute force a web login is one example. That right there illustrates why rate limits are a good thing.

Think about it: when a bot is making 10 requests a second, it’s trying to do something either in bulk or very quickly. Legitimate bots like Google’s crawlers don’t need to be in that kind of hurry. Malicious bots can be caught and blocked at any time, so they need to hurry to get in first.

With your own data scraping, chances are you’re not trying to be malicious. You want to harvest your data quickly, though, because the longer it takes to harvest the volumes you’re scraping, the longer it takes to complete your project.

By implementing a rate limit, what you’re doing is telling the web server that even if you look like a bot, you’re not trying to do anything malicious. You’re not worth watching. Heck, you might not even be a bot at all. It’s that element of plausible deniability that keeps your proxies safe.
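A rate limit can be as simple as enforcing a minimum gap between requests on each proxy. A minimal sketch, assuming one limiter per proxy; the 5-second interval is an arbitrary example, not a known safe threshold for any site, and `MinIntervalLimiter` is a hypothetical class. The clock and sleep functions are injectable so the logic can be checked without actually waiting.

```python
import time

class MinIntervalLimiter:
    """Enforce a minimum gap (in seconds) between consecutive requests."""

    def __init__(self, interval, clock=time.monotonic, sleep=time.sleep):
        self.interval = interval
        self.clock = clock
        self.sleep = sleep
        self._last = None  # when the previous request was allowed through

    def wait(self):
        """Sleep just long enough to respect the interval, then record the time."""
        if self._last is not None:
            remaining = self.interval - (self.clock() - self._last)
            if remaining > 0:
                self.sleep(remaining)
        self._last = self.clock()

# Real use: one limiter per proxy, e.g. limiter = MinIntervalLimiter(5.0),
# calling limiter.wait() right before each request.

# Demo with a fake clock so nothing actually sleeps.
class FakeClock:
    def __init__(self):
        self.t = 0.0

    def now(self):
        return self.t

    def sleep(self, seconds):
        self.t += seconds

fake = FakeClock()
limiter = MinIntervalLimiter(5.0, clock=fake.now, sleep=fake.sleep)
times = []
for _ in range(3):
    limiter.wait()
    times.append(fake.now())
print(times)  # [0.0, 5.0, 10.0]
```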

Run Requests Asynchronously

This is the other tip I’d call tied for most useful. If you have 100 queries to make, and 10 bots on 10 proxies to do it, you might think you’d just send 1 query per bot per second, finish the whole thing in about 10 seconds plus however long the server takes to respond, and be good to go.

From the perspective of the server, though, that’s 10 nearly identical queries arriving instantly. That’s a huge warning sign because no legitimate user browses in that fashion. Real people – what you’re trying to imitate, more or less – browse from one item to the next to the next.

If you have 10 bots, then, you should stagger them so there’s a 1-2 second delay between queries. Ten bots should look like ten individual users with different browsing habits, not like ten identical users offset by a second from each other.
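One way to get that staggering is to run each bot as its own `asyncio` task, give each a random start offset, and add a random 1-2 second pause between its requests, following the numbers above. This is a minimal sketch: `fake_fetch` is a hypothetical stand-in for whatever actually sends the request through a proxy, and the small `time_scale` exists only so the demo finishes quickly.

```python
import asyncio
import random

async def fake_fetch(bot_id, query):
    # Placeholder for a real request sent through this bot's proxy.
    await asyncio.sleep(0)
    return f"bot{bot_id}:{query}"

async def run_bot(bot_id, queries, rng, log, time_scale=1.0):
    # Random start offset so the bots don't all fire in the same instant.
    await asyncio.sleep(rng.uniform(0, 2) * time_scale)
    for query in queries:
        log.append(await fake_fetch(bot_id, query))
        # Random 1-2 second gap, so no two bots share the same rhythm.
        await asyncio.sleep(rng.uniform(1, 2) * time_scale)

async def run_all(all_queries, n_bots, seed=0, time_scale=1.0):
    rng = random.Random(seed)
    # Deal queries round-robin: bot i gets queries i, i + n, i + 2n, ...
    batches = [all_queries[i::n_bots] for i in range(n_bots)]
    log = []
    await asyncio.gather(
        *(run_bot(i, batch, rng, log, time_scale) for i, batch in enumerate(batches))
    )
    return log

queries = [f"q{i}" for i in range(20)]
log = asyncio.run(run_all(queries, n_bots=5, seed=42, time_scale=0.001))
print(len(log))  # 20
```

With `time_scale=1.0` the delays are real seconds; the random offsets and gaps are what keep the bots from lining up into a visible pattern.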

The underlying problem here is patterns. Every form of anti-fraud and anti-bot protection in the world is trying to detect how bots differ from people, and generally, the answer is patterns.

When your bots are operating in a pattern, particularly if it’s a lot happening in a short span of time, it becomes easier to detect. The more volume, the more rigid the pattern, the easier it is.

Asynchronous requests, combined with rate limits, stretch out those patterns so they get lost in the noise. Change up identifying information so even that’s not the same, and the patterns can almost disappear.


Avoid Red Flag Search Operators

Google has a lot of search operators, but some of them require more caution than others. With a normal query, for example, you can page through results all day with no issue. Performing site: searches can get you hit with a captcha trap, as one user found when searching for LinkedIn resumes. Searching with the intitle: or inurl: operators is even worse, presumably because those operators are so often used to find pirated material.

If at all possible, avoid search operators when running bulk bot searches via proxies. They’re a red flag that greatly amplifies the problems raised elsewhere on this list.

If you can’t avoid search operators, for example because you need to search a specific site or a specific character string in URLs, you’ll need to take the previous steps and turn them up to 11.

Use longer timers, run even more asynchronously, pick better locations, and so forth. In fact, it might even be better to use more proxies, so you can use more user agents and different configurations to harvest your data, to further minimize the risk of getting caught.
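To turn things up only where it matters, a scraper can check each query for the riskier operators and stretch its delay accordingly. A minimal sketch; the operator list comes from the ones named above, while the 5-second base delay and 3x multiplier are arbitrary illustrative values. `delay_for_query` is a hypothetical helper, and the substring check is deliberately simple (it also catches variants like `allintitle:`).

```python
# Operators this article flags as riskier for bulk searching.
RISKY_OPERATORS = ("site:", "intitle:", "inurl:")

def uses_risky_operator(query):
    """True if the query contains any of the riskier search operators."""
    q = query.lower()
    return any(op in q for op in RISKY_OPERATORS)

def delay_for_query(query, base_delay=5.0, multiplier=3.0):
    """Seconds to wait before sending `query`; operator queries wait longer."""
    return base_delay * multiplier if uses_risky_operator(query) else base_delay

print(delay_for_query("best blog titles"))              # 5.0
print(delay_for_query("site:example.com blog titles"))  # 15.0
print(delay_for_query("INURL:resume engineer"))         # 15.0
```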


Rotate Through a Proxy List

This is another great tip, because it further minimizes patterns. If it’s hard to detect a pattern across five bots on five proxies, use ten. If it’s harder to detect with ten, use 20. Of course, if you run all 20 at once, the sheer volume of similar queries establishes a different pattern. So instead of running 20 at once, you run one set of 5, then another, then another, then another, then back to the first.
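That batch rotation (one set of 5, then the next, then back to the first) maps neatly onto Python’s `itertools`. A minimal sketch; the batch size of 5 mirrors the example in the text, the proxy addresses are placeholders, and `batches` / `rotate_batches` are hypothetical helpers. If the list doesn’t divide evenly, the last batch is simply shorter.

```python
from itertools import cycle, islice

def batches(proxies, size):
    """Split the proxy list into consecutive groups of `size`."""
    return [proxies[i:i + size] for i in range(0, len(proxies), size)]

def rotate_batches(proxies, size=5):
    """Yield proxy batches forever: batch 1, batch 2, ..., then back to batch 1."""
    return cycle(batches(proxies, size))

proxies = [f"203.0.113.{i}:8080" for i in range(1, 21)]  # 20 placeholder proxies
for batch in islice(rotate_batches(proxies), 5):
    # Four distinct batches, then the fifth pass reuses the first batch.
    print(batch[0], "...", batch[-1])
```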

A rotating proxy list, if it’s long enough, minimizes how often any single IP repeats, making your traffic even harder to detect. Of course, a long proxy list can be expensive, so you have to balance how much you’re willing to pay for access to a high-quality list against how much you’re willing to deal with the effects of getting caught.

Speaking of getting caught, different sites have different means of dealing with bots. Google, for example, will time you out for 14 minutes unless you fill out a captcha.

This might be an incentive to use a captcha breaker, and that’s your call. Some of them work, some are difficult to get working properly, and some are hit or miss. Google’s “I am not a robot” checkbox is also hard to get past.

Honestly, in many cases, it’s best to just wait and watch, keep an eye on your bots, and fix issues when they come up. You can always do the captcha manually and then re-initialize the bot.
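Keeping an eye on your bots can be partly automated: have each bot check its responses for signs of a block and pause itself so you can step in. A minimal sketch; treating HTTP 429 (Too Many Requests) and 403 (Forbidden) as block signals is a common heuristic rather than a guarantee, and the captcha marker strings are hypothetical placeholders you’d adjust to what your target actually returns. `looks_blocked` and `check_bot` are hypothetical helpers.

```python
BLOCK_STATUSES = {403, 429}
# Hypothetical markers; check what your target's block page actually contains.
CAPTCHA_MARKERS = ("captcha", "unusual traffic")

def looks_blocked(status, body):
    """Heuristically decide whether a response means this proxy was flagged."""
    if status in BLOCK_STATUSES:
        return True
    text = body.lower()
    return any(marker in text for marker in CAPTCHA_MARKERS)

def check_bot(bot_name, status, body):
    """Return 'pause' if the bot should stop for manual attention, else 'ok'."""
    if looks_blocked(status, body):
        # Stop this bot instead of hammering; solve the captcha by hand,
        # then re-initialize the bot.
        print(f"{bot_name}: possible block (HTTP {status}); pausing for manual check")
        return "pause"
    return "ok"

print(check_bot("bot-1", 200, "<html>results</html>"))  # ok
print(check_bot("bot-2", 429, ""))  # prints a warning line, then: pause
```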


Use a Supplier that Replaces Proxies

Some proxy suppliers don’t care if an IP gets blocked, temporarily or permanently. They have disclaimers about the usage of their proxies.

You see this a lot with public proxy lists in particular; they’re used and abused so much that some sites go out of their way to harvest proxy IPs and ban them before they can be used against them. This is why it can take ages to find a public proxy that works, and finding enough of them to harvest data at any scale is nearly impossible.

Private proxy lists are the better deal here, for two reasons.

One, they don’t have the past usage and prior history. Essentially, you’re not starting on strike 2 like you might be with a public proxy list.

Two, many private proxy list managers will offer you a list of X number of proxies and will keep them rotated to keep them fresh. If a proxy is banned, they will replace the proxy, so that there’s always that selection available. In other words, the lists don’t degrade.
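On your side, you can mirror that behavior with a small pool that retires a proxy as soon as it’s flagged and swaps in a replacement, so the working list keeps its size. A minimal sketch; `ProxyPool` is hypothetical, and the `replacements` list stands in for however your supplier actually delivers fresh IPs, which isn’t modeled here.

```python
from collections import deque

class ProxyPool:
    """Round-robin pool that swaps out banned proxies for fresh ones."""

    def __init__(self, active, replacements):
        self.active = deque(active)
        self.replacements = deque(replacements)
        self.banned = set()

    def next_proxy(self):
        """Hand out the proxy at the front, then move it to the back."""
        if not self.active:
            raise RuntimeError("no working proxies left")
        proxy = self.active[0]
        self.active.rotate(-1)
        return proxy

    def report_banned(self, proxy):
        """Retire a flagged proxy and, if one is available, add a replacement."""
        if proxy in self.active:
            self.active.remove(proxy)
            self.banned.add(proxy)
            if self.replacements:
                self.active.append(self.replacements.popleft())

pool = ProxyPool(["203.0.113.1:8080", "203.0.113.2:8080"], ["198.51.100.7:8080"])
first = pool.next_proxy()
pool.report_banned(first)
print(list(pool.active))  # ['203.0.113.2:8080', '198.51.100.7:8080']
```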

Honestly, it’s not all that difficult to harvest data from a site like Google as long as you set things up properly. It’s only when you don’t put thought into it, when you slam traffic into their face that screams bot, that you end up being blocked. Of course, you can always use official APIs to get your data, but sometimes what you want isn’t available through them.