Is your web scraper getting blocked and blacklisted by your target websites? That is because those websites are using anti-scraping techniques. Read on to discover those techniques and how to bypass them.

Web scraping has come a long way and has helped a good number of businesses scale up and gain useful insights from data. Provided the data you are interested in can be found online and is not behind a paywall, you can get it scraped using a web scraper.

However, the difficulty of scraping varies from website to website, depending on the anti-scraping techniques in place to discourage it. While some websites can be a pain in the neck for web scrapers, the truth is that you can still scrape them without getting blacklisted, provided you know the techniques being used to detect your bot's traffic.

In this article, you will learn how to prevent websites from detecting and blocking your web scrapers. I will discuss the anti-scraping techniques websites use and how to bypass them in order to successfully scrape the site of your choice. But before that, let's take a brief look at the robots.txt file.

Robots.txt File – an Overview

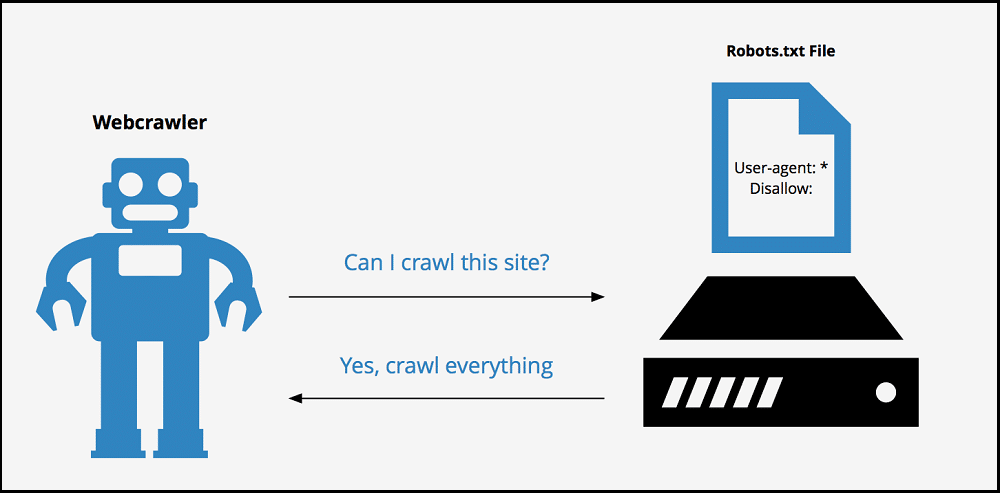

The robots.txt file is the mechanism behind the robots exclusion standard, also called the robots exclusion protocol. It is a file that websites use to communicate with web crawlers, scrapers, and other web automation bots. It specifies the areas of the website a bot may access and the areas it shouldn't.

It can also be used to communicate with specific bots. Websites that need to communicate with web robots create a robots.txt file at the root of their website (https://www.luminati.io/robots). The content of the robots.txt of Luminati, a popular proxy network, is below.

User-Agent: *
Disallow: /lum/
Disallow: /lp/*
Disallow: /www/*.html
Disallow: /use_cases/fintech
Host: luminati.io
Sitemap: https://luminati.io/sitemap.xml

From the above, you can see that there are URLs and paths the site does not want web crawlers and scrapers to access. It even specifies the link to its sitemap. To learn about the standard of communication using the robots.txt file, visit the robots.txt site. Sites that do not want to be scraped communicate that in their robots.txt files.
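If you want your scraper to check these rules before it requests a page, the sketch below shows one way to do it with Python's standard-library `urllib.robotparser`. The helper name `is_allowed` and the `my-scraper` user agent are made up for illustration. As far as I know, this parser matches paths as plain prefixes and does not interpret the `*` wildcards inside paths, so treat it as a rough check rather than a full implementation of the rules above.

```python
from urllib.robotparser import RobotFileParser

# Rules copied from the robots.txt shown above.
ROBOTS_TXT = """\
User-Agent: *
Disallow: /lum/
Disallow: /lp/*
Disallow: /www/*.html
Disallow: /use_cases/fintech
Host: luminati.io
Sitemap: https://luminati.io/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(url: str, user_agent: str = "my-scraper") -> bool:
    """Return True if the parsed rules permit user_agent to fetch url.

    "my-scraper" is a hypothetical user agent name used for illustration.
    """
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for candidate in (
        "https://luminati.io/lum/dashboard",
        "https://luminati.io/pricing",
    ):
        print(candidate, "allowed" if is_allowed(candidate) else "disallowed")
    print("Sitemaps:", parser.site_maps())
```

In a real scraper you would normally call `set_url()` and `read()` on the parser to download the live file instead of pasting its content into the script.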

While search engines such as Google, Bing, and Yandex, along with some web scraping businesses, respect the robots.txt files of sites, most web scrapers, such as email harvesters, security vulnerability checker bots, and many other bots, do not respect the instructions in the file. Even if you will not respect the robots.txt of a website you want to scrape, I advise you to be polite and gentle: avoid hammering websites with lots of requests within a short time frame.

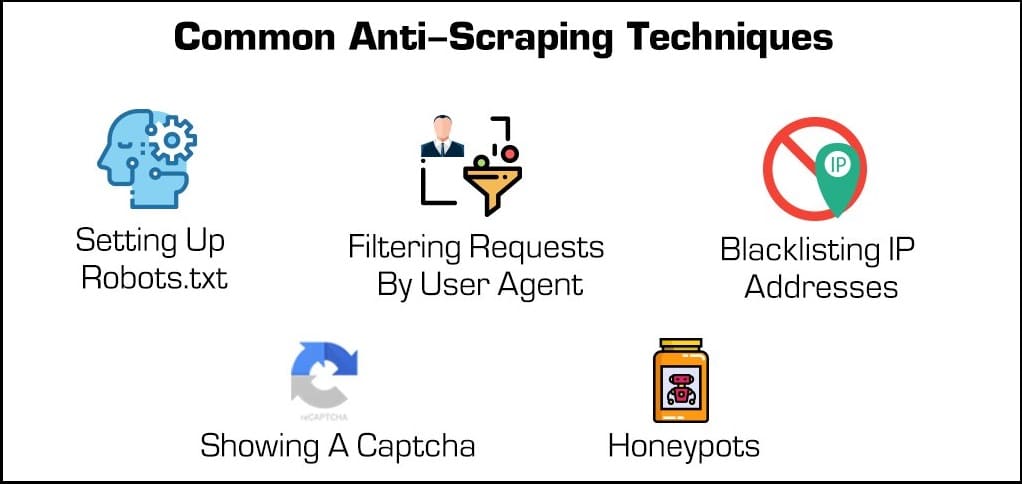

Anti-Scraping Techniques

The anti-scraping techniques used by websites are numerous, with new ones being actively developed and introduced. Web scrapers, on the other hand, stay on top of their game, looking for ways to bypass these techniques and scrape without getting blacklisted. The list below contains the popular anti-scraping techniques and solutions to them. Some sites use a combination of almost all of them.

IP Tracking and Blocking

The most popular anti-scraping technique used by websites is IP tracking. For each request a site receives on its server, it keeps a record of the IP address that sent it. When it notices an unusually high number of requests coming from a single IP address, it suspects that the address belongs to a bot.

Most complex websites enforce request limits, for example around 10 requests per minute (600 per hour) from a single IP address, although the exact threshold varies from site to site. If you send more than this, your traffic will be suspected to be bot traffic, and when this occurs, your IP address will be blocked from accessing the site.
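To make the idea concrete, here is a minimal sketch of how a server could count requests per IP address over a sliding window. It is my own illustration, not the code any particular site runs; the class name `IpRateTracker` and the default limit of 10 requests per 60 seconds are assumptions taken from the example figure above.

```python
import time
from collections import defaultdict, deque


class IpRateTracker:
    """Flags an IP once it sends more than max_requests within window_seconds.

    A hypothetical illustration of server-side IP tracking.
    """

    def __init__(self, max_requests=10, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the logic can be tested without waiting
        self._hits = defaultdict(deque)  # ip -> timestamps of recent requests

    def record(self, ip):
        """Record one request and return True while the IP is within the limit."""
        now = self.clock()
        hits = self._hits[ip]
        hits.append(now)
        # Forget timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        return len(hits) <= self.max_requests


if __name__ == "__main__":
    tracker = IpRateTracker()
    for i in range(1, 13):
        status = "ok" if tracker.record("203.0.113.7") else "suspected bot"
        print(f"request {i}: {status}")
```

Seen from the scraper's side, this is why spreading requests over time and over several IP addresses keeps each address under the threshold.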

An IP block is usually temporary, although in a few cases it can be permanent. But as you know, web scraping requires you to send far more requests than a human would, and as such, you will almost certainly exceed these limits. What then do you do to bypass this technique?

How to Bypass

The most effective way to bypass IP blocking is to use proxies. Proxies mask your IP and attach a different IP Address to your requests, thereby allowing you to surf the Internet anonymously. The number of IPs you have determines the scale at which you can scrape.

For web scraping, using a proxy pool is advisable because it makes IP rotation easy and avoids using the same IP over and over again. This should keep you clear of IP blocks. If you can handle proxy management and rotation yourself, you can use dedicated proxies. For beginners, proxy APIs work better, but they are more expensive.
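The rotation described above can be sketched with nothing but the standard library. The proxy addresses below are placeholders from a reserved documentation range, and the helper names `next_proxy`, `opener_for`, and `fetch` are my own; a real pool would come from your proxy provider.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints (reserved documentation addresses);
# replace them with the proxies from your own pool.
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]

# Endless round-robin iterator over the pool.
_rotation = itertools.cycle(PROXY_POOL)


def next_proxy() -> str:
    """Return the next proxy in round-robin order."""
    return next(_rotation)


def opener_for(proxy_url: str) -> urllib.request.OpenerDirector:
    """Build an opener that routes HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url: str, timeout: float = 10.0) -> bytes:
    """Fetch url through the next proxy in the pool and return the raw body."""
    proxy = next_proxy()
    with opener_for(proxy).open(url, timeout=timeout) as response:
        return response.read()
```

Round-robin is the simplest policy. You could just as well pick a proxy at random or retire proxies that start returning errors.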

AJAX

AJAX stands for Asynchronous JavaScript and XML. It is a set of techniques used by websites on the client side to create asynchronous web applications that are responsive and do not require a page refresh to load data from a server, thanks to JavaScript.

This technique poses a challenge to web scrapers because most of the popular web scraping tools, including Scrapy, do not render JavaScript and, as such, are not fit for scraping Ajaxified websites.

This is because Ajaxified websites load data after the HTML has been loaded. If you send requests using Scrapy and the like, you will get the HTML returned without the required data. To scrape a website with AJAX functionality, you need a way to execute and render JavaScript so that you only scrape after the required data has been added to the page.

How to Bypass

To scrape data from JavaScript-heavy websites, your scraper needs rendering capability, and a headless browser provides it. Headless browsers are browsers without a graphical user interface, commonly used for automated testing. Examples include headless Chrome and PhantomJS. To automate any of these browsers, you need a browser automation tool such as Selenium. With it, you can control browsers, get them to fill forms, click buttons, and execute JavaScript snippets.
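As a rough sketch of that approach, the function below loads a page in headless Chrome through Selenium and waits for an element to appear before returning the HTML. It assumes Selenium 4 and a local Chrome installation. The function name `fetch_rendered_html` and its parameters are mine, and the `--headless=new` flag applies to recent Chrome versions (older ones use `--headless`).

```python
def fetch_rendered_html(url, wait_css, timeout=10.0):
    """Load url in headless Chrome and return the HTML once wait_css is present.

    wait_css is a CSS selector for an element that only exists after the
    AJAX data has been added to the page.
    """
    # Imported here so this module still loads where Selenium is not installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = Options()
    options.add_argument("--headless=new")  # run Chrome without a visible window
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Block until the JavaScript-inserted element shows up, or time out.
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, wait_css))
        )
        return driver.page_source
    finally:
        driver.quit()  # always release the browser process
```

You would call it with a selector that matches the data you are after, for example `fetch_rendered_html("https://example.com/listing", "div.results")`, where both the URL and the selector are placeholders.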

Browser Fingerprinting

There are basically three ways a website tracks its users: through their IPs, cookies, and browser fingerprints. In case you do not know, a website can uniquely identify your web scraper even after it switches IP by collecting pieces of information about your browser or scraper. Browser fingerprinting uses browser settings and attributes to identify unique devices.

There are basically two types of fingerprinting: static and dynamic. Static fingerprinting gets its footprint data from request headers, while dynamic fingerprinting uses JavaScript to get browser information such as fonts, plugins, color depth, screen size, and much more.

When you use Scrapy or another tool that does not render JavaScript, you are immune to dynamic fingerprinting, because the fingerprinting script never runs. However, some sites refuse requests when the fingerprinting module does not execute. In that case, you have to use Selenium or another browser automation tool.

How to Bypass

For a site that is actively engaging in browser fingerprinting, you have to provide a fingerprint, or else you will be denied access. The best thing to do is to provide fake data. For your request headers, make sure your web scraper sends the User-Agent of one of the popular browsers.

It should also send the Accept and Accept-Language headers, and their values should correspond with those the browser named in your User-Agent would send. The order of the headers you send is also important. To counter dynamic browser fingerprinting, use Selenium to rotate among different browsers. However, avoid using PhantomJS as it is detectable.
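Here is a small sketch of keeping those headers consistent with each other. The two profiles are illustrative values that follow the general shape of what Chrome and Firefox send; they are not captured from a live browser and will go stale, so copy current values from your own browser's developer tools. The names `BROWSER_PROFILES` and `build_request` are my own.

```python
import random
import urllib.request

# Illustrative header sets. Each profile keeps User-Agent, Accept, and
# Accept-Language consistent with one browser family.
BROWSER_PROFILES = {
    "chrome": {
        "User-Agent": (
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
            "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
        ),
        "Accept": (
            "text/html,application/xhtml+xml,application/xml;q=0.9,"
            "image/avif,image/webp,image/apng,*/*;q=0.8"
        ),
        "Accept-Language": "en-US,en;q=0.9",
    },
    "firefox": {
        "User-Agent": (
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:121.0) "
            "Gecko/20100101 Firefox/121.0"
        ),
        "Accept": (
            "text/html,application/xhtml+xml,application/xml;q=0.9,"
            "image/avif,image/webp,*/*;q=0.8"
        ),
        "Accept-Language": "en-US,en;q=0.5",
    },
}


def build_request(url, profile=None):
    """Return a Request whose headers all come from one browser profile.

    If profile is None, a profile is picked at random.
    """
    name = profile or random.choice(sorted(BROWSER_PROFILES))
    headers = dict(BROWSER_PROFILES[name])
    return urllib.request.Request(url, headers=headers)
```

This sketch does not try to control the order in which headers go out on the wire. If a site checks header order, you would need an HTTP client that lets you control it.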

Captcha

The word CAPTCHA is an acronym for Completely Automated Public Turing test to tell Computers and Humans Apart. It is a test websites present when they suspect that traffic originates from a bot. Usually, it requires you to recognize the content of an image or group of images. Captchas appear when a website gets too many requests, which is typical of web crawlers, scrapers, and other web bots.

When your web scraper's requests trigger a Captcha, you have to solve it to continue scraping. Otherwise, you have to fall back on other techniques that interrupt the process you have already started.

Captcha solving is a difficult task, as you need skills in computer vision, artificial intelligence, and machine learning. In fact, some Captchas have so far proven unsolvable by automated means. When it comes to Captchas, it is better to avoid them than to solve them. How do you deal with Captchas?

How to Bypass

One way to prevent Captchas from appearing is to use virgin IPs, that is, fresh IPs that have not already been flagged. Residential IP pools are some of the best because they are hard to identify as proxies and, as such, raise little suspicion. Increasing the time between requests and, by extension, reducing the number of requests you send per minute from a single IP will also reduce the occurrence of Captchas.

Even after all of these, Captchas can still occur. When one is triggered, you need a Captcha solver to solve it in order to get past it. 2Captcha is one of the popular choices in the market. It works quite well, but it is a paid tool.
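The advice above about spacing out requests can be sketched as a small throttle that enforces a randomized gap between consecutive requests. The class name `Throttle` and the 6 to 12 second range are assumptions for illustration; tune the delays to the site you are scraping.

```python
import random
import time


class Throttle:
    """Keeps consecutive requests a randomized delay apart.

    A hypothetical helper; the default 6-12 second range is illustrative.
    """

    def __init__(self, min_delay=6.0, max_delay=12.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay
        self.max_delay = max_delay
        self.clock = clock  # injectable for testing
        self.sleep = sleep  # injectable for testing
        self._last = None   # time of the previous request, if any

    def wait(self):
        """Sleep if the previous request was too recent; return seconds slept."""
        delay = random.uniform(self.min_delay, self.max_delay)
        now = self.clock()
        pause = 0.0
        if self._last is not None:
            pause = max(0.0, self._last + delay - now)
            if pause:
                self.sleep(pause)
        self._last = now + pause
        return pause
```

Call `throttle.wait()` right before each request. Using a random delay instead of a fixed one avoids the perfectly regular timing that makes bot traffic easy to spot.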

Log In

There are basically two types of content online: content you can scrape without logging in and content that requires a login. Scraping sites that require a login is not much different, but it poses new challenges that you have to be aware of. First, how do you deal with login and sessions? You also cannot rotate IP for every request when you are logged into an account, as that will raise suspicion and trigger Captchas. There is also a limit to the number of requests you can send from a single account before the account is flagged as spam.

Also, depending on how important your accounts on the target sites are, the thought of losing them in the process can be a cause for concern. The truth is, scraping data from websites that require a login calls for additional care compared to websites that do not.

How to Bypass

If you do not know how to log in, check out this article on a GitHub-hosted blog. Generally, you need to log in and then save the cookies returned. With the cookies saved, you can scrape, as they will keep you logged in and the session will be maintained. For sites like this, it is important to know that proxies that rotate IP only after some time are the best. Also, you might want to have more than one account in order to rotate accounts, but make sure you keep each account's cookies and use them accordingly.
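A minimal sketch of the log-in-then-save-cookies flow, using only the standard library, might look like this. The function names, the cookie file path, and the form field names are hypothetical. Real login forms differ and often include extra hidden fields such as CSRF tokens, which this sketch does not handle.

```python
import os
import urllib.parse
import urllib.request
from http.cookiejar import LWPCookieJar


def build_session(cookie_file):
    """Return (opener, jar) backed by cookie_file.

    Cookies saved by an earlier run are loaded, so the session is reused.
    """
    jar = LWPCookieJar(cookie_file)
    if os.path.exists(cookie_file):
        jar.load(ignore_discard=True)  # also restore session-only cookies
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


def log_in(opener, jar, login_url, form_fields):
    """POST the login form, then persist whatever cookies the site returned.

    form_fields is a dict such as {"username": "...", "password": "..."};
    these field names are assumptions and depend on the target site.
    """
    data = urllib.parse.urlencode(form_fields).encode("utf-8")
    with opener.open(login_url, data=data, timeout=10) as response:
        response.read()
    jar.save(ignore_discard=True)
```

If you rotate between several accounts, give each account its own cookie file so that their sessions never mix.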

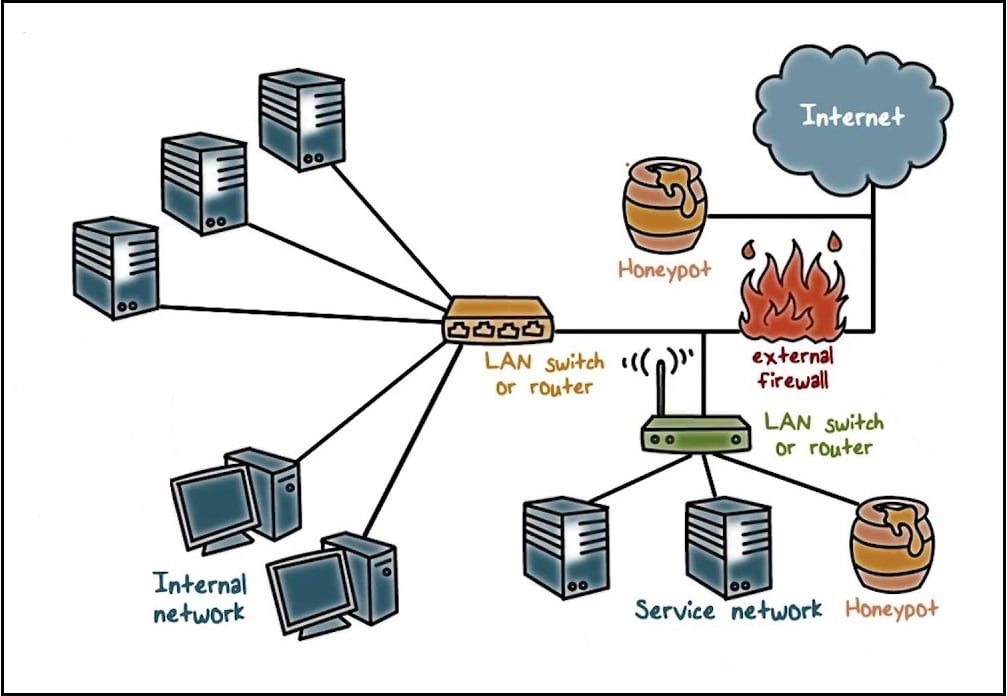

Honeypot Traps

Websites have developed a technique to trick web scrapers and crawlers into visiting URLs that are not visible to regular web users but are accessible to automation bots. This technique is known as the honeypot trap. It involves using CSS properties to hide links on a page from human visitors, typically by setting visibility:hidden or display:none in the styling of the links. Some sites make the color of the link the same as that of the page background. Any visit to such a link automatically labels the traffic as bot-originated, and access is blocked.

How to Bypass

When writing the code for your web scraper, make sure it checks that each link is visible to regular users by inspecting the values of visibility and display. Also, check the color of the link and make sure it is not the same as that of the page background.
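As a starting point, the sketch below collects only the links whose inline style does not hide them. It is deliberately limited: it looks at the `style` attribute of the link itself, so rules set in external stylesheets, hidden parent elements, and the background-color trick are not covered. Those cases need computed styles from a rendered browser. The names `is_hidden` and `visible_links` are my own.

```python
from html.parser import HTMLParser

# Inline style declarations that hide an element from human visitors.
HIDDEN_MARKERS = ("display:none", "visibility:hidden")


def is_hidden(style):
    """Return True if an inline style string hides the element."""
    compact = "".join(style.lower().split())  # drop whitespace, ignore case
    return any(marker in compact for marker in HIDDEN_MARKERS)


class VisibleLinkCollector(HTMLParser):
    """Collects href values of <a> tags that are not hidden by inline style."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        style = attributes.get("style") or ""
        if href and not is_hidden(style):
            self.links.append(href)


def visible_links(html):
    """Return the links in html that a human visitor could plausibly see."""
    collector = VisibleLinkCollector()
    collector.feed(html)
    return collector.links
```

Feeding your crawler's queue from `visible_links(page_html)` instead of from every `<a>` tag keeps it away from the most obvious traps.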

FAQs about Request Blocked

Is Web Scraping Legal?

The fact that websites are putting anti-scraping techniques in place means they are not in support of it. Web scraping, in general, is not illegal. However, the technicalities involved and what you intend to achieve could make it illegal. Read this LinkedIn blog to understand how it can be both legal and illegal.

Can a Site Be Successful at Preventing Scraping?

The truth is, no site on the Internet can totally prevent scraping. The only thing is that it can make it difficult and unattractive to scrape from its pages. However, with the right tool and technical skill and experience, no site can prevent scraping.

Do Captcha Solvers Work?

Captcha solvers work for a good number of Captchas. However, some Captchas are difficult to break without human intervention, and that is why Captcha-solving tools pay Internet users to help solve them.

How Do Sites Detect Web Scrapers?

If you are wondering how sites can tell bot-originated traffic from human-originated traffic, you need to know that the two behave differently. Websites use a combination of IP tracking, browser fingerprinting, cookies, and honeypots to detect bot traffic. If you can guard against all of these, your bot will be much harder to detect.

Should I Use an Already-Made Bot or Custom Develop One for My Web Scraping Task?

Unless you are able to take into consideration all of the things highlighted on this page, using an existing web scraper is the best option. However, you have to think about the cost. The advantage of developing your bot yourself is that you can add unique features, and it saves you money.

Conclusion

Websites are becoming difficult to scrape nowadays. Unlike in the past, when a simple web scraper could get the job done, you now have to deal with IP tracking, cookie tracking, browser fingerprinting, honeypots, and Captchas. If you know how to bypass all of these challenges, then you will successfully scrape a website without getting blacklisted or having your requests blocked.