The last thing you need when collecting large volumes of data from Google is an IP ban. The second-to-last thing you need is a captcha checking in on your human-ness. Each of these protection measures is employed by the search giant to weed out bots, which is exactly what you’re running if you’ve come to this article.

Before we get into specific ways to prevent these terrible things, I’d like to address the ethical aspect of scraping Google. As a rule of thumb, yes, scraping Google is ethical. Harvesting data in and of itself is a common practice today, so much so that Google does it all the time, pulling the same sort of data you’re looking for from websites across the internet.

Actually, it’s so out in the open that Google has a whole page on its Googlebot, the data scraping tool that crawls the web on Google’s behalf.

The discussion becomes possibly less ethical depending on what you plan to do with the scraped data. I’m not here to judge, so I won’t, but you should know that there are a host of very legitimate and universally practiced uses for Google scraping, like competitive analysis, keyword crunching, and personal research. Flip the coin and you’ll find an equally large number of illegal uses, like DDoS attacks and unsolicited bulk email marketing.

The ethics of scraping Google’s treasure trove of data are in your hands.

You might also be wondering why Google has IP bans and captchas if so many scraping methods are technically legitimate. Put simply, Google wants humans using its site. Sort of like Terminator, the robots are inherent harbingers of possible death and destruction, and Google wants to safeguard websites on the internet from all those nefarious activities scraping allows. Individual humans can’t bring such evil things to the web, so Google is fine with them.

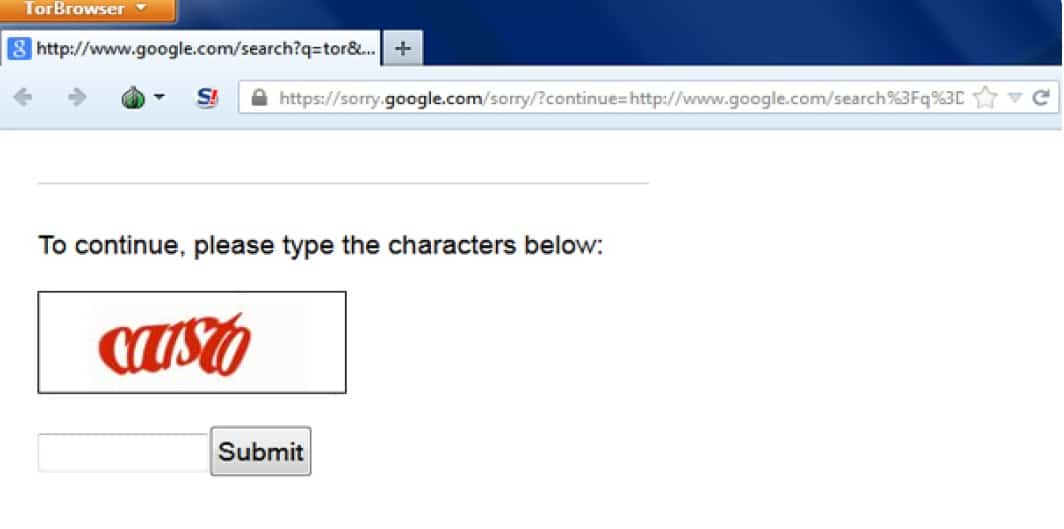

You can see this played out by the two levels of security your IPs will have to go through. Google doesn’t start off by banning your proxy IPs (unless you’ve done something very bad) — no, it starts by presenting a captcha. This is specifically designed to baffle bots. You’ve seen the captcha box before, and if you’re scraping you probably see it often. This is the first tier — the second is a ban, which can be permanent or impermanent, depending on your infraction.

The question remains,

How do you get around those bans and captchas?

We’ve got six methods, listed below.

1. Limit Individual Proxy IP Use

I’ll start with one of the more basic principles of web scraping etiquette. In most scenarios, you or your company will be using large batches of proxies to do the data collecting. This is almost always the case because the whole point is to scrape data in large amounts quickly, and you can’t do that very effectively if you only have 5 proxies.

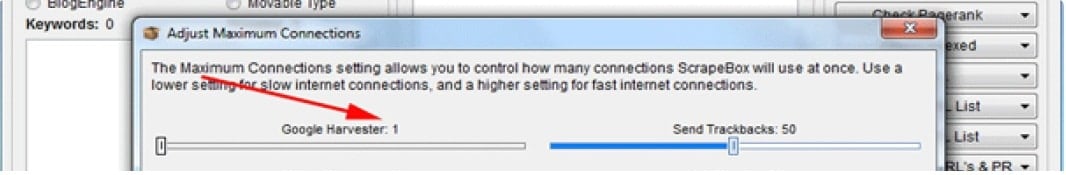

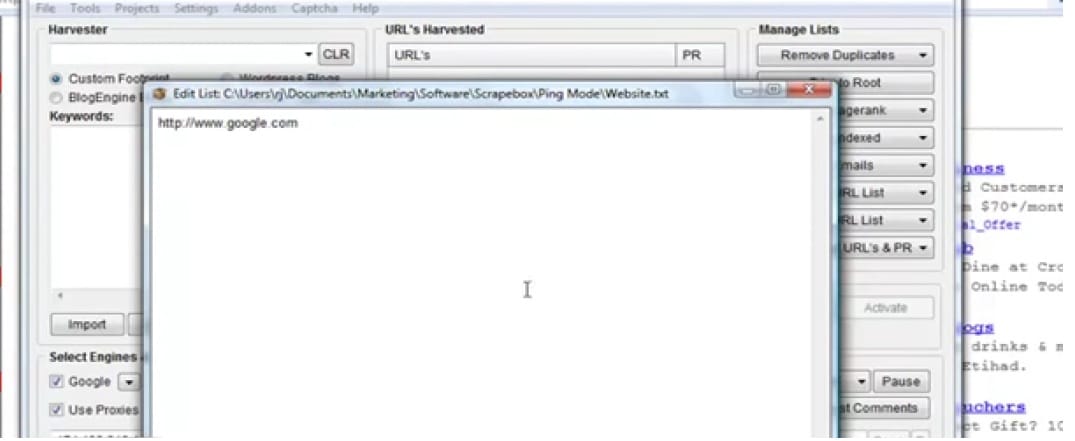

As such, you’ll have a vast number of IPs to use when scraping data. In the software program you use to scrape (there are many; ScrapeBox is a good one), there will likely be a setting for how often a proxy can query or search. You’ll most likely find this in the API (application programming interface).

The query frequency is set in seconds, or minutes if you want to be very cautious. I recommend, at the very least, letting an individual proxy IP query no more than once every 2-5 seconds, and potentially less often if you’re using search operators or keywords that raise red flags (which I’ll get into below).

Let’s say you set your search frequency to 5 seconds. This will make sure that a single IP address, whether it’s a proxy you own or rent, won’t make a query more often than every 5 seconds. This ties directly into that whole human concept. A real human wouldn’t likely query Google every second for 10 straight minutes. That would mean you conducted 600 individual Google searches, just for the fun of it.

No, that’s what robots do, and precisely what scraping looks like. Set your individual proxy IP query limit to every 2-5 seconds to be safe, or more seconds to be safer, and you’ll avoid bans and captchas for that specific IP.
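If you’re writing your own script rather than using an off-the-shelf tool, the per-proxy limit might look something like the sketch below. It’s only an illustration: the `ProxyThrottle` class, its method names, and the proxy addresses are all made up for this example, and the 5-second default is just the figure used above.

```python
import time


class ProxyThrottle:
    """Tracks when each proxy last queried and enforces a minimum gap."""

    def __init__(self, min_interval=5.0, clock=time.monotonic):
        self.min_interval = min_interval  # seconds between queries per proxy
        self.clock = clock                # injectable so the logic can be tested
        self.last_used = {}               # proxy address -> time of last query

    def wait_time(self, proxy):
        """Seconds this proxy must still wait before its next query."""
        last = self.last_used.get(proxy)
        if last is None:
            return 0.0  # never used, so it can query right away
        return max(0.0, self.min_interval - (self.clock() - last))

    def mark_used(self, proxy):
        """Record that the proxy has just sent a query."""
        self.last_used[proxy] = self.clock()

    def acquire(self, proxy):
        """Sleep until the proxy may query again, then record the use."""
        delay = self.wait_time(proxy)
        if delay > 0:
            time.sleep(delay)
        self.mark_used(proxy)
```

In a scraping loop you would call `throttle.acquire(proxy)` right before sending each query through that proxy, so no single IP ever fires faster than the interval you chose.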

2. Set a Proxy Rate Limit

This is pretty much the same concept as the previous example, with a slight twist. Whereas previously I advised limiting a single proxy IP from querying too often, you also want to limit how often all of your proxies start querying for a topic.

The range of time can really vary for this, and you can stagger your proxies to have different rate limits to even further reduce Google’s suspicions. For example, you want to beware of making 5,000 queries about “Social Media Likes” all at once. Even though these requests were sent by different, seemingly unconnected IP addresses, the fact that they have come together triggers Google’s ban and captcha procedures.

At the very least set your proxy rate limit to 1-2 seconds. To be foolproof about this, bump that up to 5 seconds for a third of your proxies, 2 seconds for a third, and 8 seconds for the final third. Doing this in combination with individual IP query limits will significantly reduce the risk of Google banning your proxies.
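For a homemade scraper, the thirds split described above could be sketched roughly like this. The function name `stagger_rate_limits` and the proxy labels are hypothetical; the 5, 2, and 8 second delays are the ones suggested in this section.

```python
def stagger_rate_limits(proxies, delays=(5.0, 2.0, 8.0)):
    """Deal proxies round-robin into len(delays) groups, one delay per group.

    With three delays, each one ends up on roughly a third of the pool.
    """
    limits = {}
    for index, proxy in enumerate(proxies):
        limits[proxy] = delays[index % len(delays)]
    return limits


if __name__ == "__main__":
    pool = ["proxy-%d" % i for i in range(6)]  # placeholder proxy names
    for proxy, delay in stagger_rate_limits(pool).items():
        print(proxy, "waits", delay, "seconds between queries")
```

Dealing the delays out round-robin keeps the groups even no matter how many proxies you have, and it means neighboring proxies in your list never share the same rhythm.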

3. Set Your IP’s Location in Google

Google has a bad habit of incorrectly deciding where your IP is located. It’s a bit of a joke because IPs are often located in specific countries, like the U.S. or U.K., in order to access content and cloak the user in more of a mainstream appearance. When Google incorrectly determines your IP’s location, it may feel like the whole purpose of your IP has deflated. Do not fear, there is a way!

The best way to remedy a Google geo-location redirection is to simply visit http://google.com/ncr, instead of your typical http://google.com. The “ncr” (short for “no country redirect”) automatically sends you to the U.S. Google (which is the one most people are trying to access), regardless of where your IP is located.

When it comes to avoiding bans and captchas, the purpose of this step is to center your requests from a single country. As I previously mentioned, your proxy software is going to send out many requests to Google in order to search for information. If those similar searches come from fifteen different countries, all of which Google has incorrectly dropped you at, it will send up red flags.

Keep in mind that this really pertains to why you’re scraping data. If you want to scrape data on Japanese green tea harvesting blogs written by Japanese people in Japan, you’ll actually want Google to think you’re in Japan, like a normal Japanese human. Try to get a proxy provider with multiple world-wide locations for this, and if you’re unsure of an IP’s location, ask your provider or check for yourself.

If you want to search the most common market (a.k.a. the U.S.) use the “ncr” trick to make sure your crawlers start searching that version of Google.
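If you keep your own list of proxies, one way to keep a scraping job centered on a single country might look like the sketch below. Everything here is an assumption for illustration: the `proxy_countries` mapping stands in for whatever location data your provider gives you, the function names are invented, and the addresses are placeholders.

```python
def proxies_for_country(proxy_countries, country):
    """Return the proxies whose reported location matches the target country."""
    wanted = country.upper()
    return sorted(p for p, c in proxy_countries.items() if c.upper() == wanted)


def start_url(country):
    """Pick the Google entry point for a job.

    U.S. jobs start at the /ncr URL mentioned above; any other country
    starts at plain google.com so Google's own redirect can apply.
    """
    if country.upper() == "US":
        return "http://google.com/ncr"
    return "http://google.com"


if __name__ == "__main__":
    pool = {  # hypothetical provider data: proxy address -> country code
        "198.51.100.7:8080": "US",
        "203.0.113.9:8080": "JP",
        "198.51.100.8:8080": "US",
    }
    print(proxies_for_country(pool, "US"), start_url("US"))
```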

4. Set Your Referrer URL

This goes hand in hand with the last step because it’s about making sure you’re starting off your query on the right foot. In order to scrape Google, you’ll need to access a specific part of Google. The most common searches with the biggest nets are often done on https://www.google.com/search, better known as the general search page of Google. This is where most of us type in whatever we want to know about. For those of us using Chrome, we simply type a phrase into the URL bar, and Google Search is employed to give us listings.

This is all how a human searches. Remember that the best way to avoid bans and captchas with Google is to act like a human. Most humans go to google.com to start their search, while Chrome users are automatically using google.com to search.

The issue with bot searches is that, if left alone, the bots will use your search operator or keyword to collect data without visiting google.com at all. They will simply reap from the wheat fields of Google Search as if nobody ever needed google.com for anything. Put in a different way, a robot bypasses actually visiting google.com because it isn’t necessary. A human wouldn’t do that.

The solution is to set your referrer to google.com specifically. Most software programs built for scraping have a specific part of the API that makes this possible. If yours doesn’t, consider using one that does. If you’re writing your own script, make sure to include this as a function.
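If you are writing your own script, setting the referrer comes down to adding one HTTP header (spelled `Referer` in the HTTP standard) to each search request. Here is a minimal sketch using only Python’s standard library. The function name `build_search_request` is made up for this example, and it only builds the request; it doesn’t send anything.

```python
from urllib.parse import urlencode
from urllib.request import Request

GOOGLE_HOME = "https://www.google.com/"
GOOGLE_SEARCH = "https://www.google.com/search"


def build_search_request(query):
    """Build (but do not send) a search request whose Referer is google.com."""
    # "q" is the query-string parameter that carries the search phrase.
    url = GOOGLE_SEARCH + "?" + urlencode({"q": query})
    return Request(url, headers={"Referer": GOOGLE_HOME})


if __name__ == "__main__":
    request = build_search_request("grasshopper evolution")
    print(request.full_url)
    print(request.get_header("Referer"))
```

The request now looks as if it started from the Google homepage, which is what a human visitor’s browser would normally report.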

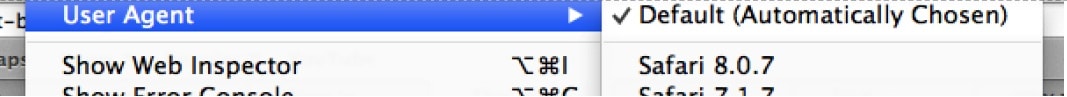

5. Create Unique User Agents for your Proxies

A user agent is the string your browser sends with each request to identify itself: the browser name and version, the operating system, and similar details. Not to be confused with actual identity, like your passwords or credit card information, user agents are common and there’s typically no real need to hide them.

However, when scraping Google for data, it’s paramount to diversify your user agent information. This principle comes back to the same old reason you need to change most of these settings — Google wants to believe a human is searching.

Even if your IP addresses are different, your countries are lined up, you have unique keywords and operators, and the query times are set, if Google receives 10,000 requests in ten seconds, all of which have a 1024 x 768 screen resolution, use the current version of Firefox, and run on Windows 7, it starts to get very suspicious.

Changing user agent information in your browser is simple, especially if you’re using Google Chrome or Firefox. You can do this by installing extensions that allow you to swap bits of user agent information for individual proxies, which will fool Google.

This can get complex and time-consuming if you’re running hundreds (or thousands) of proxies, all of which need to have slight tweaks. Sometimes your proxy provider will include tools to do this in their API — Myprivateproxy does, just ask. Make sure to contact the customer support department of your proxy provider if you’re worried about this step.
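If you’d rather handle this in your own script, a rough sketch of spreading user agents across a proxy pool could look like the following. The function name `assign_user_agents` is hypothetical, and the three user agent strings are only samples in the usual browser format; in practice you’d want a much longer, up-to-date list.

```python
import random

# Sample strings for illustration only; swap in your own current list.
SAMPLE_USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:115.0) Gecko/20100101 Firefox/115.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/16.5 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36",
]


def assign_user_agents(proxies, user_agents=SAMPLE_USER_AGENTS, seed=None):
    """Give each proxy one fixed user agent, spread evenly over the list."""
    rng = random.Random(seed)  # a seed makes the assignment repeatable
    shuffled = list(user_agents)
    rng.shuffle(shuffled)
    return {proxy: shuffled[i % len(shuffled)] for i, proxy in enumerate(proxies)}


def headers_for(proxy, assignments):
    """Build the headers to send whenever this proxy makes a request."""
    return {"User-Agent": assignments[proxy]}
```

Each proxy keeps the same user agent from one request to the next, which is closer to how a real person’s browser behaves than picking a fresh one for every query.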

6. Avoid Google Search Operators That Raise Red Flags

This is a major one, and an offender that most people use when scraping data on Google. Search operators are terms used to conduct hyper-specific queries on Google. When utilized effectively, they can result in a tremendous amount of highly relevant data for you to sort through.

The most common search operators you’ll see are:

- inurl

- intitle

- intext

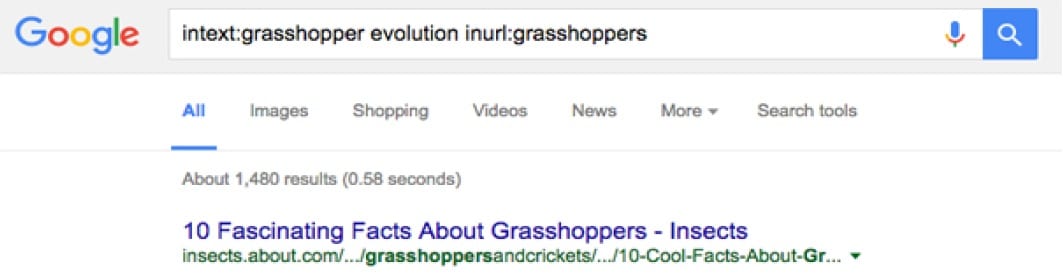

Maybe you’ve used or seen each of those terms like this: “allinurl” and so forth. It’s basically directions for Google to sort types of content, which produces a more specific list of results for you and your bots. Search operators have a lot of rules and are used in a myriad of ways, but when it comes to getting banned, they are very important.

Due to their popularity in bot searches, Google simply does not like them. Normal humans, the creatures you’re trying to emulate, do not go to google.com and type in “inurl: grasshoppers” to find websites about grasshoppers. They just type “grasshoppers.”

This is compounded (literally) when you and your bots run queries with multiple search operators. If we continue the above example, running this search — “intext: grasshopper evolution inurl:grasshoppers” — will get even more specific information, like websites with grasshoppers in the URL and text that refers to grasshopper evolution.

To Google, it becomes painfully obvious that you are not a human trying to write a biology paper on grasshoppers. You are, perhaps, a bot search run by a human trying to start their next niche website.

The number and types of search operators are massive, so, first of all, try to stay away from the common ones. Instead, string together multiple keywords for a more unique search, and refer to this list when looking for new ways to query. Also, try to steer clear of really common keywords in search operators because those have even more red flags around them.
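Before you hand a batch of queries to your bots, it can help to screen them for the operators named above. This is a small sketch, not a complete checker: the function names are invented, and the pattern only covers inurl, intitle, and intext plus their “all” variants.

```python
import re

# Operators named in this article, plus their "all" variants (e.g. allinurl:).
OPERATOR_PATTERN = re.compile(r"\b(?:all)?(inurl|intitle|intext):", re.IGNORECASE)


def count_operators(query):
    """Count how many of the common search operators a query contains."""
    return len(OPERATOR_PATTERN.findall(query))


def is_risky(query, max_operators=0):
    """Flag a query that uses more common operators than you want to allow."""
    return count_operators(query) > max_operators


if __name__ == "__main__":
    queries = [
        "grasshoppers",
        "inurl: grasshoppers",
        "intext: grasshopper evolution inurl:grasshoppers",
    ]
    for query in queries:
        print(repr(query), count_operators(query), is_risky(query))
```

Queries that get flagged can be rewritten as plain keyword strings, or at least scheduled with longer delays between them.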

Bans and Captchas Begone

Captchas will slow you down, which can be a detriment to the clients you have or the success of the project you’re working on. IP bans are a whole other headache, and will require you to reach out to your proxy provider. The six tips above will make it so you see fewer bans and captchas, which will increase your effectiveness and reliability in leaps and bounds.

With that said, the most important message I can impart when it comes to Google bans is to do research, and limit the robot-ness of your searches. Sometimes it’s better to walk slow and look like a human than push the envelope and scrape like a robot.

What is more sustainable in the long run?
