Everybody knows Google Chrome is the market leader when it comes to web browsing.

At least, that’s what the statistics say: an overwhelming 61.20 percent of Internet users are browsing with Chrome as of 2018.

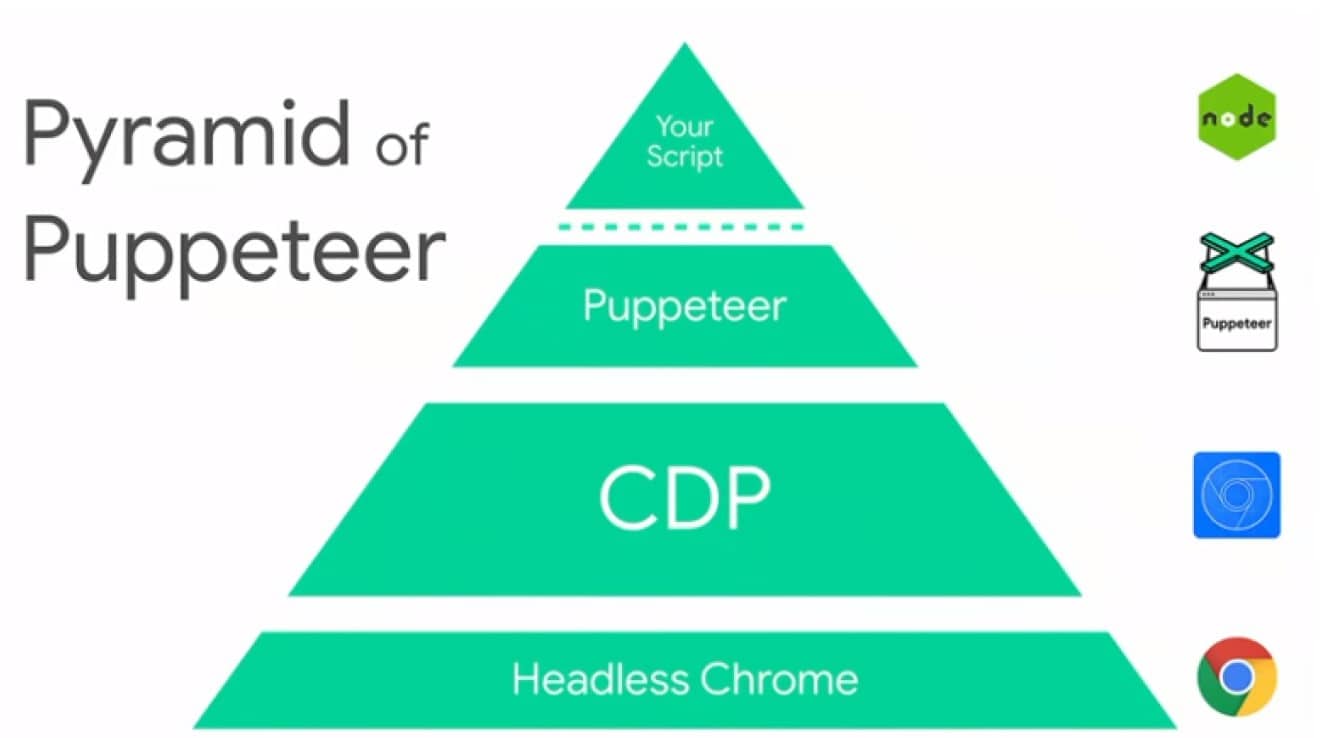

If Chrome is the leading web browser, then it makes sense that Chrome Headless will be the leading browser for automated application testing, web scraping, and more. Google’s release of Puppeteer, the Node.js API that makes automating web actions simple for Chrome users, sets the stage for easy, robust web scraping.

The only problem is that Chrome has no built-in privacy or identity protection. You need all the great features Chrome offers and the ability to automate nearly anything you want, and you need to do it anonymously through proxies.

This can prove to be a challenge.

It turns out there are ways to use Chrome Headless with proxies to safely scrape website data without exposing your identity. To see how, we’ll start with what makes Chrome what it is, and what makes a headless browser what it is.

What is Chrome Headless?

Google describes Chrome Headless as, “…a way to run the Chrome browser in a headless environment. Essentially, running Chrome without chrome!”

Perfect. Clear as mud.

A better way to think of Chrome Headless is running Chrome without the graphical interface.

That’s actually the definition of a headless browser – instead of clicking on things, you execute code through a command-line interface or network communication.
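To make that concrete, here is a minimal sketch of driving Chrome with code instead of clicks. It assumes Puppeteer is installed (npm install puppeteer), and the function names headlessLaunchOptions and getPageTitle are made up for this illustration.

```javascript
// Launch options for a headless run, kept as a plain function so they can be
// inspected without starting a browser.
function headlessLaunchOptions(extraArgs = []) {
  return { headless: true, args: [...extraArgs] };
}

// Opens a page with no visible window and returns its <title>.
// Assumption: Puppeteer is installed; it is loaded lazily inside the function.
async function getPageTitle(url) {
  const puppeteer = require('puppeteer');
  const browser = await puppeteer.launch(headlessLaunchOptions());
  try {
    const page = await browser.newPage();
    await page.goto(url, { waitUntil: 'domcontentloaded' });
    return await page.title();
  } finally {
    // Always close the browser, even if navigation fails.
    await browser.close();
  }
}
```

Calling getPageTitle('https://www.example.com') returns a promise for the page title, and no browser window ever appears.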

There are plenty of reasons why you may want to do that. Automating Google Chrome is otherwise very difficult to do, which is why web application developers have historically used other browsers like the now-defunct PhantomJS for the purpose. But if so much of the Internet uses Chrome, it just makes sense to have Headless Chrome in your toolkit.

If you need to scrape data from a variety of websites and need a browser that can handle all the HTML, CSS, and JavaScript without generating error after error, Headless Chrome is the solution for you. Once you learn how to use proxies with it, you’ll be able to scrape just about any website on the Internet with ease and style.

How Chrome Headless Works

The basic environment offered by Chrome Headless should be familiar to anyone who has spent any time using another headless browser. You have a command-line interface perfectly suited to quickly scanning and scraping website data.

What you have in addition to this is Google’s fast, modern JavaScript engine and DevTools API. You have Google engineering ensuring you get support for every website on the Internet.

But controlling Chrome Headless requires a driver library. There are lots of options here, and Puppeteer is one of the most popular, in part because it, too, is a Google product. Although there are libraries for a variety of languages, Node.js APIs are generally recommended because they use JavaScript, the same language that runs on the webpages you plan on scraping.

If you want to use a simple, no-nonsense API that is designed for web scraping and not much else, you can use NickJS. If you want all-purpose automation through a complete API that is somewhat similar to Google’s DevTools, go with Puppeteer. You can even use a C++ API if you want.

But in order to fully leverage Chrome Headless for scraping, you need to be able to use it with proxies. As mentioned in the introduction to this article, that can be tricky.

How to Use Proxies with Chrome Headless

If you use a custom proxy that requires authentication, you may have found that Puppeteer lacks full support for it. You need to call the page.authenticate() function to supply your login credentials in place of the Chrome login dialog box.

Example code would look like this:

    const puppeteer = require('puppeteer');

    (async () => {
      const proxyUrl = 'http://proxy.example.com:8000';
      const username = 'bob';
      const password = 'password123';

      const browser = await puppeteer.launch({
        // Backticks are required so that ${proxyUrl} is interpolated.
        args: [`--proxy-server=${proxyUrl}`],
        headless: false,
      });

      const page = await browser.newPage();
      // Answer the proxy's authentication challenge with these credentials.
      await page.authenticate({ username, password });
      await page.goto('https://www.example.com');
      await browser.close();
    })();
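Proxy providers often hand you a single URL with the credentials embedded, while Chrome’s --proxy-server flag takes only the proxy address. The sketch below shows one way to split such a URL into the two pieces the example above needs. The helper names splitProxyUrl and fetchHtmlViaProxy are hypothetical, and the Puppeteer part assumes the package is installed.

```javascript
// Splits "http://user:pass@host:port" into what Chrome and Puppeteer each need.
function splitProxyUrl(proxyUrl) {
  const parsed = new URL(proxyUrl);
  return {
    server: `${parsed.protocol}//${parsed.host}`, // goes into --proxy-server
    username: decodeURIComponent(parsed.username), // goes into page.authenticate()
    password: decodeURIComponent(parsed.password),
  };
}

// Opens targetUrl through the proxy and returns the page HTML.
// Assumption: Puppeteer is installed; it is loaded lazily so that
// splitProxyUrl can be used on its own.
async function fetchHtmlViaProxy(proxyUrl, targetUrl) {
  const puppeteer = require('puppeteer');
  const { server, username, password } = splitProxyUrl(proxyUrl);
  const browser = await puppeteer.launch({ args: [`--proxy-server=${server}`] });
  try {
    const page = await browser.newPage();
    await page.authenticate({ username, password });
    await page.goto(targetUrl);
    return await page.content();
  } finally {
    await browser.close();
  }
}
```

Keeping the parsing separate from the browser code makes it easy to check the credentials handling without launching Chrome.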

This is a simple way to use a proxy on Headless Chrome for web scraping. However, it can’t do everything you may need your authenticated proxy browser to do. For instance, there is a chance it will hang on a page that itself requires authentication, since it’s not clear from the code how the headless browser will handle multiple authentication requests (one from the proxy and one from the website).

In that case, you can use Apify’s proxy-chain package. Essentially, this package ensures that you can anonymize an authenticated proxy through Puppeteer by pushing it through a local proxy server first. It supports HTTP proxy forwarding and tunneling through HTTP CONNECT – so you can also use it when accessing HTTPS and FTP.

Here is what using Apify’s proxy-chain package with Puppeteer looks like in Node.js:

    const puppeteer = require('puppeteer');
    const proxyChain = require('proxy-chain');

    (async () => {
      const oldProxyUrl = 'http://bob:password123@proxy.example.com:8000';
      // Start a local proxy that forwards to the authenticated upstream proxy.
      const newProxyUrl = await proxyChain.anonymizeProxy(oldProxyUrl);

      // Prints the address of the local proxy
      console.log(newProxyUrl);

      const browser = await puppeteer.launch({
        args: [`--proxy-server=${newProxyUrl}`],
      });

      const page = await browser.newPage();
      await page.goto('https://www.example.com');
      await page.screenshot({ path: 'example.png' });
      await browser.close();

      // Shut down the local proxy and drop any open connections.
      await proxyChain.closeAnonymizedProxy(newProxyUrl, true);
    })();

This will cover most of the web scraping requests you’re likely to make. There are additional, more advanced options for custom responses and connecting to external APIs, but in many cases, this code will do the job.


Tips for Web Scraping with Chrome Headless

Now that you have the ability to use proxies with Puppeteer, it’s time to look at ways to make your headless scraping experience run smoother and more successfully. Use these headless scraping tips to make your web scraping more efficient.

Extract Data with jQuery

Why go through the difficulty of setting customized data scraping paths when you can use jQuery for the purpose?

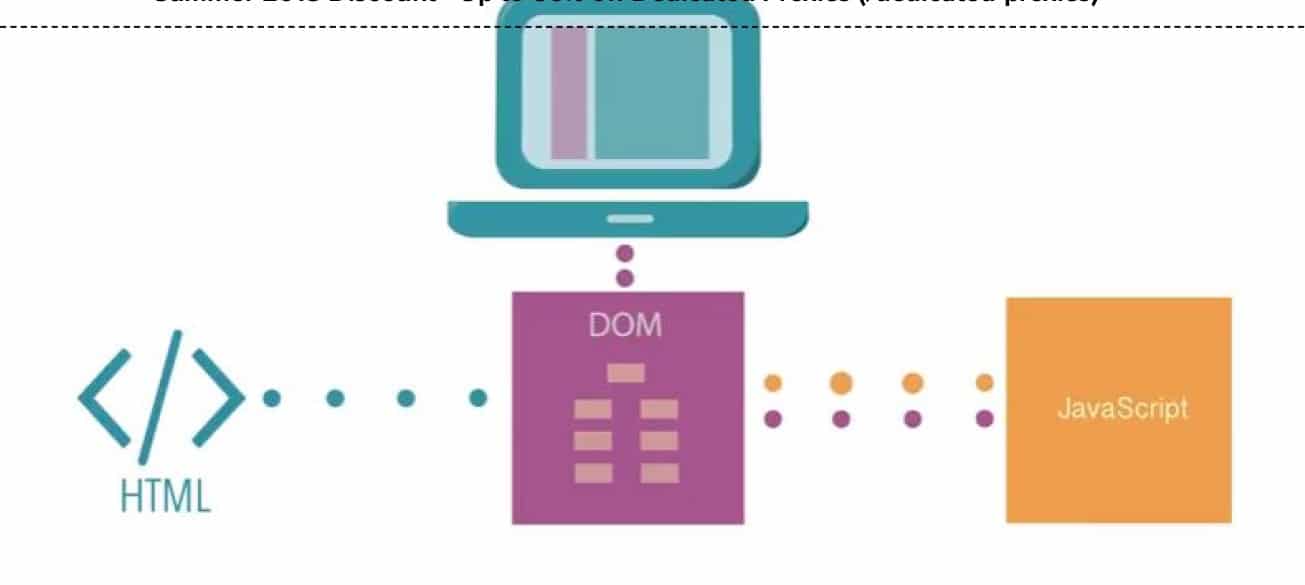

Any website that gives you its Document Object Model (DOM) can be scraped this way, since the DOM is just a structured tree of elements containing all the data on a given page. Use jQuery to scrape that and you have immediate access to the data you’re looking for.

When dealing with websites that already use jQuery, you only need to read through a couple of lines of page data to find what you’re looking for. Otherwise, you can load it yourself.
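As a rough sketch of both cases, the helpers below check whether a page already has jQuery, inject it if not, and then use it to pull out every link. They expect a Puppeteer page object. The names ensureJQuery and extractLinks, the CDN URL, and the choice of links as the data to extract are all assumptions made for the example.

```javascript
// Assumed CDN build of jQuery; swap in whichever copy you prefer.
const JQUERY_URL = 'https://code.jquery.com/jquery-3.7.1.min.js';

// Makes sure jQuery is available on the page, injecting it only when the
// site does not already ship it. Resolves to true if an injection happened.
async function ensureJQuery(page) {
  const present = await page.evaluate(() => typeof window.jQuery === 'function');
  if (!present) {
    await page.addScriptTag({ url: JQUERY_URL });
  }
  return !present;
}

// Collects the text and href of every link, using jQuery inside the page.
async function extractLinks(page) {
  await ensureJQuery(page);
  return page.evaluate(() =>
    window.jQuery('a[href]')
      .map((index, el) => ({ text: window.jQuery(el).text().trim(), href: el.href }))
      .get()
  );
}
```

After page.goto() has finished, await extractLinks(page) resolves to a plain array of { text, href } objects that you can store or filter in Node.js.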

Solve CAPTCHAs on the Fly

What happens when you’re busy extracting data from various websites and suddenly you get your proxies stuck behind CAPTCHA pages? In most cases, you have to call it quits for a while and wait until the website no longer asks for human authentication. This is the most reliable and recommended solution.

But if you learn how to make an HTTP request between your scraper code and a CAPTCHA solving service, you can solve CAPTCHAs automatically, using both optical character recognition and actual human beings for the purpose (although this method is not recommended).

While the majority of CAPTCHAs can be solved through artificial intelligence, most of these services employ a small number of full-time, salaried humans to solve the tougher CAPTCHAs. In any case, the toughest ones generally don’t take longer than half a minute to solve.
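The request flow usually amounts to submitting the CAPTCHA and then polling until an answer comes back. The sketch below shows that pattern against a purely hypothetical service: the /tasks endpoints, the JSON fields, and the bearer-token header are invented for illustration, so check your provider’s documentation for the real ones. It uses the fetch function built into Node.js 18 and later.

```javascript
const wait = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Submits a CAPTCHA image and polls for the answer.
// HYPOTHETICAL API: POST {baseUrl}/tasks returns { id }, and
// GET {baseUrl}/tasks/{id} returns { status, answer }. Real services differ.
async function solveCaptcha(imageBase64, options) {
  const {
    baseUrl,                      // placeholder such as 'https://captcha-solver.example'
    apiKey,
    fetchImpl = globalThis.fetch, // injectable so the flow can be tested offline
    pollMs = 5000,
    maxPolls = 12,
  } = options;
  const auth = { Authorization: `Bearer ${apiKey}` };

  // 1. Submit the image.
  const submitted = await fetchImpl(`${baseUrl}/tasks`, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json', ...auth },
    body: JSON.stringify({ image: imageBase64 }),
  });
  if (!submitted.ok) throw new Error(`Submit failed with HTTP ${submitted.status}`);
  const { id } = await submitted.json();

  // 2. Poll until the service reports an answer, or give up.
  for (let attempt = 0; attempt < maxPolls; attempt += 1) {
    await wait(pollMs);
    const res = await fetchImpl(`${baseUrl}/tasks/${id}`, { headers: auth });
    if (!res.ok) throw new Error(`Poll failed with HTTP ${res.status}`);
    const task = await res.json();
    if (task.status === 'done') return task.answer;
  }
  throw new Error('CAPTCHA was not solved in time');
}
```

With the default values, the function waits up to about a minute in total before giving up, which leaves room for the slower, human-solved CAPTCHAs.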

There are lots of APIs for CAPTCHA solving services available through any Google search, and they generally cost a few dollars for every thousand CAPTCHAs solved. Again, this is not recommended and you’ll end up wasting money in the long run on CAPTCHA solving instead of simply using a larger quantity of proxies.
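If you go the larger-pool route instead, the simplest approach is to cycle through your proxies so that no single address carries all the traffic. Here is a minimal round-robin sketch. The function name createProxyRotator and the proxy addresses in the usage note are placeholders.

```javascript
// Returns a function that hands out a --proxy-server argument for the next
// proxy in the list, wrapping around at the end (simple round-robin).
function createProxyRotator(proxyUrls) {
  if (!Array.isArray(proxyUrls) || proxyUrls.length === 0) {
    throw new Error('Provide at least one proxy URL');
  }
  let index = 0;
  return function nextProxyArg() {
    const proxy = proxyUrls[index];
    index = (index + 1) % proxyUrls.length;
    return `--proxy-server=${proxy}`;
  };
}
```

For example, create the rotator once with createProxyRotator(['http://proxy1.example.com:8000', 'http://proxy2.example.com:8000']) and pass args: [nextProxyArg()] to puppeteer.launch() each time you start a new browser.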


If you’re using PhantomBusters’ Buster Library, there is a simple way to make automatic calls to multiple CAPTCHA solving services directly from your Chrome Headless.

That kind of automation turns a time-consuming task into a simple one. A few lines of code can potentially turn your proxy script into a real human for thirty seconds or so.

Now You’re Ready to Scrape

As you gain experience in the world of scraping with Chrome Headless, you’ll begin to know where and when you need to set human-like delays to keep your scraper in each website’s good graces.
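A human-like delay can be as simple as a randomized pause between page visits. The sketch below is one way to do it. The helper names and the default range of 1.5 to 4 seconds are assumptions to tune per site, not recommended values.

```javascript
// Picks a whole number of milliseconds between minMs and maxMs, inclusive.
// The random source is injectable so the helper can be tested deterministically.
function randomDelayMs(minMs, maxMs, random = Math.random) {
  return Math.floor(minMs + random() * (maxMs - minMs + 1));
}

// Resolves after a randomized pause, so requests are not evenly spaced.
function humanPause(minMs = 1500, maxMs = 4000) {
  return new Promise((resolve) => setTimeout(resolve, randomDelayMs(minMs, maxMs)));
}
```

In a scraping loop, put await humanPause() between one page.goto() call and the next.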