There are a lot of reasons you might want to scrape data from Amazon. As a competing retailer, you might want to keep a database of their pricing data, so you can try to match them. You might want to keep an eye on competitors selling through the Amazon Marketplace. Maybe you want to aggregate review scores from around the Internet, and Amazon is one of the sources you’ll want to use. You could even be selling on Amazon yourself, and using the scraper to keep ahead of others doing the same.

I don’t recommend some of the more black hat uses for data scraping. If you’re scraping product descriptions to use for your own site, all you’re doing is shooting yourself in the foot as far as SEO is concerned. You should avoid basing your business model on scraped Amazon data; more on that later.

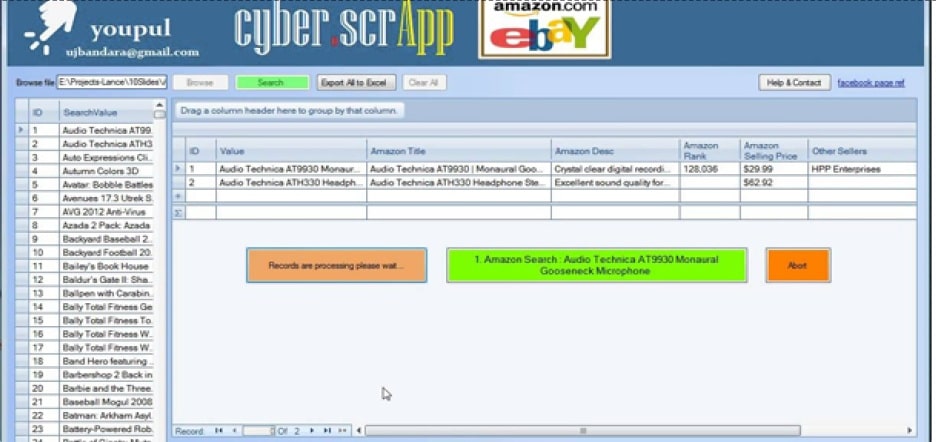

There are a lot of pieces of software out there designed to help you scrape Amazon data, as well as general-purpose screen scraping tools. You can get a lot of mileage out of them, but always exercise caution. You’ll want to be very sure a piece of software is legitimate before you drop $400 on it. Oh, and if the product you’re researching is marketed primarily through low-view YouTube videos with affiliate links in the descriptions, I recommend staying away.

On the other hand, you can use scripts rather than software. Scripts have the benefit of being endlessly configurable, and because you get the source code itself, you can see exactly what they do. As long as you have some idea of what you’re doing with the code, you can read it to make sure there’s nothing tricky going on, and you can change it to work exactly the way you want. Of course, that relies on you having enough coding knowledge to write and modify a script. Familiarity with languages like PHP and JavaScript, along with tools and formats such as cURL and XML, is a good idea.

If you’ve looked into this before, you might ask yourself why you would use a third-party scraper or a script you barely understand when you could just use Amazon’s API. It’s true that, for some purposes, Amazon’s API is a good alternative. However, it doesn’t provide all of the data you might want. The API is primarily designed for affiliates who want to advertise products in custom ways that aren’t covered by affiliate links or the product box widgets Amazon provides. There’s a lot of data it won’t let you harvest.

Technically, scraping data has been against Amazon’s policy for a long time. It wasn’t until 2012 that they really started enforcing it, however, so a lot of people got away with scraping for years. When enforcement finally came, many of them treated it as an insulting disruption to their business model, without considering that they had never been in the right to begin with.

The point I’m trying to make here is that if you’re scraping data from Amazon, what you’re doing is against their terms of service. That means you’re always at risk of numerous penalties. Usually, Amazon just shrugs and bans your IP. However, if you’ve been an especially tenacious pest or are using their data in a way they don’t approve of, they are perfectly within their rights to take you to court over it. This is, obviously, something to be avoided.

All of that said, Amazon seems to have eased up in recent years. This thread from 2014 indicates that Amazon doesn’t bother blocking small-scale scraping. They have automated systems that will slap you with a ban if you trip them, but they aren’t actively and persistently hunting down every data scraper. It makes sense; a retailer of their size has so much traffic to filter through every hour that banning every single scraper would be impossible.

Before you continue, here are seven things you should know about making Amazon the target of your data scraping. By keeping them in mind, you should be able to keep yourself safe from both automated bans and legal action.

1. Amazon is Very Liberal with IP Bans

The first thing to keep in mind if you’re going to harvest data from Amazon is that Amazon is very liberal with its bans. You won’t be harvesting data while logged into an account, at least not if you’re smart. That means the only way Amazon can ban you is through an IP ban.

The nice thing is that IP bans can be circumvented. The bad thing is that an IP ban from Amazon is not a punishment to be taken lightly. As far as I know, such bans are permanent.

There’s a prevailing attitude across the web, at least among tech circles, that IP bans are ineffectual and inefficient. They can also be detrimental to the site that uses them, depending on how broad an IP ban they use. You don’t want to ban a whole IP block; you might be eliminating an entire neighborhood from your potential customer base. At the same time, anyone dedicated to getting around an IP ban can do it with relative ease.

This is, more or less, true. IP bans are easy enough to get around, especially if you’re expecting and preparing for a ban in the first place. That’s what proxy servers are for.

A proxy server, in case you aren’t aware, is an intermediary that relays your traffic and masks your IP address. The website, in this case Amazon, sees your connection as coming from the proxy server rather than your home connection. If they ban you, they ban the proxy, and you can just switch to a different one.

Therefore, in order to maximize your chances of successfully harvesting all of the data you need on an ongoing basis, you’ll need numerous proxy servers. You want to be able to cycle through them to avoid any one IP being flagged for bot-like activity. You also want to have backups in case any of your proxies are banned, so you can keep harvesting without issues.

2. Amazon is Very Good at Detecting Bots

The number one mistake that scrapers make when harvesting data from Amazon, or any other site with a high profile and a plan to ban scrapers, is using scraper software without configuring it properly.

Think about it. If you were tasked with detecting bots and filtering them out from legitimate traffic, what would you look for? There are simple things, like the user agent and whether or not it identifies itself as a bot. Those are easily spoofed, though.

A more accurate way of detecting bots is by their behavior. A poorly programmed bot will try to make as many requests as possible as quickly as possible, or will make them on a fixed timer. Bots are, by definition, robotic. They repeat the same set of actions in the same order with the same timing, again and again.

Amazon is very good at distinguishing between bot actions and human actions. Therefore, to avoid your bots being banned, you need to mimic human behavior as much as possible. Don’t be repetitive. Don’t be predictable. Vary your actions, your timing, and your IP. It’s harder to identify a bot when it only accesses a couple of pages. From your end, you have an unbroken stream of data; from their end, a hundred different users came and performed in a similar way.

Safer for you, harder for them to handle.

3. You Absolutely Must Follow Laws and Keep a Low Profile

There are some regulations that apply to bots of all sorts, including content scrapers. I hesitate to call them laws, because there’s very little actual legal precedent about all of this. One of the big high-profile cases, though, is Craigslist versus Padmapper. Padmapper took Craigslist real estate listings and laid them over a Google Maps interface. This is a decent business model, and Padmapper still exists today, but Craigslist took offense at its data being used in a way that didn’t benefit Craigslist.

In the end, Craigslist effectively won that case, though it never reached a court judgment; it was settled out of court instead. This is a good lesson to take to heart. If you step on the wrong toes (and don’t comply with cease-and-desist letters immediately), you can become the subject of legal action, and you’re usually in the wrong. You can read about that case and others here.

Data scraping on its own generally doesn’t violate the law unless you’re harvesting private data or harvesting at a rate that disrupts the operation of the site, as a DDoS attack would. Your scraper must act as a public visitor and must not access internal data or anything that requires an account.

Otherwise, all of the restrictions placed upon you are more about the way you use that data, rather than the way you obtain it.

4. Never Sell Scraped Data or Use it to Make a Profit

I say “don’t use it to make a profit” but what I really mean is don’t use it as a foundation of your business model. Harvesting pricing data so you know what deals exist and what price point you can use to undercut people is fine. Harvesting product data that you use as your own to sell your own products is not.

I mentioned above that you shouldn’t copy product descriptions, because you’ll end up shooting yourself in the foot. This is because of Google’s algorithm, which heavily penalizes copied content. Google can tell that the product descriptions originated on Amazon, so when it finds them on your site, it will penalize your pages as low-effort copying from a bigger retailer.

Essentially, there are three core rules you should abide by when using the data you scrape.

1. Never harvest or use data that isn’t publicly available without an account.

2. Never sell harvested data or try to profit from it through a third party.

3. Never base your business model on the data you scrape from any site, Amazon included.

The first rule is very important, because it protects you from the issues that come up with data privacy. That’s the kind of thing that can really get you in trouble if you violate it, and it’s the kind of thing that has actual laws attached.

The second and third are just ways to hide the fact that you scraped Amazon data and to make it less likely that Amazon will target you with any sort of legal action.

5. Always Review Scraping Software Before Using

This is a general tip for any time you’re downloading software, particularly in a gray hat or black hat arena. Scraping software may not be illegal, but it has a bad reputation, and as such it often attracts malicious actors.

First, before you buy, investigate. Make sure there are positive reviews that don’t look like they were paid for by the developers themselves. You should also look into pricing. There are high-quality scrapers that cost a ton, but there are also pretty good scrapers for cheap or free.

If you’re getting a script or open-source code, you’ll want to review the code yourself or pay someone to review it for you, so you know what the script is doing. You don’t want to scrape data and save it to a database only to find that the script is also sending that data to a remote server.
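If the script happens to be written in Python, a quick pass over its imports can show you where to focus that review. The sketch below is a hypothetical helper of my own (the `flag_network_imports` function, the `SUSPECT_MODULES` list, and the `flag_imports.py` filename are all assumptions, not part of any existing tool). It uses Python’s standard `ast` module to list imports of modules that can open network connections, run other programs, or load code dynamically. It only sees static imports, so code hidden behind `exec` or obfuscation will slip past it; treat it as a starting point, not a substitute for actually reading the code.

```python
import ast
import sys
from typing import List, Tuple

# Modules that can open network connections, run other programs, or load
# code dynamically. An illustrative assumption, not an exhaustive list.
SUSPECT_MODULES = {
    "socket", "ssl", "http", "urllib", "ftplib", "smtplib",
    "requests", "httpx", "aiohttp", "subprocess", "importlib",
}


def flag_network_imports(source: str) -> List[Tuple[int, str]]:
    """Return (line number, module name) pairs for imports worth a closer look."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module:
            names = [node.module]
        else:
            continue
        for name in names:
            # Compare only the top-level package, so "urllib.request" matches "urllib".
            if name.split(".")[0] in SUSPECT_MODULES:
                findings.append((node.lineno, name))
    return sorted(findings)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python flag_imports.py path/to/scraper.py
    with open(sys.argv[1], encoding="utf-8") as handle:
        for lineno, module in flag_network_imports(handle.read()):
            print(f"line {lineno}: imports {module}")
```

A hit isn’t proof of anything shady, since a scraper needs network access to do its job. The point is to find every place the script talks to the outside world and confirm each one only contacts the site you meant to scrape.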

This is even more important if you’re using a scraper that requires you to log in, whether with credentials for Amazon or for anything else. Always assume that any password you give the scraper will be stolen, unless you verify for yourself that it won’t be.

Also, scan the app for viruses. Antivirus programs will usually block a download that has a virus embedded in it, but some malware is hidden well enough that only a detailed scan will catch it.

6. Implement a Limit on Queries per Second

This is something else I mentioned earlier, but I’ll go into more detail now. There are two reasons to avoid making too many queries in too short a span.

The first reason is that you’ll be detected as a bot. As I said, it’s very easy to be seen as a bot when you’re making identical requests on different URLs with the same timing, over and over. It’s a sure-fire way to get your proxy banned.

The second reason is to avoid being accused of DDoSing the site you’re harvesting. It’s not very likely that your bot or script will have the power to even slightly slow Amazon down, but that doesn’t mean their DDoS protection won’t filter out your requests if you make too many too quickly.

You should also consider your own system’s limits. There are only so many operations your computer can handle at once; a script making too many requests can overload a network card or modem, and saving all of that data can bog down a hard drive that isn’t fast enough.

Then there’s the issue of proxies. They don’t always allow high throughput, and they can add significant lag. That’s a common problem with proxies: many of them are located overseas, which adds significantly to response times.
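One straightforward way to enforce a queries-per-second ceiling is to space requests evenly, so no two go out closer together than `1 / max_per_second` seconds. Here is a minimal Python sketch under that assumption; the `RateLimiter` class and its parameter names are hypothetical, my own choice rather than any library’s API. The clock and sleep functions are injectable so the limiter can be tested without actually waiting.

```python
import time
from typing import Callable, Optional


class RateLimiter:
    """Space calls evenly so that at most `max_per_second` run per second."""

    def __init__(
        self,
        max_per_second: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        if max_per_second <= 0:
            raise ValueError("max_per_second must be positive")
        self.min_interval = 1.0 / max_per_second
        self._clock = clock  # injectable so tests don't have to wait
        self._sleep = sleep
        self._next_allowed: Optional[float] = None  # earliest time for the next call

    def wait(self) -> float:
        """Block until the next request may go out; return the seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._next_allowed is not None and now < self._next_allowed:
            delay = self._next_allowed - now
            self._sleep(delay)
        # The current call proceeds at now + delay; reserve the next slot after it.
        self._next_allowed = now + delay + self.min_interval
        return delay
```

In a scraping loop, you would create one limiter with whatever ceiling you settle on and call `limiter.wait()` right before each request. Even spacing is the simplest policy and also keeps the load on your own network card and disk steady; a token bucket is a common alternative that allows short bursts while holding the same long-run average.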

7. Rotate Your Proxies

This is, again, something I mentioned briefly earlier. You’re going to want a number of different proxies, so you can spread out your requests and make them look much less suspicious. That means buying access to proxy lists, or to a service that gives you access to many proxies at once.

You should also consider using private proxies rather than public ones. Private proxies avoid most of the issues that plague public proxies. For one thing, they don’t need to interrupt your browsing with ads in order to make a buck. They’re also much less laggy, because only a limited number of users can access each one.

They’re also much less likely to be banned already. Public proxies are the low-quality solution for people who don’t know what they’re doing. Private proxies are the answer for those who demand higher levels of quality from their scraping.