When most people think of proxies, they think of an anonymous agent: something that hides your identity from snooping eyes.

This is an accurate concept. A proxy replaces your unique IP address (the one provided by your Internet Service Provider) with its own in the requests you send. That’s how it masks your identity.

However, it doesn’t paint the whole picture; it only describes the end result. That result is only valuable if it’s actually achieved. What happens when a proxy doesn’t work? Then it’s pretty useless, right?

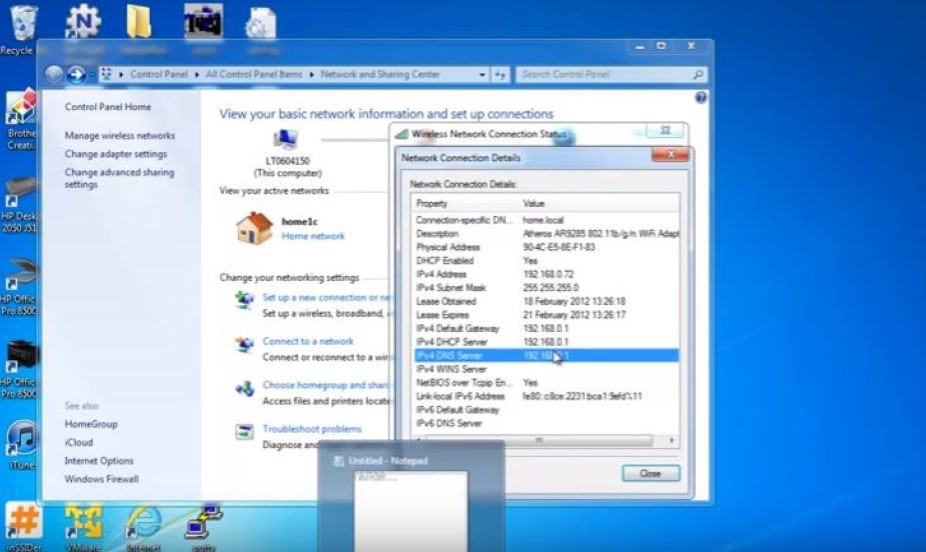

Proxies aren’t the simplest piece of technology in the world — they require you to dig into your OS settings, browser settings, or specific software settings in order to be used properly. Even then, once you’ve entered all the information correctly, they can be finicky.

If your goal is to simply browse the web like a normal user, but with a proxy IP in place of your own, it won’t be too difficult. You may have to troubleshoot a bit to make sure your internet speeds stay fast and your identity is truly protected, but you should be just fine.

It gets more complicated when you want to scrape search engines in bulk, or, really, send any massive volume of pings and requests through proxies, since many of those requests end up going through search engines.

Search Engine Blocks

The most common scenario for this in the scraping community is loading up a software application like ScrapeBox with proxies, setting the request intervals and URL destinations, and clicking “Scrape.” When you check back ten minutes later, however, you find that harvesting has halted and something has gone wrong.

Another scenario is when you’re trying to use proxies to grab large batches of Ticketmaster tickets or special edition Nike shoes, or to get a bid in during the last 0.02 seconds of an eBay auction. In essence, you’re using proxies to buy something.

However, you get stopped in your tracks. Pages won’t load, a plethora of captchas show up, and you’re locked out.

In the scraping case, the first culprit is the search engine. In the buying case, it may instead be the individual sites themselves, which are known to have powerful anti-proxy technologies in place specifically to stop this kind of activity.

The good news is that in either situation the remedies are pretty similar. They have to do with what proxies you’re using and how you’re using them. In most cases, any issues you have can be solved with one (or all) of the methods below.

Methods for Bypassing Search Engine Blocks

Dedicated Proxies

This step is a bit high-end: it’s really hard to find a reliable source of dedicated IPs, and if you do, they may cost more than you want to pay.

That said, if you’re serious about scraping and really don’t want to be blocked by search engines, using dedicated IPs is one of the best things you can do.

Essentially, IP addresses are either assigned by an ISP or purchased through a proxy provider. Most of these providers source their proxies from data centers, massive buildings full of servers. Datacenter IPs come in contiguous ranges, one IP after the other, a bit like the clone army in Star Wars.

Some advanced websites, like the ones mentioned earlier in this article, block entire ranges of datacenter IP addresses and have ways of telling whether an IP belongs to a datacenter or to a residential connection. The key is to find a quality dedicated proxy provider who won’t oversell its IPs; IPs that aren’t oversold stay cleaner and are less likely to be banned by search engines.
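To see how exposed your own pool is to range-level blocking, you can count how many of your proxies share a range. The sketch below is a minimal illustration under assumptions: it expects plain IPv4 addresses, treats a /24 block (256 consecutive addresses) as one “range” even though real blocklists may use larger or smaller ranges, and `subnet_counts` and `range_concentration` are hypothetical helper names, not part of any proxy tool.

```python
import ipaddress
from collections import Counter


def subnet_counts(proxy_ips, prefix=24):
    """Count how many IPv4 proxy addresses fall into each /prefix network."""
    counts = Counter()
    for ip in proxy_ips:
        # strict=False turns a host address such as 192.0.2.10/24 into its network 192.0.2.0/24
        network = ipaddress.IPv4Network(f"{ip}/{prefix}", strict=False)
        counts[str(network)] += 1
    return counts


def range_concentration(proxy_ips, prefix=24):
    """Share of the pool that sits in its single most crowded range (0.0 to 1.0)."""
    counts = subnet_counts(proxy_ips, prefix)
    if not counts:
        return 0.0
    return max(counts.values()) / sum(counts.values())


# Placeholder addresses from the reserved documentation ranges
pool = ["192.0.2.10", "192.0.2.11", "192.0.2.12", "198.51.100.7", "203.0.113.45"]
print(subnet_counts(pool))        # three of the five share 192.0.2.0/24
print(range_concentration(pool))  # 0.6
```

A high concentration means a single range ban could take out most of your pool at once, which is exactly the risk with oversold, sequential datacenter IPs.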

Rotate Your Proxies

Yes, this means you’ll need a batch of proxies to rotate. If you thought you could do heavy scrapes of the internet or large buys on heavily protected sites with a few proxies, you are sorely mistaken.

Depending on your level of scraping and how often you are running the software (some people do it 24/7), the number of proxies you need will be different.

In general, I recommend batches of 10 proxies for low-end scraping, 50 for a middle range, and 100 or more if you’re getting serious.

The key here is to use software or a plug-in that allows you to paste your entire load of proxies at once, and then set a customizable randomizer.

For example, if you’re doing a medium amount of scraping and have 50 proxies to your name, tell the software to use 5 to 10 of those proxies at any given time. Then tell it to swap in new proxies at random every three minutes.

This way your searches, which typically hit a website or search engine over and over and over, will rarely carry the same IP long enough for the requests to be traced or linked to one another.

You could do the randomization manually, but it would take a very long time. Most scraping software includes this ability, as do certain browser extensions.
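If you are writing your own tooling, the rotation scheme described above fits in a few lines of Python. This is a minimal sketch under assumptions, not how any particular tool implements it: `ProxyRotator` and its parameters are hypothetical names, the pool is a plain list of proxy URLs, and the defaults simply mirror the example in the text (5 to 10 active proxies, a fresh random subset every 180 seconds). The clock and random generator can be swapped out so the behavior can be tested without waiting three minutes.

```python
import random
import time


class ProxyRotator:
    """Keep a random subset of a larger proxy pool active, reshuffling it on a timer.

    Hypothetical helper: the defaults mirror the example in the text
    (5 to 10 proxies active at once, a new random subset every three minutes).
    """

    def __init__(self, pool, min_active=5, max_active=10,
                 rotate_every=180.0, rng=None, clock=time.monotonic):
        self.pool = list(pool)
        if not 0 < min_active <= max_active <= len(self.pool):
            raise ValueError("need 0 < min_active <= max_active <= len(pool)")
        self.min_active = min_active
        self.max_active = max_active
        self.rotate_every = rotate_every      # seconds between reshuffles
        self.rng = rng or random.Random()
        self.clock = clock
        self._rotate()

    def _rotate(self):
        # Pick how many proxies to use this round, then sample them without repeats
        size = self.rng.randint(self.min_active, self.max_active)
        self.active = self.rng.sample(self.pool, size)
        self._rotated_at = self.clock()

    def next_proxy(self):
        """Return a proxy for the next request, reshuffling first if the subset is stale."""
        if self.clock() - self._rotated_at >= self.rotate_every:
            self._rotate()
        return self.rng.choice(self.active)


# Example: 50 placeholder proxies from the 192.0.2.0/24 documentation range
pool = [f"http://192.0.2.{i}:8080" for i in range(1, 51)]
rotator = ProxyRotator(pool)
proxy = rotator.next_proxy()
```

If you send requests with the `requests` library, the chosen proxy would typically be passed as `proxies={"http": proxy, "https": proxy}`.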

Rotating IP Providers

The next method in this step is to seek out a service that rotates proxies for you. Instead of buying a batch of 50 proxies whose IP addresses generally stay the same, you get 50 new proxies from the provider every couple hours.

This has one big advantage: far more thorough randomization. In the previous example, even if you used a randomizer in your software, you’d still be cycling through the same 50 proxies, so there would be repeats.

If the proxy provider changes your proxies every couple hours, it will be very hard for a search engine to pin down and block them. This is even more true if you still randomize those rotating proxies, keep their request rates reasonable, and follow the other rules of scraping.

This choice comes with two drawbacks:

- Cost

Having frequent access to so many new proxies all the time will cost more than your typical service. This also assumes you’re already paying for proxies, instead of using free ones, which I’ll get into below.

- Ease of Use

While completely new IP addresses every couple hours sounds great, you will need to find a way to easily port these into the software application you’re using. This can be a simple copy and paste, but for those who love setting their programs to run for days at a time, this might not work.

On the other hand, if you’re a coder, you could probably automate this.
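As a rough sketch of that automation, the snippet below re-downloads the provider’s list once it is older than a set interval. It assumes the provider publishes the current batch as plain text, one `host:port` per line (check your provider’s documentation; formats vary). `ProxyListRefresher`, `parse_proxy_list`, and the two-hour default are hypothetical. `fetch` is any function that returns that text; with the standard library it could be something like `lambda: urllib.request.urlopen(LIST_URL).read().decode()`, where `LIST_URL` is whatever address your provider gives you.

```python
import time


def parse_proxy_list(text):
    """Turn 'host:port' lines into a list, skipping blank lines and # comments."""
    proxies = []
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            proxies.append(line)
    return proxies


class ProxyListRefresher:
    """Re-fetch the provider's proxy list once it is older than refresh_every seconds."""

    def __init__(self, fetch, refresh_every=2 * 60 * 60, clock=time.monotonic):
        self.fetch = fetch                  # callable returning the raw list as text
        self.refresh_every = refresh_every  # hypothetical default: two hours
        self.clock = clock
        self._proxies = []
        self._fetched_at = None

    def proxies(self):
        """Return the current list, downloading a fresh one if the cached copy is stale."""
        now = self.clock()
        if self._fetched_at is None or now - self._fetched_at >= self.refresh_every:
            fresh = parse_proxy_list(self.fetch())
            if fresh:                       # keep the old list if the download came back empty
                self._proxies = fresh
            self._fetched_at = now
        return list(self._proxies)
```

Your scraper would then call `refresher.proxies()` before each batch instead of reading a pasted list, so long runs pick up each new batch without manual copying and pasting.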

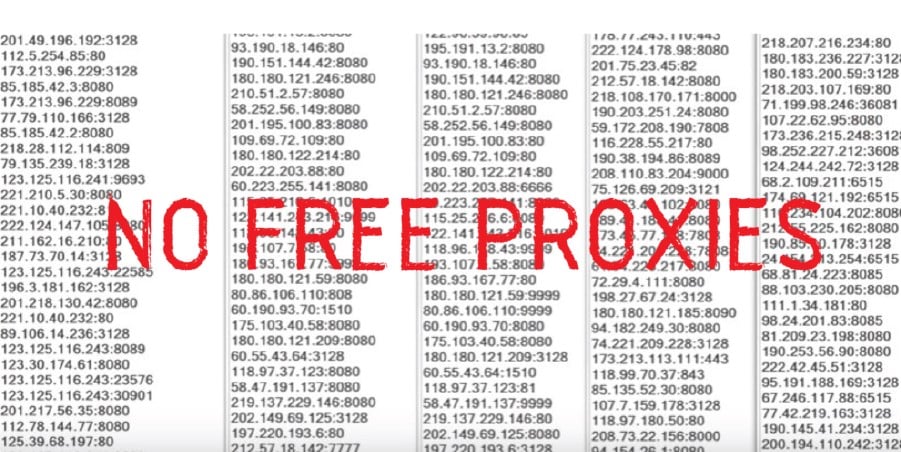

Don’t Use Free or Shared Proxies

You might have heard this advice before, but in case you aren’t already following it: don’t use free, shared, or residential proxies.

Free Proxies

The above step, in which you rotate your proxies, can technically be done with free proxies you’re grabbing in batches off the web. In theory you could scrape massive lists of free proxies and put them in your randomizer, thereby making sure you don’t get hung up by search engines.

However, you’ll find that most of these proxies don’t work. You’ll get more blocks, time outs, and other issues with free proxies for a couple reasons.

First, they are overused. The fact that they’re free means anyone can use them, and that’s precisely who is using them at this moment. If one proxy has 15 people on it, all of whom are scraping, you’re not going to get through a search engine. It’s going to send up red flags, and your scrape will fail.

There are rare gems among free proxies, with high security, the right country, quick speeds, and few users, but those last an hour or two at most. All good things come to an end.

If you’re serious about scraping over the long run, finding and swapping in new free proxies will cost you more in time than paying for proxies would cost you in money.

Second, free proxies can serve malware, and they often alter your browser information through strange user agents and other methods. This means you might not get the information you need, and you can’t be certain of their security.
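One way to cut down on the dead and timed-out proxies described above is to screen a scraped list before it goes into your rotation. This is a minimal sketch with a deliberately narrow check: it only confirms that each `(host, port)` accepts a TCP connection within a timeout. It cannot tell you whether a live proxy is overused, already flagged by a search engine, or tampering with your traffic. `is_reachable` and `filter_reachable` are hypothetical names.

```python
import socket
from concurrent.futures import ThreadPoolExecutor


def is_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts, and DNS failures
        return False


def filter_reachable(proxies, timeout=3.0, workers=20):
    """Keep only the (host, port) pairs that accept a TCP connection, checked in parallel."""
    proxies = list(proxies)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(lambda hp: is_reachable(hp[0], hp[1], timeout), proxies)
        return [hp for hp, ok in zip(proxies, results) if ok]
```

A check like this only filters out the obviously broken entries; a proxy that passes can still fail on the search engine itself, which is why the overuse problem above matters more than raw availability.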

Shared Proxies

Shared proxies are the black sheep of the proxy world. You have to pay for them, but they are shared with other people. This means they cost less, but it also means an unknown number of other users are on the same IPs alongside you, all doing fairly private things.

It’s not entirely clear to me why someone would pay for shared proxies, unless they are really concerned about cost. Shared proxies are cheaper, but not so much cheaper that the savings outweigh the advantages of private proxies.


The same issue present in free proxies is true for shared proxies as well: they could be banned or flagged by search engines and websites at any time without your knowledge.

Even if you tune your scrape settings perfectly to avoid a ban, three other people on the same proxy might not, and the proxy gets blocked anyway.

The overall gist: use private proxies if you want to stop getting blocked by search engines.

Randomize User Agents

Much like you need to randomize your IPs, you also need to randomize your user agents. A user agent is an identifying string that your browser sends to servers with each request; it contains information like your browser type and OS.

Generally speaking this isn’t a huge issue, but if you scrape heavily it’s important.

Let’s say you have 50 proxies and you’re rotating them well. You’re reaping massive amounts of data, and it throws up Google’s red flag. Google will look at the user agents transmitted with each of these proxy requests. If every single one of them carries the exact same user agent, your proxies are likely to get blocked.

You can switch your user agents in software or through extensions, just like your proxies. It’s a good, easy step that makes your traffic less uniform and makes your proxies look genuinely different from each other.
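If your scraper lets you set request headers, pairing user agents with proxies takes only a few lines. The sketch below is one possible approach, not a standard: `UserAgentAssigner` is a hypothetical helper, and the strings in `USER_AGENTS` are examples in the usual browser format that will go stale as browsers update. It gives each proxy its own randomly chosen user agent and keeps it, on the assumption that one IP flipping between browsers on every request could look just as odd as every IP sharing one browser.

```python
import random

# Example strings only: browser versions change often, so refresh this list
# with current user agents before relying on it.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.1 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


class UserAgentAssigner:
    """Give each proxy a randomly chosen user agent and keep it for that proxy."""

    def __init__(self, user_agents=USER_AGENTS, rng=None):
        self.user_agents = list(user_agents)
        self.rng = rng or random.Random()
        self._by_proxy = {}

    def headers_for(self, proxy):
        """Return request headers carrying the user agent assigned to this proxy."""
        if proxy not in self._by_proxy:
            self._by_proxy[proxy] = self.rng.choice(self.user_agents)
        return {"User-Agent": self._by_proxy[proxy]}
```

With the `requests` library, for example, you would pass `headers=assigner.headers_for(proxy)` alongside `proxies={"http": proxy, "https": proxy}`. If you prefer a fresh user agent on every request, call `rng.choice` directly instead of caching per proxy.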

Stop Using So Many Search Modifiers

This one has less to do with proxies and more to do with the way you search or scrape a search engine.

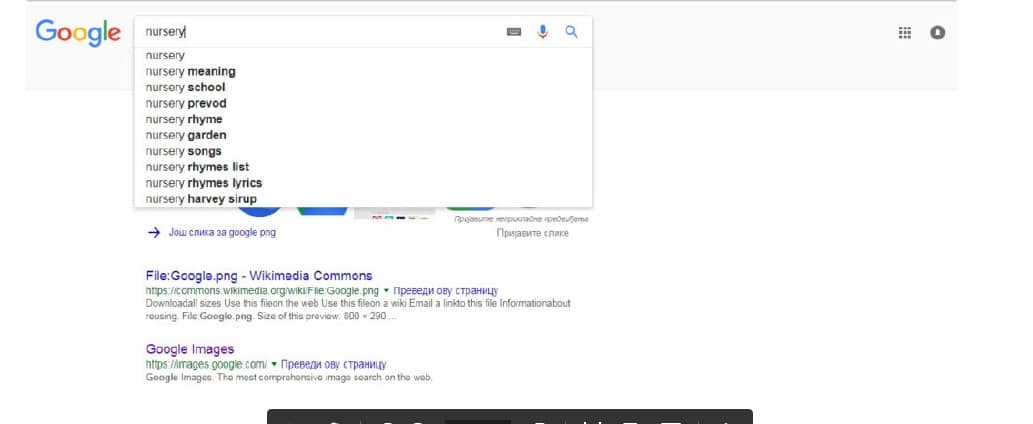

Today, search is incredibly intuitive. You can type “nursery” into Google and it will pull up a list of plant nurseries in your area, a map of those stores, their websites, and then, further down, general information about gardening and nurseries.

This is a far cry from the early days, when you had to write more difficult, technical, and complex queries to get the results you wanted. “Nursery” would have pulled up definitions of the word, examples of baby rooms, and possibly some gardening information.

The point is that Google now expects the general populace, a.k.a. normal humans, to search in this easy, carefree manner.

Scrapers like yourself don’t have any interest in these kinds of searches. You’re going after search modifiers: anything that lets you refine a search to a very specific point, such as requiring a keyword in the URL (Google’s inurl: operator) or excluding other keywords (a minus sign before a word).

Modifiers are a great way to get precisely the results you want, and therefore the data you want.

However, Google and other search engines aren’t stupid. They recognize when someone (or something) is searching with modifiers, and they know that means it’s likely not a normal user. That raises a small red flag, which grows every time you repeat the same term, or the same modifier slightly tweaked.

All of this sets a search engine on your scent. If you’re not careful (and performing the other steps here), they’ll block you.

Go about searching like a normal human, or at least space out your requests enough that the red flag isn’t raised.
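Spacing out requests is easy to automate. The sketch below assumes nothing about any particular scraper: `Throttle` is a hypothetical helper, and the 10-second base and 5-second jitter are placeholder values, not thresholds any search engine publishes. The random jitter keeps the gaps from forming the perfectly regular rhythm that a fixed interval would produce.

```python
import random
import time


class Throttle:
    """Enforce a randomized gap (base plus or minus jitter seconds) between requests."""

    def __init__(self, base=10.0, jitter=5.0, rng=None,
                 clock=time.monotonic, sleep=time.sleep):
        self.base = base        # placeholder average gap, in seconds
        self.jitter = jitter    # placeholder random spread around the base
        self.rng = rng or random.Random()
        self.clock = clock
        self.sleep = sleep
        self._last = None

    def wait(self):
        """Call before each request; sleeps only if the previous one was too recent."""
        if self._last is not None:
            gap = max(0.0, self.base + self.rng.uniform(-self.jitter, self.jitter))
            remaining = self._last + gap - self.clock()
            if remaining > 0:
                self.sleep(remaining)
        self._last = self.clock()
```

Calling `throttle.wait()` before every query keeps the pace human-ish; time already spent parsing results counts toward the gap, so the scraper never waits longer than it needs to.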

Visit the Search Engine’s Homepage First

This final step is also about how you search, and less about your proxy usage. Many unsuspecting scrapers and coders don’t think about how human their traffic needs to look to get around blocks.

In this case, think about how a human goes about browsing. They go to google.com, type in their phrase, and then look through the results. Or they type it into the web browser search bar, which is basically the same thing.

Many scraper programs skip that first step of actually going to google.com, bing.com, or yahoo.com, and instead search for a term immediately.

This is super obvious to a search engine, especially when it’s repeated hundreds (or thousands) of times in a short time frame with similar IP addresses.

Hopefully your scraper can visit multiple URLs in sequence. If it can, always start at the search engine’s homepage; if it can’t, you may have to code your own.
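For those coding their own, the homepage-first pattern can be sketched as follows, assuming you fetch pages with an HTTP client that keeps cookies between requests, such as a `requests.Session`. `visit_homepage_first` is a hypothetical helper. The `q` query parameter matches the search URLs Google and Bing use today, but other engines may differ, which is why it is a parameter. The function takes the fetch function as an argument so it is not tied to one library.

```python
from urllib.parse import urlencode


def visit_homepage_first(get, home_url, search_url, query, param="q"):
    """Fetch the engine's homepage, then its results page, through the same client.

    `get` is any callable that takes a URL, e.g. requests.Session().get, so that
    cookies set by the homepage are sent along with the search request.
    """
    home = get(home_url)
    results = get(f"{search_url}?{urlencode({param: query})}")
    return home, results


# Usage with requests (not run here):
#   session = requests.Session()
#   home, results = visit_homepage_first(session.get, "https://www.bing.com/",
#                                        "https://www.bing.com/search", "garden nursery")
```

Combined with a pause between the two requests, this makes each search look more like a person who opened the site and then typed a query.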

Blocks Begone

A blocked proxy is a sad, frustrating thing. Search engines most commonly block requests for the reasons above, so go through the list, make sure you’re operating as best you can, and try again.

If you’re still getting blocked, consult your proxy provider, and see whether another provider might work better.