Do you want to learn how to build web scrapers using Python? Read on to learn how to build a simple web scraper. Code, together with explanations, is included.

Have you ever wondered how programmers build web scrapers for extracting data from websites? If you have, then this article has been written specifically for you.

It is no longer news that we live in a data-driven world, and much of the data required by businesses can be found online. Using automation bots known as web scrapers, you can pull the required data from websites at high speed.

Google does it, and so do Yahoo, Semrush, Ahrefs, and many other data-driven websites.

I am going to show you how you can start building a web scraper. No, it is not going to be high-end like that of Google. It can’t even be compared to many production-ready web scrapers.

But it is going to be a useful tool that you can use straight away. I chose to build this web scraper for this tutorial because it is something I can personally use, and it is simple to build. Let's start with the problem definition.

Problem Definition

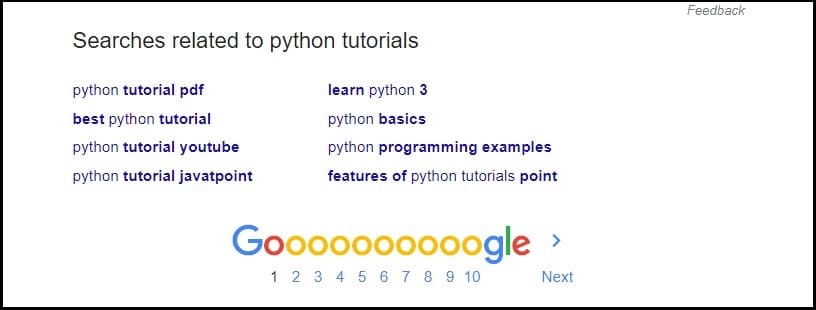

Build a web scraper that scrapes Google related keywords and writes them into a text file. In essence, we will be building an SEO tool that accepts a search keyword as input and then scrapes the related keywords for you. In case you do not know, Google related keywords are the keyword suggestions found below the search engine listings.

The keywords are related to the search keyword you entered into Google search and can be incorporated into an article related to the keyword search for SEO purposes.

There are many paid tools on the market that do this, and they have other functionality that ours does not. Because this one has been built for a tutorial, I stripped out all the complexities, which means there is no exception (error) handling. If you enter a keyword that has no related keywords, it will throw an exception, and the program will crash.

Requirements

Even though this is a beginner-level tutorial, I expect you to know how to code a little bit in Python. You should know the basic Python data types and data structures, such as integers, strings, lists, tuples, and dictionaries. You should also know how to loop through a list using the for-in loop.

You should also know how to create functions and classes, as the code is written in the Object-Oriented Programming (OOP) paradigm. Finally, you are expected to know how to read and write HTML, which you need when inspecting the data to be scraped.

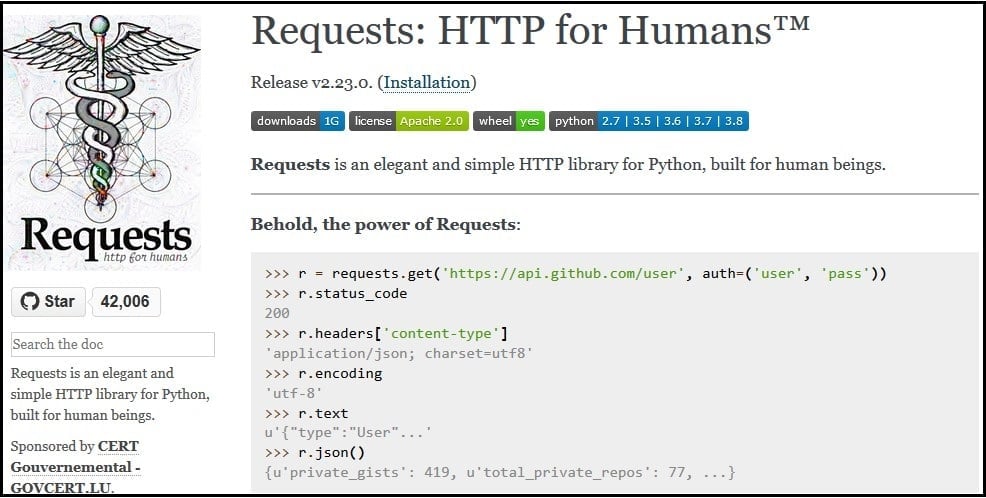

The required dependencies are only two – requests and BeautifulSoup.

Requests is an HTTP library for Python, used for sending HTTP requests. While you can use the urllib module in the standard library, Requests is a better option. Use the “pip install requests” command to install it.

BeautifulSoup is an HTML and XML parser for Python. With this library, you can parse data from webpages. Installing it is easy too: just run the “pip install beautifulsoup4” command in your command prompt.

Without the above two libraries installed, you won’t be able to follow this tutorial. Install them before we continue.

Step By Step – Python Web Scraping Tutorial

Step 1: Inspect HTML of Google Search Engine Result Pages (SERP)

The first step in every web scraping exercise is to inspect the HTML of the page. This is because when you send an HTTP GET request to a page, the whole page will be downloaded. You need to know where to look for the data you are interested in. Only then can you extract the data.

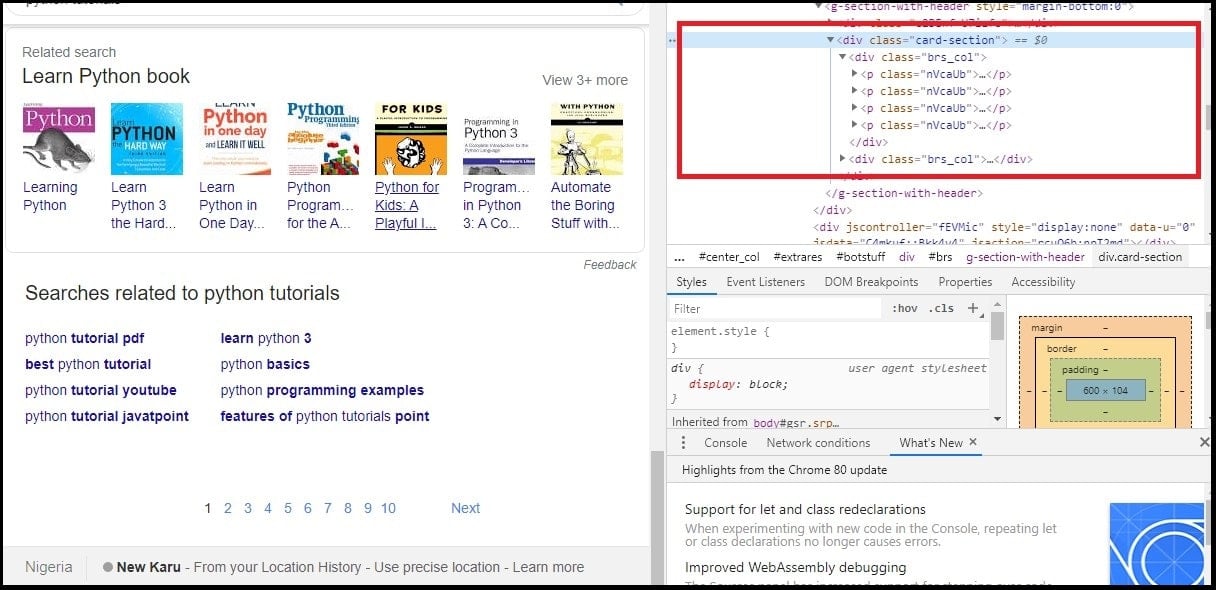

- Start by searching for the phrase “python tutorials” and scroll down to the bottom of the page where the list of related keywords is displayed.

- Right-click on the section of related keywords and select “Inspect Element.”

- You will see that the whole section of the related search keyword is embedded within a div element with a class attribute – card-section.

- Usually, there are eight (8) keywords in this section, divided into two (2) columns of four (4) keywords each. Each column is embedded within a div element with the class attribute brs_col.

- For each of the columns of 4 keywords, the keywords are embedded as anchor elements (<a>) within a paragraph element <p> with a class attribute – nVcaUb.

From the above, to reach any of the 8 keywords, you need to follow this path: div (class: card-section) -> div (class: brs_col) -> p (class: nVcaUb) -> a.
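Before sending any request to Google, you can check that path against a small hand-written fragment. The markup below is a hypothetical, heavily trimmed stand-in for the real SERP: only the class names come from the inspection (spelled as in this tutorial's code, with an underscore in brs_col), and the keyword texts are placeholders. `soup.select()` expresses the whole div -> div -> p -> a path as one CSS selector. Treat the class names as a snapshot, since Google changes its markup.

```python
from bs4 import BeautifulSoup

# Hypothetical, trimmed-down markup mimicking the structure found during
# inspection; the real SERP contains far more than this.
SAMPLE_HTML = """
<div class="card-section">
  <div class="brs_col">
    <p class="nVcaUb"><a href="#">related keyword 1</a></p>
    <p class="nVcaUb"><a href="#">related keyword 2</a></p>
  </div>
  <div class="brs_col">
    <p class="nVcaUb"><a href="#">related keyword 3</a></p>
    <p class="nVcaUb"><a href="#">related keyword 4</a></p>
  </div>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# The inspection path as a single CSS selector. Spaces are descendant
# combinators, so extra wrapper elements in between do not break the match.
links = soup.select("div.card-section div.brs_col p.nVcaUb a")
keywords = [a.get_text(strip=True) for a in links]
print(keywords)
# ['related keyword 1', 'related keyword 2', 'related keyword 3', 'related keyword 4']
```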

Step 2: Import required Libraries

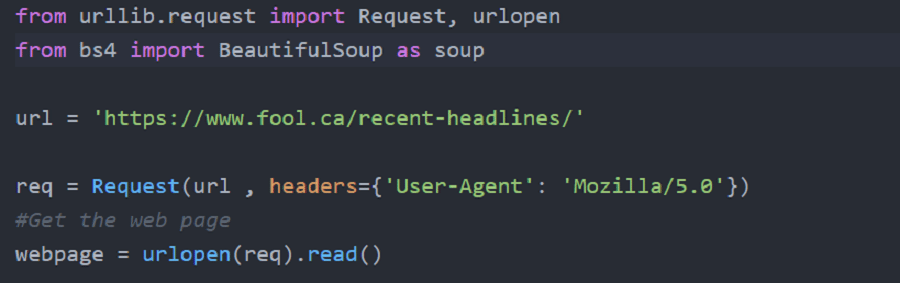

Launch your preferred IDE. PyCharm is my Python IDE of choice, but for this tutorial I used IDLE, the editor that comes bundled with Python. After launching IDLE, create a new Python file (.py), name it “KeywordScraper.py”, and import the required modules.

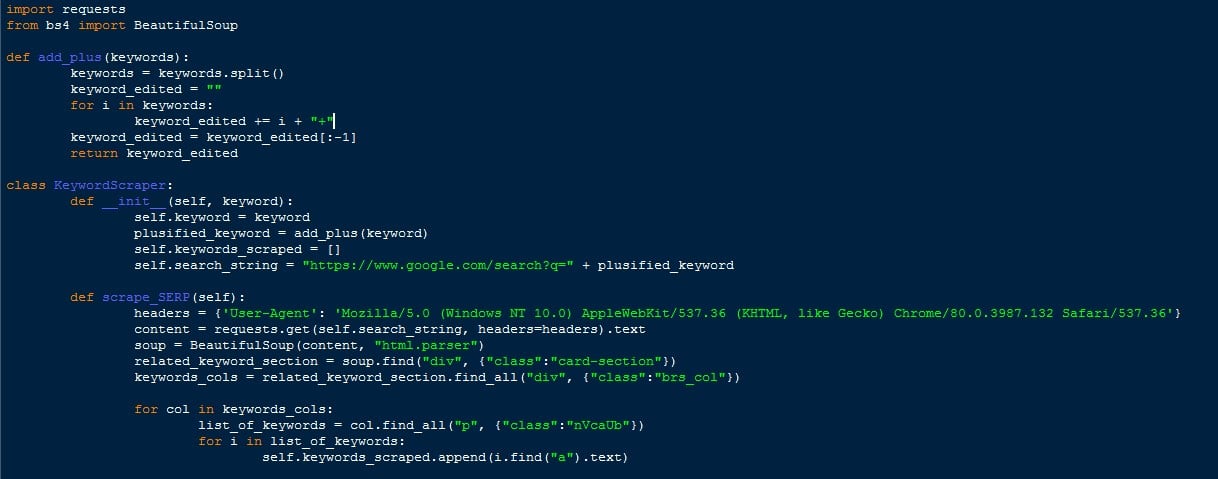

Step 3: Create a helper function for adding plus to keywords

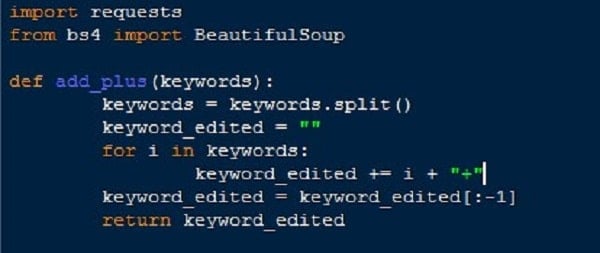

The search URL for the keyword “python tutorials” is https://www.google.com/search?q=python+tutorials. The way Google builds this URL is simple. The search URL without a keyword is https://www.google.com/search?q=, and the keyword is appended immediately after the q=.

But before the keyword is appended, the spaces between words are replaced with plus signs (+), so “python tutorials” becomes “python+tutorials” and the search URL becomes https://www.google.com/search?q=python+tutorials. Below is the helper function that does this, together with the imports from Step 2.

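```python
import requests
from bs4 import BeautifulSoup


def add_plus(keywords):
    """Join the words of a search phrase with "+" ("python tutorials" -> "python+tutorials")."""
    keywords = keywords.split()  # split on whitespace into a list of words
    keyword_edited = ""
    for i in keywords:
        keyword_edited += i + "+"
    # Drop the trailing "+" left over from the loop.
    keyword_edited = keyword_edited[:-1]
    return keyword_edited
```

Step 4: Create a KeywordScraper Class and initialize it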

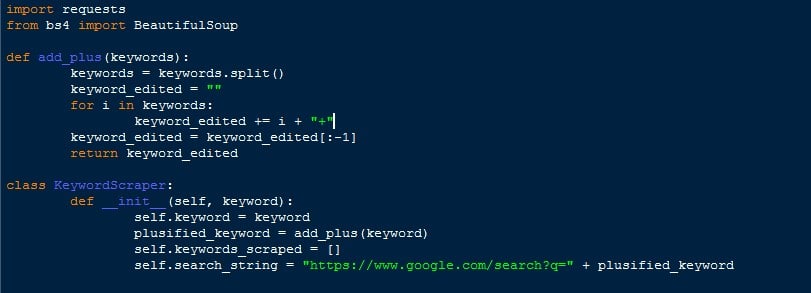

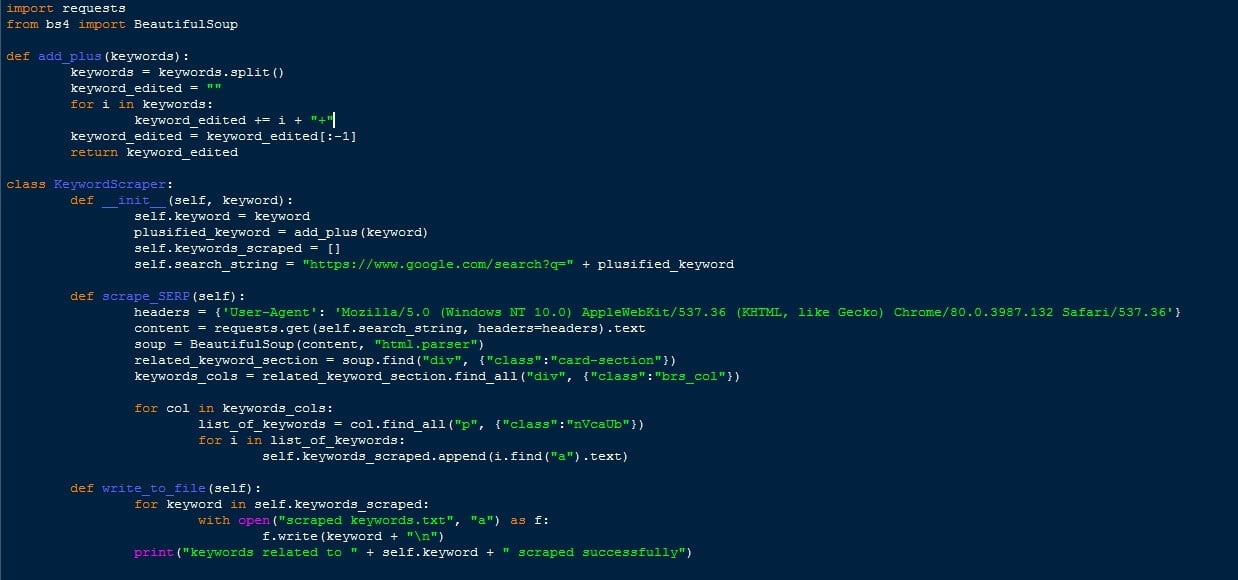

Create a KeywordScraper class whose constructor accepts only one parameter: the keyword. In its __init__ method, initialize the following variables.

```python
class KeywordScraper:
    def __init__(self, keyword):
        self.keyword = keyword                  # the search phrase as typed
        plusified_keyword = add_plus(keyword)   # e.g. "python+tutorials"
        self.keywords_scraped = []              # filled in by scrape_SERP()
        self.search_string = "https://www.google.com/search?q=" + plusified_keyword
```

- keyword – for storing the keyword to be searched

- plusified_keyword – for storing the keyword above, but with the spaces between words converted to plus signs (+). As you can see in the code, the add_plus helper function does the conversion. It is a local variable rather than an attribute because it is only needed to build the URL.

- keywords_scraped – a list for holding the scraped keywords, initialized as empty ([]).

- search_string – holds the Google Search URL for your keyword. Note how the “plusified” keyword is appended to form the full URL.
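The add_plus helper works for plain words, but it does not escape characters that have a meaning in URLs: "c++ tutorials" would become "c+++tutorials", and the server decodes those extra plus signs as spaces. Python's standard library already does this encoding in `urllib.parse`. Below is a minimal sketch of building the same search URL that way; the function name `build_search_url` is hypothetical, not part of the tutorial's script.

```python
from urllib.parse import quote_plus, urlencode

BASE_URL = "https://www.google.com/search"


def build_search_url(keyword):
    """Hypothetical alternative to add_plus(): build the search URL with urllib."""
    # urlencode() runs each value through quote_plus(): spaces become "+",
    # and reserved characters such as "+" or "&" are percent-encoded.
    return BASE_URL + "?" + urlencode({"q": keyword})


print(quote_plus("python tutorials"))        # python+tutorials
print(build_search_url("python tutorials"))  # https://www.google.com/search?q=python+tutorials
print(build_search_url("c++ tutorials"))     # https://www.google.com/search?q=c%2B%2B+tutorials
```

Inside `__init__`, `self.search_string = build_search_url(keyword)` could then replace the two lines that use add_plus.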

Step 5: Create Method for Scraping SERP within the KeywordScraper Class

The method in the class is named scrape_SERP. As you can see below, the first variable is a dictionary (dict) named headers. The string passed as the value for User-Agent is the user agent of my browser. This is very important: Google serves different versions of its pages depending on the user agent.

I tried running the same code in a mobile IDE without the user agent, and it failed because the HTML document delivered wasn't the same as the one I used when writing the parser. You can experiment with different headers to see which work with this code and which don't.

```python
    # This method goes inside the KeywordScraper class.
    def scrape_SERP(self):
        # A desktop browser's User-Agent, so Google returns the same HTML
        # structure that was inspected in Step 1.
        headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.132 Safari/537.36'}
        content = requests.get(self.search_string, headers=headers).text
        soup = BeautifulSoup(content, "html.parser")

        # Follow the path div.card-section -> div.brs_col -> p.nVcaUb -> a
        related_keyword_section = soup.find("div", {"class": "card-section"})
        keywords_cols = related_keyword_section.find_all("div", {"class": "brs_col"})
        for col in keywords_cols:
            list_of_keywords = col.find_all("p", {"class": "nVcaUb"})
            for i in list_of_keywords:
                self.keywords_scraped.append(i.find("a").text)
```

The content variable holds the whole HTML of the Google SERP for the keyword (for example, “python tutorials”) as a string. It was downloaded using the get method of the requests library; note the headers variable passed as an argument to requests.get(). At this point, the page has been downloaded and stored in the content variable. What remains is parsing.

BeautifulSoup is used for parsing the downloaded page. To learn how to use BeautifulSoup, visit the BeautifulSoup documentation website. Looking at the code, you will see that BeautifulSoup takes two parameters – content to be parsed and the parsing engine to be used. After initializing it, you can start searching for the required data.

As you can see, the code first searches for the related keyword container (a div element with the class card-section). It then searches for the two div elements with the class name brs_col, each representing a column housing 4 keywords.

The code then loops through the two div elements, searching for p elements with the class name nVcaUb. Each of these houses an anchor element (link) whose text is the keyword. Each keyword is then appended to the self.keywords_scraped list.
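Because scrape_SERP downloads and parses in one step, you can only test the parsing against Google itself. One option, sketched below, is to move the traversal into a standalone function (the name `parse_related_keywords` is hypothetical) that takes HTML you already have. It also shows one way to add the missing error handling: it returns an empty list when the card-section container is absent and skips paragraphs without a link, instead of crashing on `None`.

```python
from bs4 import BeautifulSoup


def parse_related_keywords(html):
    """Return the related keywords in `html`, or [] if the section is missing."""
    soup = BeautifulSoup(html, "html.parser")
    section = soup.find("div", {"class": "card-section"})
    if section is None:
        # No related-keywords block: the query has none, or the markup changed.
        return []
    keywords = []
    for col in section.find_all("div", {"class": "brs_col"}):
        for p in col.find_all("p", {"class": "nVcaUb"}):
            link = p.find("a")
            if link is not None:  # skip paragraphs that do not contain a link
                keywords.append(link.get_text(strip=True))
    return keywords


# Hypothetical markup: one column, one linked keyword, one paragraph without a link.
sample = (
    '<div class="card-section"><div class="brs_col">'
    '<p class="nVcaUb"><a href="#">related keyword 1</a></p>'
    '<p class="nVcaUb">no link here</p>'
    '</div></div>'
)
print(parse_related_keywords(sample))          # ['related keyword 1']
print(parse_related_keywords("<p>empty</p>"))  # []
```

scrape_SERP could then call `self.keywords_scraped = parse_related_keywords(content)` after downloading the page.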

Step 6: Create a File Writing Method

From the above, some will argue that you have successfully scraped the required data. But I would argue that the tutorial is not complete until you save the data in persistent storage. Which storage should you use? There are many options: a CSV file, or a database system such as SQLite or even MySQL. In this simple tutorial, we will save our data in a .txt file.

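```python
    # This method also goes inside the KeywordScraper class.
    def write_to_file(self):
        # "a" (append) mode creates the file if it does not exist and adds to
        # the end of it if it does. The file is opened once for all keywords.
        with open("scraped keywords.txt", "a", encoding="utf-8") as f:
            for keyword in self.keywords_scraped:
                f.write(keyword + "\n")  # one keyword per line
        print("keywords related to " + self.keyword + " scraped successfully")
```

Look at the method above: the code opens a file using the open function, passing “scraped keywords.txt” as the file name and “a” (append) as the mode. If this file does not exist, the script creates it; if it already exists, the new keywords are added to the end of it, each on a separate line.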
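If you would rather use SQLite, one of the options mentioned above, Python's built-in sqlite3 module is enough. The sketch below is one possible layout, not part of the original script: the function name `save_keywords`, the table name, and its columns are all hypothetical. Each row records the related keyword together with the search keyword that produced it.

```python
import sqlite3


def save_keywords(conn, search_keyword, keywords):
    """Insert one row per related keyword, tagged with the keyword that produced it."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS related_keywords ("
        "search_keyword TEXT NOT NULL, "
        "related_keyword TEXT NOT NULL)"
    )
    # "?" placeholders let sqlite3 handle quoting safely.
    conn.executemany(
        "INSERT INTO related_keywords (search_keyword, related_keyword) VALUES (?, ?)",
        [(search_keyword, kw) for kw in keywords],
    )
    conn.commit()


# ":memory:" keeps the demo self-contained; pass a file path such as
# "keywords.db" (a hypothetical name) to keep the data between runs.
conn = sqlite3.connect(":memory:")
save_keywords(conn, "python tutorials", ["related keyword 1", "related keyword 2"])
rows = conn.execute(
    "SELECT related_keyword FROM related_keywords ORDER BY rowid"
).fetchall()
print(rows)  # [('related keyword 1',), ('related keyword 2',)]
```

With the class from this tutorial, the call would be `save_keywords(conn, s.keyword, s.keywords_scraped)` after `s.scrape_SERP()`.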

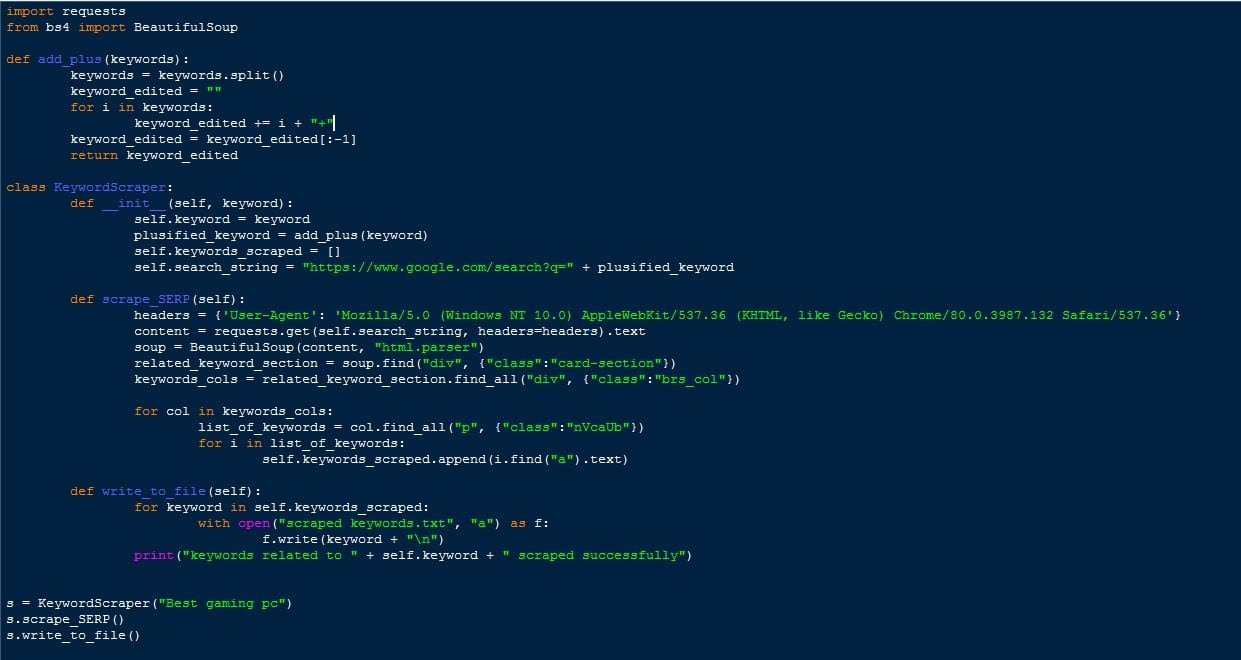

Step 7: Running the Code

To run the script, create an instance of the KeywordScraper class. I named the variable “s” and passed the keyword “Best gaming pc” as an argument. You can pass any meaningful keyword, such as “python tutorials”, and you will get the related keywords scraped for it.

```python
s = KeywordScraper("Best gaming pc")
s.scrape_SERP()
s.write_to_file()
```

After creating an instance of the class, call the scrape_SERP method and then the write_to_file method. With that, you are done writing the code, and it is time to run it. If everything goes well, check the current working directory (usually the folder where your script is located) and you will see a new text file named “scraped keywords.txt.” Open the file, and you will see the keywords scraped for you.


How to Improve this Web Scraper

No doubt, this web scraper is not like the dummy scrapers you see in most tutorials; it can actually be useful for SEO. However, there's a lot of room for improvement.

As I stated earlier, it does not handle exceptions. This should be the first improvement you make, so that it handles errors such as a keyword having no related keywords to scrape. You can go further and scrape related questions in addition to related keywords. Making the web scraper fetch several pages concurrently will also make it better.
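Both improvements can be sketched together with the standard library's `concurrent.futures`: each keyword is scraped in a worker thread, and an exception for one keyword is recorded instead of crashing the whole run. The function names and the fake scraper below are hypothetical stand-ins so the sketch runs offline. Keep `max_workers` small, because more parallel requests also make it easier for Google to notice the bot.

```python
from concurrent.futures import ThreadPoolExecutor


def scrape_many(keywords, scrape_one, max_workers=4):
    """Call scrape_one(keyword) for every keyword using a pool of threads.

    Returns (results, errors): results maps each keyword to what scrape_one
    returned; errors maps each failed keyword to the exception it raised.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(scrape_one, kw): kw for kw in keywords}
        for future, kw in futures.items():
            try:
                results[kw] = future.result()
            except Exception as exc:  # one bad keyword should not stop the others
                errors[kw] = exc
    return results, errors


# Hypothetical stand-in for the real scraper. With this tutorial's class,
# scrape_one could create KeywordScraper(keyword), call scrape_SERP(),
# and return its keywords_scraped list.
def fake_scrape(keyword):
    if keyword == "no related keywords":
        raise AttributeError("related keyword section not found")
    return [keyword + " 1", keyword + " 2"]


results, errors = scrape_many(["python tutorials", "no related keywords"], fake_scrape)
print(results)  # {'python tutorials': ['python tutorials 1', 'python tutorials 2']}
print({kw: type(exc).__name__ for kw, exc in errors.items()})
# {'no related keywords': 'AttributeError'}
```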

The truth is, you cannot use this tool to scrape thousands of keywords, as Google will detect that you are using a bot and block you. To reduce the chance of being blocked, you should extend the bot to use proxies.
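Requests accepts a `proxies` argument, a dictionary mapping a URL scheme to a proxy URL. A minimal sketch of rotating through several proxies in round-robin order, plus a random pause between requests, might look like this. The class and function names, the delay range, and the proxy URLs are all hypothetical placeholders; your proxy provider documents the real endpoint format.

```python
import itertools
import random
import time


class ProxyRotator:
    """Hand out proxy settings in round-robin order, one set per request."""

    def __init__(self, proxy_urls):
        self._cycle = itertools.cycle(proxy_urls)

    def next_proxies(self):
        # requests' `proxies` argument maps a URL scheme to a proxy URL.
        proxy = next(self._cycle)
        return {"http": proxy, "https": proxy}


def random_pause(min_seconds=2.0, max_seconds=5.0):
    """Sleep for a random interval so requests do not arrive at a fixed rate."""
    time.sleep(random.uniform(min_seconds, max_seconds))


# Hypothetical endpoints; use the proxy URLs your provider gives you.
rotator = ProxyRotator([
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
])

# Inside scrape_SERP, the request could then become:
# content = requests.get(self.search_string, headers=headers,
#                        proxies=rotator.next_proxies(), timeout=10).text
# with random_pause() called before scraping the next keyword.
```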

For Google, I recommend residential proxies from providers such as Luminati, Smartproxy, or Stormproxies. I also recommend setting up an alert or logging system that notifies you when the structure of the page has changed and, as a result, the code no longer works as expected. This is important because Google changes the structure of its pages every now and then.
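A simple starting point for such an alert uses Python's logging module: after each scrape, check how many keywords came back and log a warning when the number is suspiciously low, since an empty result is the usual symptom of changed markup. The function name `check_scrape` and the threshold are hypothetical; you could point a logging handler at email or a chat webhook later. This check only helps once the scraper no longer crashes on missing elements.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
logger = logging.getLogger("keyword_scraper")


def check_scrape(keyword, keywords_scraped, expected_min=1):
    """Log a warning and return False if fewer keywords than expected were found."""
    if len(keywords_scraped) < expected_min:
        logger.warning(
            "Only %d related keywords found for %r; the SERP layout may have changed.",
            len(keywords_scraped), keyword,
        )
        return False
    logger.info("Scraped %d related keywords for %r.", len(keywords_scraped), keyword)
    return True


# Example: after s.scrape_SERP(), call
# check_scrape(s.keyword, s.keywords_scraped)
check_scrape("python tutorials", [])  # logs a WARNING, returns False
```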


Conclusion

Building a simple web scraper isn't a difficult task, because you probably have only one website to scrape and the webpage is structured. It also does not require multithreading, and you do not have to worry about request limits if you aren't going to send a huge number of requests per minute.

The main problem comes when you are developing a complex web scraper. Even then, with proper planning and learning, the problems can be overcome.