Are you looking to download images from web pages using Python? Python's straightforward syntax and its libraries make the process easy. Read on to learn how to use Python to scrape images online.

We are in a time when data has become more important than ever, and the demand for it will only grow. The Internet has proven to be one of the largest sources of data, ranging from text to downloadable files, including images.

Many tutorials on the Internet focus on how to scrape text and neglect how to scrape images and other downloadable files. This is understandable: most guides are not in-depth, and few web scrapers are interested in images, since most work with text data. If you are one of the few interested in scraping images, this guide has been written for you.

Image Scraping – It is Easier than you Think

Many beginners think image scraping is different from regular web scraping. In reality, it is essentially the same, with only small differences. Unless you are dealing with very large image files, all you need is your web scraping and file handling knowledge.

Your web scraping skills will help you scrape the image links if you do not already have them. Once you have the links, all that is required is to send an HTTP request to each link to download the image and then write its content to a file.

While it is easy, a step-by-step guide will help you better understand how to get it done. For this, we will work on a project, and by the end of it you will have an idea of what it takes to scrape images from web pages.

Project Idea One: Scraping Images from Static Sites

Some of the easiest websites to scrape images from are static sites. This is because when you send a web request to a server requesting static pages, all of the components are returned to you as a response, and all you need to do is to scrape the links and then start sending HTTP requests to each of the links.

For dynamic pages that rely on JavaScript to render images and other content, you will need a different approach to scrape their images.

To show you how to scrape images from a static page, we will build a generic image scraping tool that downloads all the images on a given page. The script takes the page URL from its url variable and saves the images into the folder you run it from.

Requirements for Scraping Static Pages using Python

Python makes scraping easy and straightforward. There are plenty of tools for scraping images, and your choice will depend on your use case, target site, and personal preference. For this guide, you will need the following.

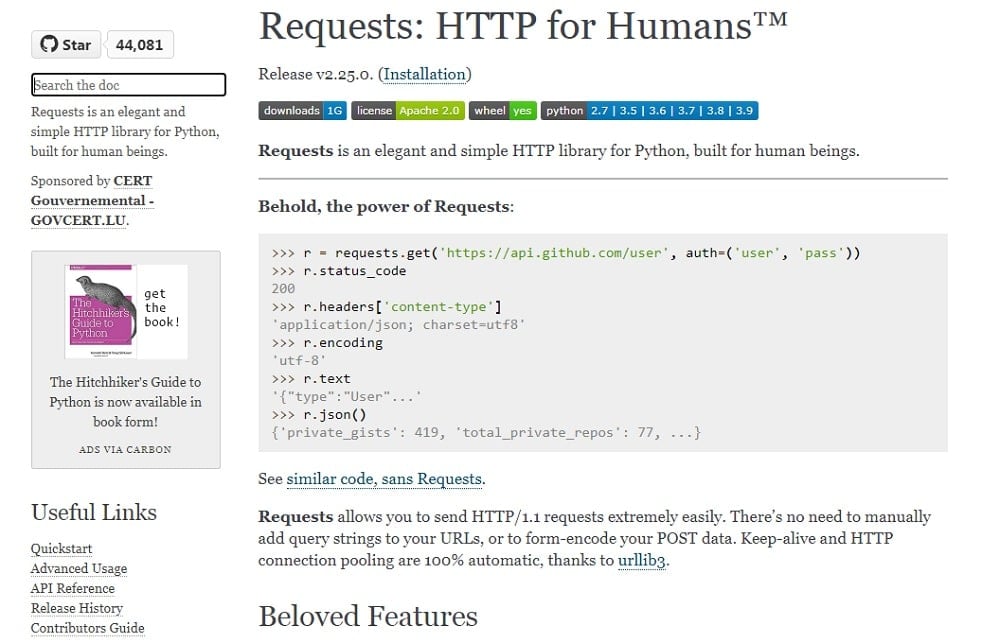

Requests is an elegant Python library for making HTTP requests, billed as "HTTP for Humans." As a web scraper, Requests is one of the tools you should be conversant with. While you can use urllib, which comes bundled with the standard library, Requests makes a lot of things simpler.

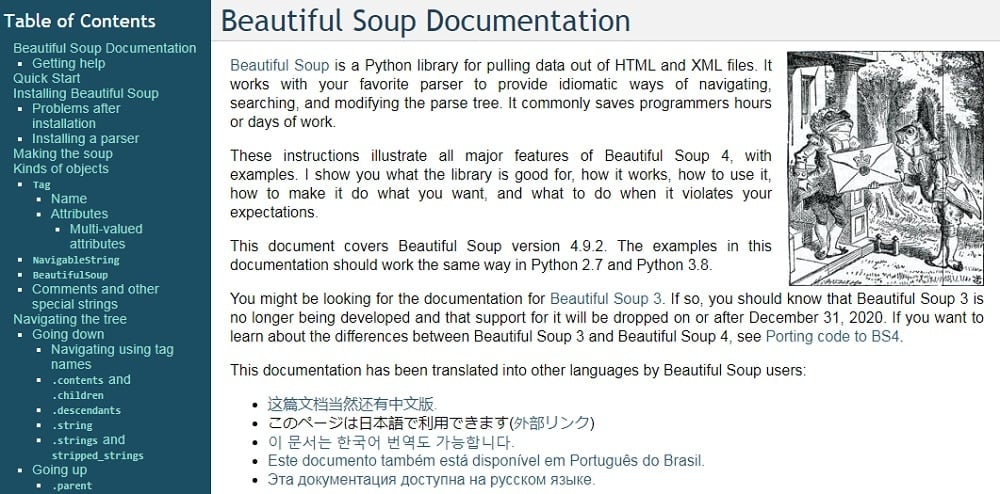

Parsing is one of the key aspects of web scraping, and this can either be difficult or easy, depending on how a page has been structured. With BeautifulSoup, a parsing library for Python, parsing becomes easy.
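To see what BeautifulSoup's parsing looks like before pointing it at a live site, here is a minimal sketch that runs on a made-up HTML fragment (the file names and cdn.example.com are placeholders). It shows find_all("img") and the tag's get() method, which returns None for a missing attribute instead of raising an error.

```python
from bs4 import BeautifulSoup

# A small, made-up HTML fragment standing in for a downloaded page
html = """
<html><body>
  <img src="/img/logo.png" alt="Logo">
  <img src="https://cdn.example.com/photo.jpg">
  <img alt="No src attribute">
</body></html>
"""

soup = BeautifulSoup(html, "html.parser")

# find_all("img") returns every <img> tag; .get() returns None
# instead of raising KeyError when an attribute is missing
for img in soup.find_all("img"):
    print(img.get("src"), "|", img.get("alt"))
```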


File Handling

Scraping images requires you to know how to handle files. Interestingly, we do not need any special library like the Python Imaging Library (PIL) since all we do is save images.
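As a sketch of how far plain file handling goes, the snippet below writes raw image bytes in binary mode and picks an extension by checking the first few "magic" bytes of the data, with no imaging library involved. The function names guess_extension and save_image are hypothetical, and only JPEG, PNG, GIF, and WebP are recognized; anything else, including text-based SVG, falls back to .bin in this sketch.

```python
from pathlib import Path

# Leading "magic" bytes of a few common image formats
SIGNATURES = {
    b"\xff\xd8\xff": ".jpg",
    b"\x89PNG\r\n\x1a\n": ".png",
    b"GIF87a": ".gif",
    b"GIF89a": ".gif",
}


def guess_extension(data: bytes) -> str:
    """Guess a file extension from the first bytes of an image; fall back to .bin."""
    for signature, ext in SIGNATURES.items():
        if data.startswith(signature):
            return ext
    # WebP files start with "RIFF", four size bytes, then "WEBP"
    if data[:4] == b"RIFF" and data[8:12] == b"WEBP":
        return ".webp"
    return ".bin"


def save_image(data: bytes, stem: str, folder: str = ".") -> Path:
    """Write raw image bytes to <folder>/<stem><ext> and return the path."""
    path = Path(folder) / (stem + guess_extension(data))
    path.write_bytes(data)  # binary write; nothing is decoded or converted
    return path
```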

Coding Steps for Scraping Images

With the above requirements, you are set to start scraping images from web pages. If you have not installed Requests and BeautifulSoup, you will need to install them, as they are third-party libraries not bundled with the Python standard library. You can install them with pip using the commands below.

```shell
pip install requests
pip install beautifulsoup4
```

Now to the coding proper.


Import Required Libraries

The first step is importing the required libraries, which include Requests and BeautifulSoup.

```python
from urllib.parse import urlparse

import requests
from bs4 import BeautifulSoup
```

From the above, you can see that the urlparse function from Python's built-in urllib.parse module was also imported. We need it to parse the domain out of the URL so that we can prepend it to image URLs that are relative.
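For reference, here is what urlparse returns for the example URL used in this guide. This sketch also builds the base from the parsed scheme rather than a hard-coded "https://", which keeps plain-HTTP pages working; /img/logo.png is a placeholder path.

```python
from urllib.parse import urlparse

url = "https://ripple.com/xrp"
parts = urlparse(url)

print(parts.scheme)  # https
print(parts.netloc)  # ripple.com (the "domain" used in the scraper)
print(parts.path)    # /xrp

# Build the base from the parsed scheme instead of hard-coding "https://"
base = f"{parts.scheme}://{parts.netloc}"
print(base + "/img/logo.png")  # https://ripple.com/img/logo.png
```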


Scrape Links of the Images on a page

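```python
url = "https://ripple.com/xrp"
domain = urlparse(url).netloc

# Fetch the page and parse its HTML
req = requests.get(url)
soup = BeautifulSoup(req.text, "html.parser")

# Collect every <img> tag on the page
raw_links = soup.find_all("img")

links = []
for i in raw_links:
    link = i.get("src")
    if not link:
        continue  # skip <img> tags without a src attribute
    if link.startswith("http"):
        # Absolute URL: use it as-is
        links.append(link)
    else:
        # Relative URL: prepend the domain (assumes a root-relative path such as /img/logo.png)
        modified_link = "https://" + domain + link
        links.append(modified_link)
```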

Looking at the code above, you will notice that it carries out three tasks: it sends a request, parses the image URLs, and saves them in the links list. You can change the url variable to any URL of your choice.

Requests sends the HTTP request for the page, and BeautifulSoup then parses the returned HTML so that find_all("img") can collect every image tag.

If you look at the loop, you will see that image URLs that are already absolute (starting with http) are added to the links list as they are. Relative URLs need further processing, which the else branch handles by prepending the scheme and the domain name.
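Prepending the domain only works for root-relative paths such as /img/logo.png. Pages also use protocol-relative (//cdn...) and path-relative (img/c.png) sources, for which plain concatenation produces broken URLs like https://ripple.comimg/c.png. The standard library's urljoin resolves all of these against the page URL; the sketch below swaps it in as an alternative to string concatenation (the src values are made up).

```python
from urllib.parse import urljoin

page_url = "https://ripple.com/xrp"

# Made-up src values covering the common forms found in real pages
examples = [
    "https://cdn.example.com/a.png",  # absolute: returned unchanged
    "/img/logo.png",                  # root-relative: resolved against the domain
    "//cdn.example.com/b.png",        # protocol-relative: takes the page's scheme
    "img/c.png",                      # path-relative: resolved against the page's path
]

for src in examples:
    print(urljoin(page_url, src))
# https://cdn.example.com/a.png
# https://ripple.com/img/logo.png
# https://cdn.example.com/b.png
# https://ripple.com/img/c.png
```

In the scraping loop, `links.append(urljoin(url, link))` could then replace both branches of the if/else.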


Download and Save Images

```python
for x, link in enumerate(links):
    # Download the raw bytes of the image
    downloaded_image = requests.get(link).content
    # Write them to a numbered file in binary mode
    with open(str(x) + ".jpg", "wb") as f:
        f.write(downloaded_image)

print("Images scraped successfully... check the current working directory for your images")
```

All we did above is loop through the list of image URLs and download the content of each image using Requests. With the content at hand, we then create a JPG file for each image and write the content into it. It is as simple as that. Note that the .jpg extension does not convert anything: a PNG or SVG saved this way keeps its original format. For the naming, I used numbers to represent each of the images.

I did this to keep the script simple, as a proof of concept. You could use each image's alt value instead, but bear in mind that some images have no alt text, so you will need a fallback naming scheme for those.
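If you want to try the alt-text approach, here is a minimal sketch of one possible naming formula. make_filename is a hypothetical helper: it turns the alt text into a lowercase, dash-separated slug, prefixes the image's index so two images with the same alt text do not overwrite each other, and falls back to the bare index when alt is missing or has no usable characters (non-ASCII letters are dropped in this simple version).

```python
import re
from typing import Optional


def make_filename(alt: Optional[str], index: int, ext: str = ".jpg", max_len: int = 50) -> str:
    """Build a filename such as '3-xrp-logo.jpg' from alt text, or '3.jpg' without it."""
    if alt:
        # Lowercase, collapse every run of non-alphanumeric characters into "-",
        # cut to max_len characters, then trim dashes from both ends
        slug = re.sub(r"[^a-z0-9]+", "-", alt.lower())[:max_len].strip("-")
        if slug:
            # Prefix the index so identical alt texts do not overwrite each other
            return f"{index}-{slug}{ext}"
    # No alt text, or nothing usable left after cleaning
    return f"{index}{ext}"
```

If you keep each `<img>` tag alongside its URL, you could name the file with `make_filename(i.get("alt"), x)`.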


Full Code

```python
from urllib.parse import urlparse

import requests
from bs4 import BeautifulSoup

url = "https://ripple.com/xrp"
domain = urlparse(url).netloc

req = requests.get(url)
soup = BeautifulSoup(req.text, "html.parser")
raw_links = soup.find_all("img")

links = []
for i in raw_links:
    link = i.get("src")
    if not link:
        continue  # skip <img> tags without a src attribute
    if link.startswith("http"):
        links.append(link)
    else:
        # Assumes a root-relative path such as /img/logo.png
        modified_link = "https://" + domain + link
        links.append(modified_link)

# write images to files
for x, link in enumerate(links):
    downloaded_image = requests.get(link).content
    with open(str(x) + ".jpg", "wb") as f:
        f.write(downloaded_image)

print("Images scraped successfully... check the current working directory for your images")
```
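The script above hard-codes its target in the url variable. Below is a sketch of the same approach reorganized into reusable functions, so the page URL and output folder become parameters. It also swaps in urljoin for the manual domain prefix, skips data: URIs and missing src attributes, adds timeouts, and skips images that come back with an HTTP error status. The function names and the 30-second timeout are my own choices, not part of the original script.

```python
from pathlib import Path
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup


def collect_image_urls(html: str, page_url: str) -> list[str]:
    """Return absolute URLs for every <img> tag that has a usable src attribute."""
    soup = BeautifulSoup(html, "html.parser")
    urls = []
    for img in soup.find_all("img"):
        src = img.get("src")
        # Skip tags with no src and inline data: URIs, which are not downloadable links
        if not src or src.startswith("data:"):
            continue
        # urljoin handles absolute, root-relative, protocol-relative and path-relative src values
        urls.append(urljoin(page_url, src))
    return urls


def scrape_images(page_url: str, out_dir: str = ".") -> int:
    """Download every image on page_url into out_dir and return how many were saved."""
    response = requests.get(page_url, timeout=30)
    response.raise_for_status()

    folder = Path(out_dir)
    folder.mkdir(parents=True, exist_ok=True)

    saved = 0
    for index, image_url in enumerate(collect_image_urls(response.text, page_url)):
        image = requests.get(image_url, timeout=30)
        if not image.ok:
            continue  # skip images that return an HTTP error status
        # Same numbered .jpg naming as the original script
        (folder / f"{index}.jpg").write_bytes(image.content)
        saved += 1
    return saved
```

For example, `scrape_images("https://ripple.com/xrp", "images")` would save the page's images into an images folder and return how many were saved.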

Project Idea Two: Using Selenium for Image Scraping

Not all sites are static. Many modern websites are interactive and JavaScript-rich. On these sites, not all of a page's content is loaded by the initial HTTP request; much of it is loaded later by JavaScript.

For sites like this, Requests and BeautifulSoup alone are not enough, because they only see the HTML the server returns and cannot run JavaScript. Selenium is the tool for the job.

Selenium is a browser automation tool that was initially developed for testing web applications but is also used for web scraping and general web automation. With Selenium, a real browser is launched, pages are loaded, and JavaScript runs as it would for a normal visitor, so all content becomes available. I will show you how to scrape images from Google using Selenium.


Selenium Requirements and Setup

For Selenium to work, you need to install the Selenium package and download the driver for the browser you want to use. In this guide, we will use Chrome. To install Selenium, run the command below.

```shell
pip install selenium
```

With Selenium installed, visit the Chrome download page and install Chrome if it is not already on your system. You also need to download the ChromeDriver application.

Visit this page to download the driver for your Chrome browser version. The download is a zip file with the chromedriver.exe file inside (on Windows). Extract chromedriver.exe into the folder of your Selenium project, and in the same folder, create a new Python file named SeleImage.py. (Selenium 4.6 and later can also download a matching driver automatically through Selenium Manager.)

Coding Steps for Scraping Images using Selenium

I will walk you through a step-by-step guide on how to code a Google Images scraper using Selenium and Python.


Import Required Libraries

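```python
# requests downloads the image files; By selects the locator strategy (Selenium 4+)
import requests
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By
```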

The webdriver module is the main entry point we will use from the Selenium package in this guide. The Options class is for setting browser options, including running Chrome in headless mode (without a visible window).


Request for Google Search Homepage and Enter Image Keyword to Search

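```python
keyword = "Selenium Guide"

driver = webdriver.Chrome()
# Wait up to 10 seconds for elements to appear before giving up
driver.implicitly_wait(10)

driver.get("https://www.google.com/")

# Selenium 4 replaced find_element_by_name() with find_element(By.NAME, ...)
driver.find_element(By.NAME, "q").send_keys(keyword)
driver.find_element(By.NAME, "btnK").submit()
```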

The code above is self-explanatory for any Python coder. The keyword variable holds the search term we want to download images for, webdriver.Chrome() launches Chrome for the automation task, and driver.get() requests the Google homepage.

Locating the element by its name attribute gave us the search input named "q". The send_keys() method typed the keyword into it, and the last line submitted the query. If you run the code, you will see Chrome launch in automation mode, fill in the search form, and take you to the results page.


Switch to Images and Download the First Two Images

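```python
# Click the Images tab; this class name reflects Google's markup when the
# guide was written and may need updating
driver.find_elements(By.CLASS_NAME, "hide-focus-ring")[1].click()

# Take the first two <img> elements on the results page
images = driver.find_elements(By.TAG_NAME, "img")[0:2]

for x, image in enumerate(images):
    src = image.get_attribute("src")
    # Requests can only fetch http(s) URLs; skip empty or inline data: sources
    if not src or not src.startswith("http"):
        continue
    downloaded_image = requests.get(src).content
    with open(str(x) + ".jpg", "wb") as f:
        f.write(downloaded_image)
```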

The code above is also straightforward. The click() call locates the Images tab and clicks it, switching from all results to image results only. The find_elements() call then keeps only the first two images, and the for loop downloads them.
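The loop above can only download images whose src is an http(s) URL. Some pages, possibly including Google's results, embed thumbnails inline as data: URIs, which Requests cannot fetch. The hypothetical helper below handles both cases: it decodes data: URIs (base64 or percent-encoded, per RFC 2397) with the standard library and falls back to Requests for web URLs.

```python
import base64
from urllib.parse import unquote_to_bytes

import requests


def image_bytes_from_src(src: str) -> bytes:
    """Return raw image bytes for an <img> src that is a data: URI or an http(s) URL."""
    if src.startswith("data:"):
        # data:[<mediatype>][;base64],<data>  (RFC 2397)
        header, _, payload = src.partition(",")
        if header.endswith(";base64"):
            return base64.b64decode(payload)
        # Without ;base64 the payload is percent-encoded
        return unquote_to_bytes(payload)
    # Anything else is treated as a normal web URL and fetched with Requests
    response = requests.get(src, timeout=30)
    response.raise_for_status()
    return response.content
```

Inside the loop, `image_bytes_from_src(src)` could replace the `requests.get(src).content` call once the http-only check is relaxed to `if not src`.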


Full Code

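```python
import requests
from selenium import webdriver
from selenium.webdriver.common.by import By

keyword = "Selenium Guide"

driver = webdriver.Chrome()
driver.implicitly_wait(10)  # wait up to 10 s for elements to appear
driver.get("https://www.google.com/")

driver.find_element(By.NAME, "q").send_keys(keyword)
driver.find_element(By.NAME, "btnK").submit()

# Switch to the Images tab (the class name may change as Google updates its markup)
driver.find_elements(By.CLASS_NAME, "hide-focus-ring")[1].click()

images = driver.find_elements(By.TAG_NAME, "img")[0:2]

for x, image in enumerate(images):
    src = image.get_attribute("src")
    if not src or not src.startswith("http"):
        continue  # skip empty or inline data: sources
    downloaded_image = requests.get(src).content
    with open(str(x) + ".jpg", "wb") as f:
        f.write(downloaded_image)

driver.quit()  # close the browser when done
```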

The Legalities of Scraping Images from the Web

In the past, there was no clear-cut judgment on whether web scraping is legal. More recently, a court ruled in favor of the legality of web scraping, provided you are not scraping data behind an authentication wall, breaking any rules, or adversely affecting your target sites.

Another issue that could make web scraping illegal is copyright: many images on the Internet are copyrighted, and scraping them could land you in trouble. I am not a lawyer, and you shouldn't take what I say as legal advice. I advise you to consult a lawyer on the legalities of scraping publicly available images on the Internet.

Conclusion

From the above, you have seen how easy it is to scrape publicly available images from the Internet. The process is straightforward, provided you are not dealing with large image files that require streaming.
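For large files, a minimal streaming sketch with Requests looks like this: stream=True keeps the body from being loaded into memory at once, and iter_content() hands it over in chunks that are written straight to disk. download_large_file and the 64 KB default chunk size are illustrative choices, not part of the scripts above.

```python
import requests


def download_large_file(url: str, path: str, chunk_size: int = 64 * 1024) -> int:
    """Stream url to path chunk by chunk and return the number of bytes written."""
    written = 0
    # stream=True: the body is downloaded only as we iterate over it,
    # so the whole file never has to sit in memory at once
    with requests.get(url, stream=True, timeout=30) as response:
        response.raise_for_status()
        with open(path, "wb") as f:
            for chunk in response.iter_content(chunk_size=chunk_size):
                if chunk:  # ignore empty keep-alive chunks
                    f.write(chunk)
                    written += len(chunk)
    return written
```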

Another issue you are likely to face is anti-scraping techniques designed to make it difficult to scrape web data. You also have to take the legalities into consideration, and I advise you to seek the opinion of an experienced lawyer in this regard.
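One way to reduce friction with target sites is to respect the crawling preferences they publish in robots.txt and to pace your requests. The sketch below uses the standard library's urllib.robotparser on robots.txt text you have already downloaded (RobotFileParser can also fetch the file itself via set_url() and read()). load_rules, polite_delay, the MyImageScraper user-agent name, the sample robots.txt, and the one-second default delay are assumptions for illustration.

```python
from urllib import robotparser


def load_rules(robots_txt: str) -> robotparser.RobotFileParser:
    """Parse the text of a robots.txt file that has already been downloaded."""
    rules = robotparser.RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def polite_delay(rules: robotparser.RobotFileParser, user_agent: str, default: float = 1.0) -> float:
    """Seconds to wait between requests: the site's Crawl-delay if it sets one, else default."""
    delay = rules.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


# Example with a made-up robots.txt and a hypothetical user-agent name
robots_txt = "User-agent: *\nDisallow: /private/\nCrawl-delay: 2\n"
rules = load_rules(robots_txt)
agent = "MyImageScraper"

print(rules.can_fetch(agent, "https://example.com/img/a.png"))      # True
print(rules.can_fetch(agent, "https://example.com/private/b.png"))  # False
print(polite_delay(rules, agent))                                   # 2.0
```

In a download loop, you might check `rules.can_fetch(agent, image_url)` before each request and call `time.sleep(polite_delay(rules, agent))` between requests.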