Are you looking for open-source web scrapers to use for your next web scraping project? On this page, we list some of the best open-source web scrapers in the market.

Web scraping is the automated extraction of data from web pages using computer programs. It is an important way of gathering data available online, and as you already know, the Internet is an enormous source of data. As a programmer, you can develop web scrapers from scratch, but that is a lot of work, and unless you are experienced, you are likely to end up with a buggy web scraper that is hard to upgrade and scale.
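To make the definition concrete, here is a minimal sketch of the "extract" step using only Python's standard library. It parses a hard-coded HTML snippet instead of downloading a live page. The markup (an `h2` with `class="title"`) and the names `ProductTitleParser` and `extract_titles` are hypothetical. A real scraper would also need to fetch pages, follow links, and cope with messy HTML, and that is exactly the work the libraries below take off your hands.

```python
from html.parser import HTMLParser


class ProductTitleParser(HTMLParser):
    """Collect the text of every <h2 class="title"> element (hypothetical markup)."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs
        if tag == "h2" and ("class", "title") in attrs:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_title = False

    def handle_data(self, data):
        # Keep non-empty text that appears inside a matching <h2>
        if self._in_title and data.strip():
            self.titles.append(data.strip())


def extract_titles(html):
    parser = ProductTitleParser()
    parser.feed(html)
    parser.close()
    return parser.titles


SAMPLE_PAGE = """
<html><body>
  <h2 class="title">Blue Widget</h2><p>$10</p>
  <h2 class="title">Red Widget</h2><p>$12</p>
  <h2>Not a product</h2>
</body></html>
"""

print(extract_titles(SAMPLE_PAGE))  # ['Blue Widget', 'Red Widget']
```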

What, then, is the best option for you? My advice is to use web scraping libraries and frameworks that make the development of web scrapers easy. Not only does this mean you do not have to reinvent the wheel, it also saves you development time.

One thing you will come to like about open-source web scraping libraries and frameworks is that they are free to use. I have used a good number of them across multiple programming languages to speed up development and keep my code clean and easy to understand.

In this article, I will discuss some of the best open-source web scrapers out there.

Scrapy (Python)

The Scrapy web scraping framework is arguably the most popular framework you can use to develop scalable, high-performing web scrapers. This is because it is the number one framework for developing scrapers and crawlers in Python, and Python is arguably the most popular programming language among web scraper developers.

Scrapy is completely open source and maintained by Scrapinghub (now Zyte), a popular name in the web scraping industry. Scrapy is fast, powerful, and easy to extend with new functionality. One thing you will come to like about it is that it is a complete framework that comes with both an HTTP client and parsing tools.

Pyspider (Python)

The Pyspider framework is another framework that you can use to develop scalable web scrapers. From the name, you can tell that it is also a Python-based tool. This framework was initially developed for writing web crawlers, but you can adapt it for coding powerful web scrapers.

This tool comes with a WebUI script editor, project manager, task monitor, and result viewer, among other features. Pyspider supports a good number of databases. It is based on a distributed architecture and can crawl JavaScript pages, a feature the Scrapy framework lacks out of the box.

Heritrix (Java)

Unlike the other tools described above, Heritrix is a complete crawler that you can use to crawl the Internet. It was developed by the Internet Archive for web archiving and is written in Java.

Unlike the tools above, which give you the liberty of ignoring robots.txt directives, Heritrix has been designed to respect them. Like the others, it is completely free to use. It is open-source software, and you can contribute to it too. It is battle-tested for collecting large amounts of data, so you should not run into performance problems using it.
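If you build your own scraper, respecting robots.txt is straightforward in Python with the standard library's `urllib.robotparser`. The sketch below parses a hypothetical robots.txt held in a string and checks whether two URLs may be fetched. A real crawler would first download `/robots.txt` from the target site. `MyScraper/1.0` is a made-up user agent, and `build_robot_rules` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; normally fetched from https://<site>/robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_robot_rules(robots_txt):
    rules = RobotFileParser()
    # parse() takes an iterable of lines and marks the rules as loaded
    rules.parse(robots_txt.splitlines())
    return rules


rules = build_robot_rules(ROBOTS_TXT)
for url in ("https://example.com/catalog", "https://example.com/private/report"):
    print(url, rules.can_fetch("MyScraper/1.0", url))
print(rules.crawl_delay("MyScraper/1.0"))  # 5
```

A polite crawler would call `can_fetch` before every request and sleep for the crawl delay between requests to the same site.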

Web-Harvest (Java)

The Web-Harvest library is a web extraction tool written in Java for developers who want to build web scrapers that collect data from web pages. It is a complete tool: it comes with an API for sending web requests and downloading web pages, as well as support for parsing content from the downloaded documents (HTML documents).

This tool comes with support for file handling, looping, HTML and XML handling, conditional operations, exception handling, and variable manipulation. It is open source and well suited to writing Java-based web scrapers.

MechanicalSoup

The MechanicalSoup library is another Python-based tool for writing web scrapers. It is designed for automating interactions with websites, which makes it well suited to web scraping. Its major drawback is that it does not support JavaScript, so it is not suitable for scraping JavaScript-rich websites.

If you have used the duo of Requests and BeautifulSoup before, you will find MechanicalSoup easy to use, as it is built on top of them and mimics their simple APIs. Its documentation is easy to understand, which makes it easy to get started with the tool.
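A typical task MechanicalSoup automates is filling in and submitting a form, such as a login form. Its `StatefulBrowser` class handles this for you. To show what happens underneath, here is a standard-library sketch that reads the form's action URL and input fields (including a hidden token), overrides the fields you supply, and builds the URL-encoded request body. The page, field names, and credentials are hypothetical, `FormParser` and `fill_form` are made-up names, and the sketch stops before actually sending the POST request.

```python
from html.parser import HTMLParser
from urllib.parse import urlencode, urljoin


class FormParser(HTMLParser):
    """Collect the action URL and named <input> fields of the first <form>."""

    def __init__(self):
        super().__init__()
        self.action = None
        self.fields = {}
        self._in_form = False
        self._done = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "form" and not self._done:
            self._in_form = True
            self.action = attrs.get("action", "")
        elif tag == "input" and self._in_form and "name" in attrs:
            # Hidden inputs (e.g. CSRF tokens) keep their pre-filled value
            self.fields[attrs["name"]] = attrs.get("value") or ""

    def handle_endtag(self, tag):
        if tag == "form" and self._in_form:
            self._in_form = False
            self._done = True


def fill_form(page_url, html, **values):
    parser = FormParser()
    parser.feed(html)
    fields = dict(parser.fields)
    fields.update(values)  # user-supplied values override defaults
    return urljoin(page_url, parser.action), urlencode(fields)


LOGIN_PAGE = """
<form action="/session" method="post">
  <input type="hidden" name="csrf" value="t0k3n">
  <input type="text" name="username">
  <input type="password" name="password">
</form>
"""

url, body = fill_form("https://example.com/login", LOGIN_PAGE,
                      username="alice", password="s3cret")
print(url)   # https://example.com/session
print(body)  # csrf=t0k3n&username=alice&password=s3cret
```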

Apify SDK

The Apify SDK is a highly scalable web scraping and crawling library for the Node.js platform. JavaScript is the language of the web, so having a scraping library for it makes a lot of sense, and the Apify SDK fills that gap.

This library builds on popular tools like Playwright, Puppeteer, and Cheerio to deliver large-scale, high-performance scraping and crawling of any website. It is not just a web scraper; it is a full-fledged automation tool that you can use to automate your actions on the Internet. You can run it on the Apify platform or integrate it into your own code. It is powerful and easy to use.

Apache Nutch

Apache Nutch is a high-performing web crawler you can integrate into your project. If you are looking for a crawler that gets updated regularly, Apache Nutch is a great choice. It is production-ready, has been around for a long time, and can be considered mature.

Oregon State University, for example, converted its search infrastructure from Google to Nutch. What makes this crawler stand out is that it comes from the Apache Software Foundation. It is completely free to use and open source.

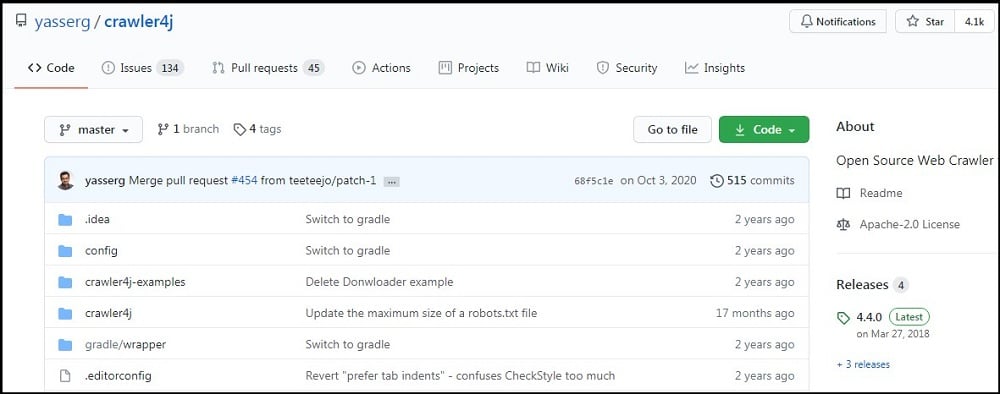

Crawler4j

Crawler4j is an open-source web crawler for Java with a simple interface for crawling the web. All you need to do is extend its WebCrawler class, which decides which URLs should be crawled and handles each downloaded page. The project provides an easy-to-understand guide on how to use the library, which you can find on GitHub. Because it is open source, you can contribute to it if you feel the code base needs changes.
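Crawler4j itself is written in Java, where you override the `shouldVisit` and `visit` methods of `WebCrawler`. To show the shape of that design, here is a rough Python analogue. A base class owns the crawl loop, and a subclass only decides which links to follow and what to do with each page. The class and method names, the regex link extractor, and the in-memory `FAKE_WEB` dictionary (standing in for real HTTP downloads) are all hypothetical.

```python
import re
from collections import deque

# Hypothetical in-memory "web" so the sketch runs offline
FAKE_WEB = {
    "https://example.com/": '<a href="https://example.com/a">A</a> <a href="https://other.org/">X</a>',
    "https://example.com/a": '<a href="https://example.com/b">B</a> <a href="https://example.com/file.pdf">PDF</a>',
    "https://example.com/b": "<p>leaf page</p>",
}
LINK_RE = re.compile(r'href="([^"]+)"')


class BaseCrawler:
    """Owns the crawl loop; subclasses decide what to follow and what to do with pages."""

    def should_visit(self, url):
        return True

    def visit(self, url, html):
        pass

    def crawl(self, seed, max_pages=100):
        frontier, seen = deque([seed]), {seed}
        pages = 0
        while frontier and pages < max_pages:
            url = frontier.popleft()
            html = FAKE_WEB.get(url)  # stand-in for an HTTP download
            if html is None:
                continue
            pages += 1
            self.visit(url, html)
            for link in LINK_RE.findall(html):
                if link not in seen and self.should_visit(link):
                    seen.add(link)
                    frontier.append(link)


class SameSiteCrawler(BaseCrawler):
    def __init__(self):
        self.visited = []

    def should_visit(self, url):
        # Stay on one host and skip binary files
        return url.startswith("https://example.com/") and not url.endswith(".pdf")

    def visit(self, url, html):
        self.visited.append(url)


crawler = SameSiteCrawler()
crawler.crawl("https://example.com/")
print(crawler.visited)
```

Keeping the loop in the base class means the frontier and de-duplication logic are written once, while each crawler you write stays small.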

WebMagic

WebMagic is a flexible, scalable web crawler framework for Java, distributed via Maven. It only extracts data from HTML pages: WebMagic does not support JavaScript rendering, so if you want to scrape JavaScript-heavy websites, you will need to look elsewhere.

The library has a simple API that makes it easy to integrate into your project. It covers the whole lifecycle of web scraping and crawling, which includes downloading, URL management, content extraction, and persistence.
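The four stages of that lifecycle map naturally onto separate, swappable components. WebMagic's own documentation calls them Downloader, Scheduler, PageProcessor, and Pipeline. The Python sketch below is not WebMagic's API; it only illustrates the split. A hypothetical in-memory `PAGES` dictionary serves as the downloader, a de-duplicating queue handles URL management, regular expressions do the extraction, and a list of JSON lines handles persistence. `run_spider` and the helper functions are made-up names.

```python
import json
import re
from collections import deque

PAGES = {  # hypothetical site, served from memory instead of HTTP
    "/list": '<title>List</title><a href="/item/1"></a><a href="/item/2"></a><a href="/item/1"></a>',
    "/item/1": "<title>Item 1</title>",
    "/item/2": "<title>Item 2</title>",
}


class Scheduler:
    """URL management: a queue of pending URLs plus a set for de-duplication."""

    def __init__(self):
        self._queue, self._seen = deque(), set()

    def push(self, url):
        if url not in self._seen:
            self._seen.add(url)
            self._queue.append(url)

    def poll(self):
        return self._queue.popleft() if self._queue else None


def run_spider(seeds, download, process, persist):
    scheduler = Scheduler()
    for seed in seeds:
        scheduler.push(seed)
    while (url := scheduler.poll()) is not None:
        html = download(url)                  # downloading
        if html is None:
            continue
        record, links = process(url, html)    # content extraction
        for link in links:
            scheduler.push(link)              # URL management
        if record is not None:
            persist(record)                   # persistence


def extract(url, html):
    title = re.search(r"<title>(.*?)</title>", html)
    record = {"url": url, "title": title.group(1)} if title else None
    return record, re.findall(r'href="([^"]+)"', html)


output = []
run_spider(["/list"], PAGES.get, extract, lambda rec: output.append(json.dumps(rec)))
print([json.loads(line)["title"] for line in output])  # ['List', 'Item 1', 'Item 2']
```

Because each stage is just a function or object passed in, you could swap the in-memory downloader for a real HTTP client, or the list for a database, without touching the loop.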

WebCollector

WebCollector is a robust web scraper and crawler for Java programmers. You can use it to develop high-performing web scrapers that collect data from web pages. One thing you will come to like about this library is that it is extensible via plugins.

WebCollector integrates CEPF, a well-designed state-of-the-art web content extraction algorithm proposed by Wu, et al. This library is easy to integrate into your custom projects. Being an open-source library, you can access it on GitHub and add to its development there.

Crawley

The Crawley web scraping framework is a framework for developing web scrapers in Python. It is based on non-blocking I/O and built on Eventlet. Crawley supports both relational and non-relational databases, and you can extract data using XPath or Pyquery.
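The sketch below illustrates XPath-style extraction without Crawley itself. It uses Python's built-in `xml.etree.ElementTree`, which supports a limited subset of XPath, including `[@attr='value']` predicates. The product markup is hypothetical. Because ElementTree is an XML parser, it only accepts well-formed markup; real-world HTML usually needs a more forgiving parser such as lxml.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed product listing
PAGE = """
<html><body>
  <div class="product"><span class="name">Kettle</span><span class="price">24.99</span></div>
  <div class="product"><span class="name">Toaster</span><span class="price">31.50</span></div>
</body></html>
"""

root = ET.fromstring(PAGE)
products = [
    {
        # findtext returns the text of the first child matching the path
        "name": div.findtext("span[@class='name']"),
        "price": float(div.findtext("span[@class='price']")),
    }
    for div in root.iterfind(".//div[@class='product']")
]
print(products)  # [{'name': 'Kettle', 'price': 24.99}, {'name': 'Toaster', 'price': 31.5}]
```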

Pyquery is a jQuery-like library for Python. Crawley comes with native support for cookie handling, which makes it a good tool for websites that use cookies for session persistence, such as websites you need to log in to.
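Cookie-based sessions work by storing the cookies a server sets (for example, after you log in) and sending them back on every later request. Here is a minimal sketch of that bookkeeping using the standard library's `http.cookies.SimpleCookie`. The `CookieSession` class and the cookie names and values are hypothetical. The class deliberately ignores domain, path, and expiry rules, which a real cookie jar such as `http.cookiejar.CookieJar` enforces.

```python
from http.cookies import SimpleCookie


class CookieSession:
    """Minimal cookie store: remember Set-Cookie values and replay them."""

    def __init__(self):
        self.jar = SimpleCookie()

    def store(self, set_cookie_header):
        # Parses "name=value; Path=/; HttpOnly" style headers
        self.jar.load(set_cookie_header)

    def cookie_header(self):
        # Value for the Cookie request header on subsequent requests
        return "; ".join(f"{name}={morsel.value}" for name, morsel in self.jar.items())


session = CookieSession()
# Pretend a login response sent these Set-Cookie headers (hypothetical values)
session.store("sessionid=abc123; Path=/; HttpOnly")
session.store("csrftoken=xyz789; Path=/")
print(session.cookie_header())  # sessionid=abc123; csrftoken=xyz789
```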

Portia

Portia is the second tool from Scrapinghub on this list. It is a different type of web scraper, developed for a different audience: while the other tools in this article are built for developers, Portia can be used even without coding skills.

Portia is an open-source tool that allows you to scrape websites visually. With Portia, you annotate a web page to identify the data you wish to extract, and based on these annotations, Portia works out how to scrape data from similar pages.
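The core idea of learning from annotations can be illustrated with a deliberately simple toy. It records where the annotated value sits in a sample page, then reads the same position in a similar page. This sketch rests on strong assumptions and is not how Portia is implemented; Portia's real extraction is far more tolerant of layout changes. It uses Python's `xml.etree.ElementTree`, so the hypothetical pages must be well-formed markup. `learn_path` and `apply_path` are made-up names.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

Path = List[Tuple[str, int]]  # (tag, child index) steps from the root


def learn_path(sample_html: str, annotated_text: str) -> Path:
    """Find the element whose text equals the annotated value and record its path."""
    root = ET.fromstring(sample_html)

    def search(node, path):
        if (node.text or "").strip() == annotated_text:
            return path
        for i, child in enumerate(node):
            found = search(child, path + [(child.tag, i)])
            if found is not None:
                return found
        return None

    path = search(root, [])
    if path is None:
        raise ValueError("annotated text not found in sample page")
    return path


def apply_path(html: str, path: Path) -> Optional[str]:
    """Follow a learned path in a new page; None if the layout differs."""
    node = ET.fromstring(html)
    for tag, index in path:
        children = list(node)
        if index >= len(children) or children[index].tag != tag:
            return None
        node = children[index]
    return (node.text or "").strip()


SAMPLE = "<html><body><h1>Kettle</h1><p>Price: <b>24.99</b></p></body></html>"
OTHER = "<html><body><h1>Toaster</h1><p>Price: <b>31.50</b></p></body></html>"

price_path = learn_path(SAMPLE, "24.99")  # the user "annotates" the price
print(apply_path(OTHER, price_path))      # 31.50
```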

Jaunt

Jaunt is a project developed for Java programmers for quickly building web automation tools. It comes with a headless browser, which makes it possible to automate browsing tasks programmatically.

With this tool, you can carry out web scraping tasks easily. You can think of it as a browser without a GUI that visits websites, downloads their content, and parses out the data you need. Note, however, that Jaunt's browser does not execute JavaScript, so for JavaScript-rich pages you will need a tool that drives a real browser.

Node-Crawler

Node-Crawler is another Node.js library for developing web crawlers and scrapers. This Node.js library can be seen as a lightweight library that comes packed with a lot of web scraping features.

It is suitable for a distributed scraping architecture, supports hard coding, and is built on non-blocking asynchronous I/O, which suits a scraper's pipeline-style operation. By default, it uses Cheerio for querying and parsing the DOM, but you can replace it with another DOM parser. This tool is convenient, efficient, and easy to use.
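Node-Crawler is JavaScript, but the idea behind non-blocking asynchronous I/O is easy to sketch in Python with `asyncio`. Many requests are in flight at once, while a semaphore caps how many run concurrently. Node-Crawler exposes a similar limit through its `maxConnections` option. The `fake_fetch` coroutine is a hypothetical stand-in for an HTTP request, so the example runs offline, and `crawl_all` is a made-up name.

```python
import asyncio


async def fake_fetch(url):
    # Stand-in for a non-blocking HTTP request; sleep yields to the event loop
    await asyncio.sleep(0.01)
    return f"<html>{url}</html>"


async def crawl_all(urls, max_connections=2):
    limit = asyncio.Semaphore(max_connections)
    in_flight = 0
    peak = 0

    async def worker(url):
        nonlocal in_flight, peak
        async with limit:  # at most max_connections workers pass this point at once
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await fake_fetch(url)
            finally:
                in_flight -= 1

    # gather runs all workers concurrently and returns results in input order
    pages = await asyncio.gather(*(worker(u) for u in urls))
    return pages, peak


pages, peak = asyncio.run(crawl_all([f"https://example.com/{i}" for i in range(6)]))
print(len(pages), peak)  # 6 2
```

While one request waits on the network, the event loop runs other work instead of blocking, which is what makes this style efficient for I/O-bound scraping.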

StormCrawler

StormCrawler is a software development kit (SDK) for building efficient, high-performance web scrapers and crawlers. It is built on Apache Storm and designed for distributed crawling.

The SDK is battle-tested and has proven to be scalable, resilient, easy to extend, and efficient. Although it was built with a distributed architecture in mind, it also works fine for small-scale web scraping projects. Because of what it was designed to achieve, it is among the fastest options when it comes to fetching data.
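One common way distributed crawlers split the work is to partition URLs by host. Every URL for a given site then goes to the same worker, and that worker can enforce politeness rules (delays, robots.txt) for the site on its own. The sketch below shows that idea in Python. It is a generic illustration under that assumption, not StormCrawler's code, and `worker_for` and `partition` are hypothetical names.

```python
import zlib
from urllib.parse import urlsplit


def worker_for(url, num_workers):
    """Map a URL to a worker index based only on its host."""
    host = urlsplit(url).hostname or ""
    # crc32 is stable across processes, unlike Python's built-in hash() for str
    return zlib.crc32(host.encode("utf-8")) % num_workers


def partition(urls, num_workers):
    buckets = [[] for _ in range(num_workers)]
    for url in urls:
        buckets[worker_for(url, num_workers)].append(url)
    return buckets


urls = ["https://a.example/1", "https://b.example/1", "https://a.example/2", "https://c.example/"]
for i, bucket in enumerate(partition(urls, 3)):
    print(i, bucket)
```

Hashing the host rather than the full URL is the key design choice: it keeps each site's traffic on one worker, at the cost of uneven load when a few sites dominate the crawl.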

Conclusion

With open-source software, web scraping has been made easier, and you do not have to pay to use a library or framework. On top of that, these tools can noticeably improve your workflow.

You also get the chance to read the code that powers these web crawlers and scrapers, and even add to the code base if you want to, provided the maintainers accept your contribution.