Are you tired of the restrictions imposed by Cloudflare when trying to scrape a website? Are you looking for ways to bypass the Cloudflare WAF? Then this article is for you. Learn what it takes to bypass this CDN and security application ethically.

Businesses that wish to connect with customers anywhere in the world have a double challenge as a result of the internet's expansion to the farthest reaches of the globe. While some corporate websites could encounter lag due to geography, others might be vulnerable to hacking or security breaches.

The issues with latency and security breaches can be overcome, though. Content Delivery Networks (CDNs) are one way to address latency on the internet. To build a CDN yourself, however, you would have to set up numerous data centers all over the world and configure DNS to route each request to the closest edge server.

On the other hand, one technique to reduce security threats is to add a software layer, or filter, that screens online requests before your systems handle them. Both approaches take a lot of time and money. This is where Cloudflare steps in.

Cloudflare is a Content Delivery Network (CDN) that provides a variety of services, mostly focusing on networking and security. Before we examine what Cloudflare is and what it does, we must first acknowledge that some people, mostly web scrapers, are not satisfied with its services.

Gathering data from target websites is known as “web crawling.” However, bot detectors like Cloudflare view these crawlers as dangerous. It therefore makes sense that some people want to get past its detection methods. In this post, we discuss how to get around Cloudflare's bot detection techniques.

What is Cloudflare?

Cloudflare is a US-based company that provides content delivery network (CDN) services, security, and a wide range of other services to speed up and secure websites. When it began operating in 2010, Cloudflare wasn't what it is now. It grew out of a research initiative to identify the sources of email spam.

That initiative was Project Honey Pot, which Lee Holloway and Matthew Prince conceived and launched in 2004. Cloudflare's stated goal today is to help build a better internet. To that end, its services include DNS, load balancing, mobile page acceleration, caching options, a Web Application Firewall (WAF), SSL/TLS support, DDoS protection, and more.

As a CDN and security company, Cloudflare owns and runs a sizable server network. These servers both speed up webpages and defend against harmful attacks like DDoS. With its emphasis on security, Cloudflare also offers customers a Web Application Firewall (WAF). A WAF protects applications from a variety of security risks, including credential stuffing, DDoS attacks, and cross-site scripting (XSS).

In general, websites that use services like Cloudflare are safer and provide a better browsing experience for their visitors. Cloudflare integrates closely with well-known platforms such as WordPress, Google Cloud, and IBM Cloud. More than 26 million websites use Cloudflare, which handles traffic from more than 1 billion IP addresses every day.

In short, Cloudflare is a worldwide network designed to make whatever you connect to the Internet secure, private, fast, and reliable. This includes your websites, APIs, and web applications.

How does Cloudflare work?

Cloudflare sits between a client and a server as an intermediary, mirroring and caching websites through a reverse proxy. More than anything else, it is the wall that visitors to your site must pass through. This intermediary architecture also lets Cloudflare filter traffic for security.

By placing itself between the client and the hosting server, it can block spam, stop bot attacks, mitigate distributed denial-of-service attacks, and detect malicious traffic. It achieves this by inspecting the requests made to your website or online application.

It looks for malicious traffic based on suspicious IP addresses, the types of resources accessed, the request payload, and the frequency of requests. It also applies firewall rules defined by the customer. Cloudflare's detection techniques fall into two broad categories: passive and active bot detection.

Passive bot detection methods use fingerprinting checks on the backend, whereas active bot detection methods depend on tests run on the client side. Cloudflare's passive methods include botnet detection, IP reputation (risk or fraud score), HTTP request headers, and TLS fingerprinting. Its active methods include CAPTCHAs, event tracking, canvas fingerprinting, and environment API querying.

How to bypass Cloudflare Web Application Firewall (WAF)

Cloudflare is known for its strict enforcement. As already mentioned, it uses both passive and active bot detection methods. The question, then, is whether Cloudflare can be bypassed. It may be challenging, but yes, it can. This matters because not all crawlers are malicious: many of these bots simply gather data from websites.

There are several techniques for achieving this. In this section, we look at a few of them.

1. Respect Robots.txt

The robots exclusion standard, or robots exclusion protocol, is usually referred to simply as robots.txt. Websites, including those behind Cloudflare, use it to communicate with web crawlers, scrapers, and other web automation bots. Some crawlers, including search engines like Google and Bing, respect a site's robots.txt file, but many web scrapers, such as email harvesters and numerous other bots, do not. Website owners have the authority to decide whether or not to permit crawling or scraping of their site.

Some websites allow bots to scrape certain pages, while others forbid bots entirely. Web crawlers should respect a website's robots.txt file during scraping. Abide by the guidelines in this file regarding which pages you may scrape and how often. Try to refrain from overwhelming websites with many requests in a short amount of time.
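As a minimal sketch of checking these guidelines before fetching a page, Python's standard `urllib.robotparser` module can parse a robots.txt file and answer whether a given user agent may request a URL. The robots.txt content, the `example-crawler` user agent, and the example.com URLs below are hypothetical and only illustrate the idea.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A real crawler would load the file from
# the target site instead, for example with set_url() followed by read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-crawler"  # hypothetical crawler name


def build_parser(robots_text: str) -> RobotFileParser:
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Ask whether each URL may be fetched under the parsed rules.
allowed_public = parser.can_fetch(USER_AGENT, "https://example.com/products")
allowed_private = parser.can_fetch(USER_AGENT, "https://example.com/private/x")

# Crawl-delay is optional; crawl_delay() returns None when it is absent.
delay = parser.crawl_delay(USER_AGENT)

print(allowed_public, allowed_private, delay)
```

A crawler built this way would skip any URL for which `can_fetch` returns `False` and wait at least the reported crawl delay between requests.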

2. Use quality proxies

One technique to get past Cloudflare's passive detection when scraping a protected website is to use quality proxies. Most websites log the IP addresses of their visitors, so you need a way to avoid giving away your identity, especially when scraping a website that has Cloudflare integrated.

As a result, when carrying out extensive scraping on a significant website, you should have a large number of IP addresses at your disposal. Residential proxy providers like Bright Data are well suited to this purpose because they offer a massive pool of IP addresses.

- Bright Data residential proxy network – Editor's choice

- Soax mobile proxy network – Most stable choice

- Smartproxy residential proxy – Budget choice

You can also look to private proxy providers like MyPrivateProxy for a pool of IP addresses that masks your identity from Cloudflare detection. For reliability's sake, residential and private proxies work best, although they cost more than other options. The essence of all this is that proxies keep Cloudflare from tying your requests to a single IP address.

3. Pay attention to HTTP Headers

Cloudflare can use HTTP request headers to tell whether you're a robot. A non-browser user agent, such as python-requests/2.22.0, makes it simple to identify a scraper as a bot, so a scraper should set its request headers deliberately rather than rely on library defaults. Note that Cloudflare's HTTP Request Header Modification Rules work on the other side of the connection: they let a site owner change the headers of incoming HTTP requests. These rules support three operations. First, you can set a literal string value as the header's value. This replaces the previous value or adds a new header to the request.

Second, you can set the value of a header according to an expression, again either adding a new header or overwriting an existing one. Third, you can remove an HTTP header from the request. You create an HTTP Request Header Modification Rule through the API or the dashboard.
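On the client side, a scraper controls the headers it sends. The sketch below uses only Python's standard library to build a request with explicit headers instead of library defaults. It does not send anything over the network. The `build_request` helper, the user agent string, and the example.com URLs are assumptions made for illustration, not values taken from any particular tool.

```python
from urllib.request import Request


def build_request(url: str, user_agent: str) -> Request:
    """Build (but do not send) a request with explicit headers."""
    headers = {
        "User-Agent": user_agent,
        "Accept": "text/html,application/xhtml+xml;q=0.9,*/*;q=0.8",
        "Accept-Language": "en-US,en;q=0.9",
    }
    return Request(url, headers=headers)


# Hypothetical crawler identity that tells the site owner who is calling.
request = build_request(
    "https://example.com/",
    "example-crawler/1.0 (+https://example.com/bot-info)",
)

# urllib normalizes header names, e.g. "User-Agent" is stored as "User-agent".
for name, value in request.header_items():
    print(f"{name}: {value}")
```

The same idea applies to any HTTP client: decide explicitly which headers each request carries and keep them consistent across a crawl.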

4. Change your crawling pattern.

Getting blacklisted is a regular problem for people who don't know how to crawl a website without getting blocked. Humans usually vary what they do and rarely repeat the same action in exactly the same way. Their browsing behavior looks irregular.

On the other hand, because they are designed to do so, bots always crawl in the same way. Unless specifically instructed to do so, they never alter the pattern. In essence, a crawling pattern describes how your crawler is set up to navigate the page.

If you continue to use the same crawling pattern, Cloudflare will identify you and block you. To make your crawling appear less predictable, you can add sporadic clicks, scrolls, and mouse movements. However, the behavior shouldn't be wholly unpredictable. Consider how a typical user would navigate the website when creating a crawling pattern, and then apply those principles to the tool properly.

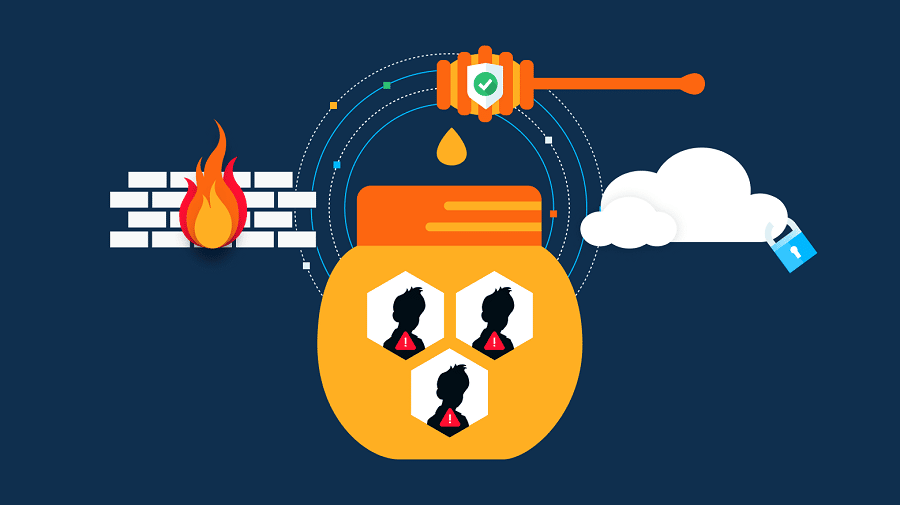

5. Be careful with honeypots

Websites take strong precautions, like integrating Cloudflare, to keep hackers out. Some also set up honeypots to lure attackers and reveal whether any hacking attempts have been made on the website. In this context, honeypots are links planted in the HTML code.

These links are hidden from ordinary users, but web crawlers can see them. Because only a robot would follow such a link, honeypots are used to identify and block web crawlers. Setting up honeypots involves a considerable amount of work.

For that reason, not many site owners choose this method. Be aware, however, that your target could be using honeypot traps if your requests are denied and your crawler is detected. Detecting honeypots reliably is difficult and takes a lot of programming effort, so the technique is not widely used on either the server side or the scraper side.
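One rough way to steer clear of such traps is to skip links that are hidden in the markup itself. The sketch below uses Python's standard `html.parser` module and flags links that carry the `hidden` attribute or an inline `display:none` / `visibility:hidden` style. This is only a heuristic under simple assumptions: links hidden through external stylesheets or scripts would not be caught, and the class name and sample HTML are made up for illustration.

```python
from html.parser import HTMLParser

# Inline-style fragments that usually mean an element is not shown to users.
HIDDEN_MARKERS = ("display:none", "visibility:hidden")


class VisibleLinkCollector(HTMLParser):
    """Split <a href> links into visible ones and likely honeypots."""

    def __init__(self):
        super().__init__()
        self.visible_links = []
        self.suspect_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # Normalize the inline style so "display: none" matches "display:none".
        style = (attributes.get("style") or "").replace(" ", "").lower()
        hidden = "hidden" in attributes or any(m in style for m in HIDDEN_MARKERS)
        (self.suspect_links if hidden else self.visible_links).append(href)


# Hypothetical page fragment with one normal link and two hidden ones.
SAMPLE_HTML = """
<a href="/products">Products</a>
<a href="/trap-1" style="display: none">secret</a>
<a href="/trap-2" hidden>secret</a>
"""

collector = VisibleLinkCollector()
collector.feed(SAMPLE_HTML)
print(collector.visible_links)  # links a crawler may follow
print(collector.suspect_links)  # links to leave alone
```

A crawler would then follow only the entries in `visible_links`.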

6. Use Headless Browsers

Using headless browsers is another way to get past Cloudflare's checks. The content a website shows can depend on the browser being used. The issue for scrapers is that on many sites the content is rendered by JavaScript code in the browser rather than delivered in the server's raw HTML response. A headless browser, which executes that JavaScript, can be used in this situation. Running Chrome in headless mode is one such option.

One of the more effective approaches is to set up the Chrome browsing context with undetected-chromedriver. Undetected-chromedriver is an optimized Selenium Chromedriver patch designed not to trigger anti-bot services like Distil Networks, Imperva, DataDome, or Botprotect.io. For improved success rates, try it with Selenium, or use puppeteer-extra-plugin-stealth if you work with Puppeteer.

7. Don't contradict the JavaScript Challenge

Nested JavaScript elements make data difficult to obtain. Websites use numerous JavaScript features to show content in response to specific user actions. It is common, for example, to show product images in a search box only after the user has typed something.

JavaScript can also cause numerous other problems for a scraper, including memory leaks, application instability, and occasionally full crashes. Dynamic features are frequently a hassle. Render JavaScript only when the data cannot be obtained any other way.

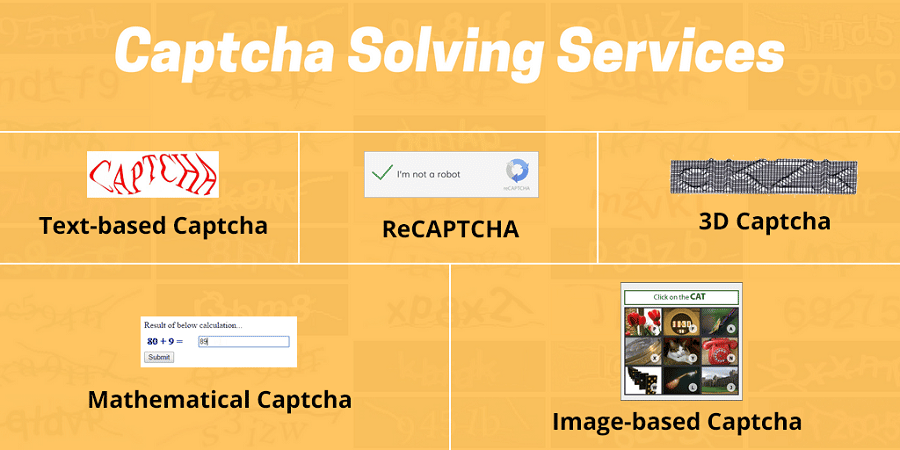

8. Use Captcha solving services

One of the most popular anti-scraping tools is the captcha. Websites ask users to complete a variety of puzzles to verify that they are human, and crawlers typically can't get past them. Even the most skilled web scrapers occasionally encounter a CAPTCHA.

Using a captcha solver is therefore another option for keeping your scraping operations running on a website with Cloudflare integration. Various CAPTCHA-solving services are available for the task, and both machine-learning-based and human-based solvers are suitable.

The 2Captcha Solver plugin for Google Chrome is one example of a tool that does this automatically. It cannot solve every difficult CAPTCHA, though, and on Cloudflare-protected pages there is no simple way to get around captchas. To handle them, you must understand how the JavaScript code encrypts the token. The 2Captcha Solver plugin can help here as well, because it lets you inspect the callback function code.

9. Beware of Website Layout change

Apart from Cloudflare's bot detection, many websites change their design for a number of reasons. Scrapers may struggle with sites whose layouts change frequently, because the data they target can move to unexpected places on the page. Large websites also do this.

To keep scraping, your crawler must be able to recognize these ongoing changes. A simple way to do so is to keep track of the number of successful requests per crawl. You can also use XPath or CSS selectors to scrape information from these websites.
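Tracking successful requests per crawl can be sketched as a small counter plus a threshold check. The names `CrawlStats` and `layout_probably_changed`, the 80% threshold, and the minimum sample size are assumptions chosen for illustration. Suitable values depend on the site and on how often extraction normally fails.

```python
from dataclasses import dataclass


@dataclass
class CrawlStats:
    """Counts how many pages yielded the expected data during one crawl."""

    attempted: int = 0
    succeeded: int = 0

    def record(self, extracted_ok: bool) -> None:
        self.attempted += 1
        if extracted_ok:
            self.succeeded += 1

    @property
    def success_rate(self) -> float:
        # With no attempts yet there is no evidence of a problem.
        return self.succeeded / self.attempted if self.attempted else 1.0


def layout_probably_changed(stats: CrawlStats,
                            threshold: float = 0.8,
                            min_samples: int = 10) -> bool:
    """Flag a crawl whose success rate fell below the threshold."""
    return stats.attempted >= min_samples and stats.success_rate < threshold


# Simulated crawl: 6 of 10 pages still match the selectors.
stats = CrawlStats()
for ok in [True, True, False, True, False, True, False, True, False, True]:
    stats.record(ok)

print(stats.success_rate)
print(layout_probably_changed(stats))
```

When the check fires, the selectors most likely need to be reviewed against the current page structure before the crawl continues.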

10. Don't overload the server during crawling.

As more and more people turn to web scraping for data acquisition, automated tools that quickly convert web data into spreadsheets are becoming increasingly common. The scraping process puts the target website under extra pressure. A website's server may go down if a crawler sends it an excessively high volume of requests without restraint.

Because of this, scrapers frequently get banned. Anti-scraping methods are designed to recognize this unusual activity and block the IP address. Slow down your requests to avoid this. Python's time module is useful here, although an intelligent anti-scraping tool can still spot fixed delays. Therefore, combine it with Python's random module to vary the delays and better simulate human behavior.
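A minimal sketch of this idea combines `time.sleep` with `random.uniform` so that the pause between requests varies. The helper names, the 2-second base delay, and the 1.5-second jitter are assumptions for illustration. The `fetch` and `sleep` parameters are injectable so the example runs without real network access or real waiting.

```python
import random
import time


def polite_delay(base_seconds: float = 2.0,
                 jitter_seconds: float = 1.5,
                 rng=random) -> float:
    """Return a delay between base and base + jitter seconds."""
    return base_seconds + rng.uniform(0.0, jitter_seconds)


def crawl(urls, fetch, sleep=time.sleep):
    """Fetch each URL in order, pausing a random interval between requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(polite_delay())
        results.append(fetch(url))
    return results


# Demonstration with a fake fetch function and a recorder instead of real
# sleeping; in a real crawl, leave sleep at its default (time.sleep).
recorded_delays = []
pages = crawl(
    ["https://example.com/a", "https://example.com/b", "https://example.com/c"],
    fetch=lambda url: f"<html>{url}</html>",
    sleep=recorded_delays.append,
)

print(len(pages), recorded_delays)
```

If the site's robots.txt specifies a crawl delay, that value is a sensible lower bound for `base_seconds`.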

FAQs About Bypass Cloudflare

Q. Is there a way to bypass Cloudflare while scraping?

A site that uses Cloudflare as protection runs security checks on every visitor. Failing these checks can result in access being denied, and requests coming from IP addresses with a bad reputation can be redirected to a captcha challenge page.

There are, nevertheless, techniques to avoid Cloudflare detection and these obstacles. They include using captcha solvers, using headless browsers, and paying attention to robots.txt and honeypots, among others. For the rest, review the topics in this article.

Q. Why should I bypass Cloudflare?

It is perfectly fine if you do not need to get around Cloudflare detection, and Cloudflare's bot detection service is not bad in itself. Simply put, it works against data mining tools and scraping activities: apart from bots that have been whitelisted, crawlers are treated as threats. Without a way around it, your web scraping program may not collect the kind and amount of data you need.

Q. Is it illegal to bypass Cloudflare?

Legality is a difficult subject here. If you bypass Cloudflare on a website that you do not own or are not authorized to test, for example to conduct a DDoS attack, you are breaking the law. To be clear, there are ethical ways to avoid being detected by Cloudflare, but your actions may be deemed criminal if you stray from them. So it is wise to understand the legal implications of a bypass before implementing one.

Conclusion

Data has become the driving force for many people and organizations, hence the need for data scraping. While some websites allow it, others don't. On websites protected by solutions like Cloudflare, scraping can be incredibly difficult. Cloudflare is not inherently bad; it simply restricts web crawlers.

As this post has shown, one way to carry out your scraping tasks on these websites is to get past Cloudflare's detection mechanisms. We've detailed several ways to do so. Look over them to see which is most helpful for your use case.