Are you interested in algorithmic trading and need to scrape financial data from Yahoo Finance? Stay on this page to discover how you can use Python and its associated libraries to extract financial data from Yahoo Finance, code included.

In the financial and investment market, professionals do not just depend on their gut and emotions, as that has proven not to yield desirable results in the long run. Instead, they depend on data to decide which asset to buy and which to sell. Yahoo Finance is one of the web services that provide such financial data. Investment companies and individuals can use it to make decisions manually, or they can extract the data in bulk and feed it to a trading bot that makes decisions on their behalf based on predefined trading models.

Yahoo Finance has an API that you can use to fetch financial data. However, this API is fragile, limiting, and does not provide you with all of the data you need. This is the reason there are many unofficial APIs for Yahoo Finance. In this article, we will show you how to develop a web scraper that extracts financial data from Yahoo Finance using Python.

Overview of the Web Scraper to Be Developed

A web scraper is a computer program developed to automate the process of collecting data from the Internet. This is usually done aggressively, by sending many requests within a short period, which makes it possible to collect data from thousands of web pages within a few minutes.

In this guide, we will develop a web scraper that collects the summary financial data of a stock listed on Yahoo Finance. The scraper is a class that accepts the stock's ticker as an argument and pulls the summary data for you.

Requirements for Coding and Running the Script

We will use the Python programming language and its associated third-party libraries to code the Yahoo Finance scraper.

Python

You need to have Python installed on your PC. Many operating systems ship with Python pre-installed. However, the pre-installed version may be Python 2, which is no longer maintained, so you will need to install Python 3. Installing Python 3 is easy and only requires a few steps. Mac users can download the installer from the official Python download page, and Linux users can follow the installation instructions for their distribution. Windows users can follow the steps below.

- Visit the Windows download page and download the latest version of Python.

- Launch the installer and follow the prompts.

- Check the “Add Python 3.x to PATH” box.

- Click on “Customize installation”.

- Make sure pip is checked; if it is not, check it.

pip

pip is a package management system used to install and manage software packages written in Python. Many packages can be found in the Python Package Index (PyPI). If you followed the steps above as a Windows user, you already have pip installed. Linux and Mac users can consult the official pip documentation to learn how to install it.

With pip installed, all you need to install the other packages is a command of the form pip install <package-name>.

Requests

The Requests library is a third-party Python library developed to make sending HTTP requests easier. It is built on top of urllib3 and offers a much simpler interface than the standard library's urllib package, which is cumbersome to use directly. Run the pip command below in Command Prompt if you are a Windows user, or in Terminal if you are a Mac user.

pip install requests

We will use Requests to download the full web page that contains the data. Requests is easy to use and works best for pages that do not depend on JavaScript to render content. The Quickstart guide in the official Requests documentation covers the basics.

BeautifulSoup

Requests only downloads the content of a page. With the help of BeautifulSoup, you can extract the required data from it. BeautifulSoup is not a parser itself; it uses a parser under the hood and makes traversing the document and reaching the data of interest easy, which can be difficult with regular parsing libraries. Below is the pip command to run in Command Prompt or Terminal to install BeautifulSoup.

pip install beautifulsoup4

See BeautifulSoup's official documentation for a full guide.
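As a rough illustration of how BeautifulSoup pulls values out of downloaded markup, here is a minimal sketch. It assumes a small hand-written HTML string in place of a real page; the markup, the `title` and `price` class names, and the `extract_title_and_price` helper are all made up for this example. In a real run, the string would come from `requests.get(url).text`.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded page.
SAMPLE_HTML = """
<html><body>
  <h1 class="title">Example Corp (EXMP)</h1>
  <span class="price">123.45</span>
</body></html>
"""


def extract_title_and_price(html):
    """Parse the HTML and return the heading text and the price text."""
    soup = BeautifulSoup(html, "html.parser")
    # find() returns the first element matching the tag and attributes.
    title = soup.find("h1", {"class": "title"}).text
    price = soup.find("span", {"class": "price"}).text
    return title, price


if __name__ == "__main__":
    print(extract_title_and_price(SAMPLE_HTML))
```

The same `find(tag, attributes)` pattern is what the scraper in this guide relies on, only with different attributes.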

Step 1: Create a Web Scraper Class

We want to use the object-oriented approach, so all of the logic and methods are housed in a class named YahooFiScraper. The class has two custom methods: _scrape_data() and get_data(). The leading underscore in _scrape_data() marks it as private by convention (Python does not enforce this), so the only method meant to be called from outside the class is get_data().

import requests
from bs4 import BeautifulSoup


class YahooFiScraper:
    def __init__(self, ticker):
        # Summary page for the given ticker, e.g. TSLA.
        self.url = "https://finance.yahoo.com/quote/{0}?p={1}".format(ticker, ticker)
        # Placeholders that will be filled in once the page is scraped.
        self.name = ""
        self.current_price = ""
        self.market_cap = ""
        self.previous_close = ""
        self.open = ""
        self.bid = ""
        self.fifty2_weeks_range = ""
        self.volume = ""
        self.average_volume = ""
        self.beta = ""
        self.pe_ratio = ""
        self.eps = ""
        self.earning_date = ""

The code above shows the constructor. The self.url variable holds the URL of the page; the {0} and {1} placeholders in the string are filled in by format() with the ticker passed in when the class is instantiated. The other variables will hold the scraped values, and their names are self-explanatory.
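The URL construction can also be sketched on its own. The following is only an illustration under a few assumptions: the `build_quote_url` helper and `BASE_URL` constant are hypothetical names, upper-casing the ticker is a convenience choice, and percent-encoding is added so that tickers containing characters such as `^` still produce a valid URL.

```python
from urllib.parse import quote

# {0} is used twice, so the ticker only needs to be passed once.
BASE_URL = "https://finance.yahoo.com/quote/{0}?p={0}"


def build_quote_url(ticker):
    """Return the quote page URL for a ticker, percent-encoding unsafe characters."""
    cleaned = ticker.strip().upper()  # assumption: tickers are treated as upper-case
    if not cleaned:
        raise ValueError("ticker must not be empty")
    return BASE_URL.format(quote(cleaned, safe=""))


if __name__ == "__main__":
    print(build_quote_url("TSLA"))
```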

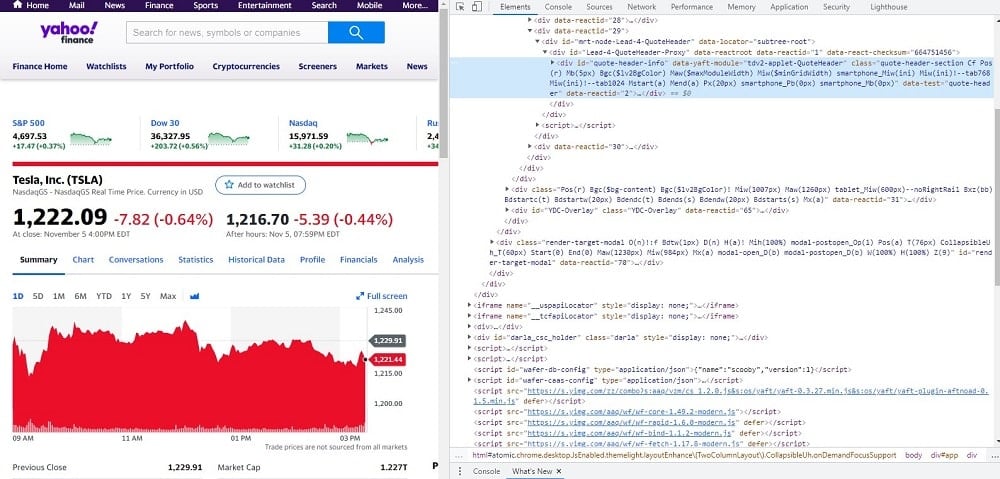

Step 2: Inspect Page Source for Element of Interest

Let us go to the page of interest, https://finance.yahoo.com/quote/TSLA?p=TSLA, which is the page for Tesla, and look at the elements holding the data we are interested in. We will examine the HTML of the page, looking for the elements that wrap the data and the unique attributes that can be used to reach them. Open the page in your browser and view the page source. The exact steps depend on the browser you are using.

Fortunately for us, all of the data of interest is wrapped in elements that carry a data-reactid attribute, each with its own number as the value. Those numbers are what the scraper uses to locate each element.

Step 3: Code _scrape_data() Method

With the page source inspected and the location of each piece of data known, it is now time to code the method for scraping the data. Below is the method that scrapes the financial data on the page.
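Reading through a large page source by hand is tedious, so a small helper can list the candidate elements for you. The sketch below uses only the standard library's `html.parser`; the `ReactIdLister` class, the `list_reactids` function, and the sample markup are hypothetical and only illustrate the idea of collecting every element that has a `data-reactid` attribute.

```python
from html.parser import HTMLParser


class ReactIdLister(HTMLParser):
    """Collect the id, tag and text of every element with a data-reactid attribute."""

    def __init__(self):
        super().__init__()
        self.found = []        # one dict per matching element
        self._current = None   # element currently collecting text, if any

    def handle_starttag(self, tag, attrs):
        reactid = dict(attrs).get("data-reactid")
        if reactid is not None:
            self._current = {"reactid": reactid, "tag": tag, "text": ""}
            self.found.append(self._current)

    def handle_data(self, data):
        if self._current is not None:
            self._current["text"] += data.strip()

    def handle_endtag(self, tag):
        # Simplification: stop collecting text at the first closing tag.
        self._current = None


def list_reactids(html):
    """Return (reactid, tag, text) tuples in document order."""
    parser = ReactIdLister()
    parser.feed(html)
    parser.close()
    return [(e["reactid"], e["tag"], e["text"]) for e in parser.found]


if __name__ == "__main__":
    sample = '<h1 data-reactid="7">Example Corp</h1><span data-reactid="31">123.45</span>'
    for row in list_reactids(sample):
        print(row)
```

Running this over the saved page source gives a quick table of ids and values to compare against what you see in the browser.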

    def _scrape_data(self):
        # Download the page and parse it. Keep the BeautifulSoup object itself
        # (not its .text), otherwise find() cannot look up elements.
        html = requests.get(self.url).text
        soup = BeautifulSoup(html, "html.parser")
        # Each value sits in an element identified by its data-reactid number.
        self.name = soup.find("h1", {"data-reactid": "7"}).text
        self.current_price = soup.find("span", {"data-reactid": "31"}).text
        self.market_cap = soup.find("span", {"data-reactid": "84"}).text
        self.previous_close = soup.find("span", {"data-reactid": "43"}).text
        self.open = soup.find("span", {"data-reactid": "48"}).text
        self.bid = soup.find("span", {"data-reactid": "53"}).text
        self.fifty2_weeks_range = soup.find("span", {"data-reactid": "66"}).text
        self.volume = soup.find("span", {"data-reactid": "71"}).text
        self.average_volume = soup.find("span", {"data-reactid": "76"}).text
        self.beta = soup.find("span", {"data-reactid": "89"}).text
        self.pe_ratio = soup.find("span", {"data-reactid": "94"}).text
        self.eps = soup.find("span", {"data-reactid": "99"}).text
        self.earning_date = soup.find("td", {"data-reactid": "103"}).text
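One weakness of calling `.text` directly on the result of `find()` is that `find()` returns `None` when no element matches, which raises an `AttributeError`. A possible way around this is a small lookup helper, sketched below. The `text_by_reactid` function and the sample markup are hypothetical and are not part of the scraper in this guide.

```python
from bs4 import BeautifulSoup


def text_by_reactid(soup, tag, reactid, default=None):
    """Return the stripped text of the first matching element, or default if absent."""
    element = soup.find(tag, {"data-reactid": reactid})
    if element is None:
        return default
    return element.get_text(strip=True)


# Hypothetical markup standing in for the downloaded page.
SAMPLE_HTML = '<h1 data-reactid="7"> Example Corp </h1>'
soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

if __name__ == "__main__":
    print(text_by_reactid(soup, "h1", "7"))
    print(text_by_reactid(soup, "span", "31", default="N/A"))
```

With a helper like this, a missing element yields a default value instead of stopping the whole scrape.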

Step 4: Code get_data() Method

With the scraping method in place, the core of the scraper is complete. However, we still need an easy way to return the data for the stock being scraped, and get_data() does that. It returns all of the data in a dictionary, so you can use dictionary methods to get the relevant values. Below is the code for this method.

    def get_data(self):
        # Return every scraped field in a single dictionary.
        return {
            "name": self.name,
            "price": self.current_price,
            "marketcap": self.market_cap,
            "previous_close": self.previous_close,
            "open": self.open,
            "bid": self.bid,
            "52_weeks_range": self.fifty2_weeks_range,
            "volume": self.volume,
            "average_volume": self.average_volume,
            "beta": self.beta,
            "pe_ratio": self.pe_ratio,
            "eps": self.eps,
            "earning_date": self.earning_date
        }
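Because the result is a plain dictionary, it is easy to pass on to other tools. As one possible use, the sketch below turns a list of such dictionaries into CSV text with the standard library's `csv` module. The `rows_to_csv` helper is hypothetical, and the sample values are invented placeholders, not real market data.

```python
import csv
import io


def rows_to_csv(rows):
    """Serialise a list of get_data()-style dictionaries to CSV text."""
    if not rows:
        return ""
    buffer = io.StringIO()
    # The keys of the first dictionary become the column headers.
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    # Invented placeholder values for illustration only.
    sample = {"name": "Example Corp (EXMP)", "price": "123.45", "open": "120.00"}
    print(rows_to_csv([sample]))
```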

Full Web Scraper Code for Scraping Yahoo Finance

import requests
from bs4 import BeautifulSoup


class YahooFiScraper:
    def __init__(self, ticker):
        # Summary page for the given ticker, e.g. TSLA.
        self.url = "https://finance.yahoo.com/quote/{0}?p={1}".format(ticker, ticker)
        self.name = ""
        self.current_price = ""
        self.market_cap = ""
        self.previous_close = ""
        self.open = ""
        self.bid = ""
        self.fifty2_weeks_range = ""
        self.volume = ""
        self.average_volume = ""
        self.beta = ""
        self.pe_ratio = ""
        self.eps = ""
        self.earning_date = ""
        # Scrape immediately so the data is ready once the object exists.
        self._scrape_data()

    def _scrape_data(self):
        # Keep the BeautifulSoup object itself (not its .text) so find() works.
        html = requests.get(self.url).text
        soup = BeautifulSoup(html, "html.parser")
        self.name = soup.find("h1", {"data-reactid": "7"}).text
        self.current_price = soup.find("span", {"data-reactid": "31"}).text
        self.market_cap = soup.find("span", {"data-reactid": "84"}).text
        self.previous_close = soup.find("span", {"data-reactid": "43"}).text
        self.open = soup.find("span", {"data-reactid": "48"}).text
        self.bid = soup.find("span", {"data-reactid": "53"}).text
        self.fifty2_weeks_range = soup.find("span", {"data-reactid": "66"}).text
        self.volume = soup.find("span", {"data-reactid": "71"}).text
        self.average_volume = soup.find("span", {"data-reactid": "76"}).text
        self.beta = soup.find("span", {"data-reactid": "89"}).text
        self.pe_ratio = soup.find("span", {"data-reactid": "94"}).text
        self.eps = soup.find("span", {"data-reactid": "99"}).text
        self.earning_date = soup.find("td", {"data-reactid": "103"}).text

    def get_data(self):
        # Return every scraped field in a single dictionary.
        return {
            "name": self.name,
            "price": self.current_price,
            "marketcap": self.market_cap,
            "previous_close": self.previous_close,
            "open": self.open,
            "bid": self.bid,
            "52_weeks_range": self.fifty2_weeks_range,
            "volume": self.volume,
            "average_volume": self.average_volume,
            "beta": self.beta,
            "pe_ratio": self.pe_ratio,
            "eps": self.eps,
            "earning_date": self.earning_date
        }


c = YahooFiScraper("TSLA")
print(c.get_data())
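Every value in the returned dictionary is a string, exactly as it appears on the page. If you want to feed the values to a trading model, they usually need to be converted to numbers first. The sketch below is one way that might be done. The `parse_number` helper is hypothetical, and the K/M/B/T suffix table is an assumption about how large figures are abbreviated, so check it against the actual page text.

```python
# Assumed abbreviations for thousands, millions, billions and trillions.
SUFFIXES = {"K": 1e3, "M": 1e6, "B": 1e9, "T": 1e12}


def parse_number(text):
    """Convert strings such as '1,234.56' or '2.5M' to a float; return None if not numeric."""
    cleaned = text.strip().replace(",", "")
    if not cleaned:
        return None
    multiplier = 1.0
    suffix = cleaned[-1].upper()
    if suffix in SUFFIXES:
        multiplier = SUFFIXES[suffix]
        cleaned = cleaned[:-1]
    try:
        return float(cleaned) * multiplier
    except ValueError:
        # Covers placeholders such as 'N/A' and composite values like ranges.
        return None


if __name__ == "__main__":
    for raw in ["1,234.56", "2.5M", "N/A"]:
        print(raw, "->", parse_number(raw))
```

Fields that hold two values, such as the bid or the 52-week range, would need to be split before being passed to a helper like this.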

Conclusion

To conclude this guide, I need to mention that the code above is only a proof of concept. You cannot use it to scrape data for many stocks before getting blocked. If you look at the code, you will also see that exceptions are not handled, so it cannot be used as a production-level script. If you are interested in coding a Yahoo Finance scraper, you should build on this and make it robust and resistant to blocking.
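As a starting point for that hardening, here is a minimal sketch of retrying a failed download with a growing pause between attempts. It is an illustration, not a complete anti-blocking strategy. The `fetch_with_retries` function and its parameters are hypothetical, and the download itself is passed in as a callable so the retry logic can be tried without touching the network.

```python
import time


def fetch_with_retries(fetch, url, retries=3, delay_seconds=2.0, sleep=time.sleep):
    """Call fetch(url), retrying on failure with a longer pause after each attempt."""
    last_error = None
    for attempt in range(1, retries + 1):
        try:
            return fetch(url)
        except Exception as error:  # a real scraper would catch narrower exception types
            last_error = error
            if attempt < retries:
                # Wait longer after each failure: 1x, 2x, 3x the base delay.
                sleep(delay_seconds * attempt)
    raise RuntimeError(f"giving up on {url} after {retries} attempts") from last_error


if __name__ == "__main__":
    def always_fails(url):
        raise ConnectionError("simulated failure")

    try:
        fetch_with_retries(always_fails, "https://example.com", retries=2, delay_seconds=0.1)
    except RuntimeError as error:
        print(error)
```

In the scraper, the `fetch` argument could be something like `lambda url: requests.get(url, timeout=10).text`, with the exception handling narrowed to the errors Requests actually raises.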