Concurrency and parallelism are crucial techniques that an Operating System (OS) uses to execute multiple processes. In this article, we discuss what they are, how they are similar and how they differ, and what their drawbacks are.

As computer science has grown, the ways programmers and developers carry out processing have become more advanced. Two terms that come up again and again in this area are concurrency and parallelism.

Though the two terms sound similar and are often confused, their principles are distinct. They became important because users want systems with more processing power that can run multiple processes quickly. To deliver that, manufacturers put more cores into the central processing unit (CPU). Making full use of those cores requires applying the concepts of concurrency and parallelism.

These two techniques are used in modern computing to execute multiple processes or programs, which improves the performance and responsiveness of systems. To understand them, a few key terms come into play.

These are multiprocessing, multithreading, multicore processors, pipelining, and distributed computing. In this article, we examine the similarities between the two processing methods and, most importantly, compare them on several parameters. But first, let's explore what each technique is.

An Overview of Concurrency and Parallelism

Concurrency

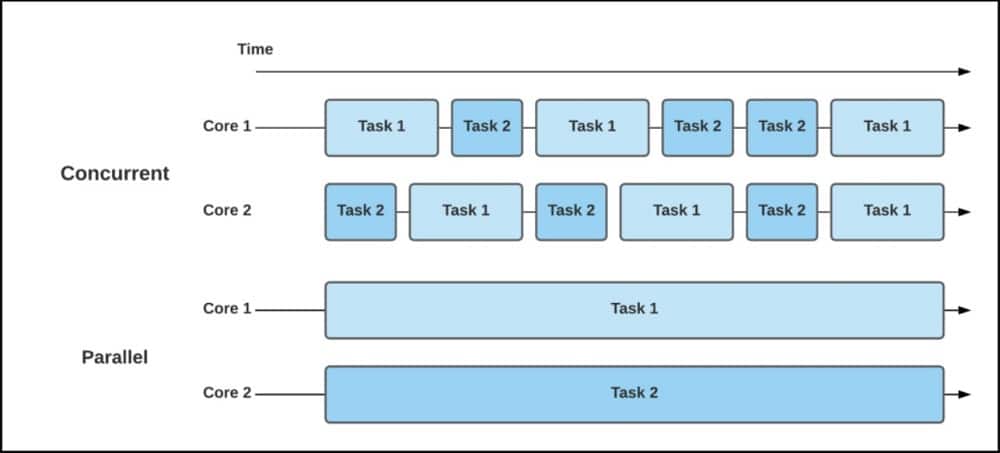

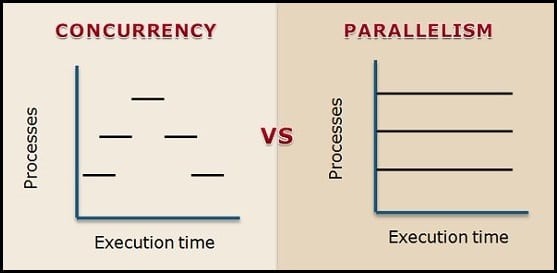

Concurrency is when multiple tasks make progress in overlapping time periods without necessarily executing at the same instant. Concurrent processing can take place even on a single-core CPU: the system switches between tasks so quickly that the switching appears seamless.

For example, suppose 4 tasks are submitted to a system. All of them need attention, but only one processing unit is available. In a concurrent system, all four tasks are in progress during the same period, but their execution is interleaved rather than simultaneous.

That means that if task 1 runs, task 2 follows, then tasks 3 and 4, and then task 1 again. One task does not have to finish before another starts. The tasks keep taking turns until every one of them completes.

The tasks are not executed simultaneously, but by switching between them quickly, a single CPU can keep hundreds of tasks in progress. In a concurrent system, timer interrupts are used to stop any single task from holding the CPU for too long.

This approach is called preemptive scheduling. Because only one task can run on a core at a time, the CPU's time has to be divided among the tasks; this is called time slicing. Concurrency is commonly implemented with multithreading, where the OS schedules threads onto the CPU in these time slices.
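The time-slicing idea described above can be sketched as a toy round-robin simulation in Python. This is only a model, not how an OS scheduler is implemented: it assumes each task needs a known number of equal work units and that the time slice (`quantum`) is measured in those units. The function name `round_robin` is illustrative.

```python
from collections import deque


def round_robin(tasks: dict[str, int], quantum: int = 1) -> list[str]:
    """Simulate time slicing on a single core (toy model).

    `tasks` maps a task name to the number of work units it still needs.
    Each turn, the task at the front of the queue runs for at most
    `quantum` units; if it is not finished, it is preempted and moved to
    the back of the queue. The result records which task held the CPU
    during each unit of time.
    """
    queue = deque(tasks.items())
    timeline: list[str] = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(quantum, remaining)
        timeline.extend([name] * ran)               # the task runs for its slice
        if remaining - ran > 0:
            queue.append((name, remaining - ran))   # preempted: back of the line
    return timeline


print(round_robin({"T1": 2, "T2": 1, "T3": 3, "T4": 2}))
# ['T1', 'T2', 'T3', 'T4', 'T1', 'T3', 'T4', 'T3']
```

With a `quantum` of 1, the timeline shows the T1, T2, T3, T4, T1, ... rotation described above. A larger quantum means fewer switches, but the other tasks wait longer for their turn.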

Parallelism

Parallelism, on the other hand, involves executing multiple tasks or processes simultaneously, at the same instant. In parallel processing, a task is often broken down into smaller sub-tasks. Unlike concurrency, this technique requires multiple cores: the sub-tasks are executed independently and at the same time on multiple processing units, such as CPU cores. Parallel execution is usually used to speed up computation and improve performance. Let us apply this to our previous example.

While task 1 runs, tasks 2, 3, and 4 run at the same time on the other cores of a multicore CPU. No task has to wait for another to finish before it starts; task 1 does not need to complete before task 2 begins. Because the tasks work in parallel, the overall job is completed much faster. Parallelism is achieved through multiprocessing, multicore processors, distributed computing, and even pipelining.

What you should understand is that many modern computers have 8, 16, or more cores. Roughly speaking, the number of cores in your CPU is the number of tasks that can be carried out at the same instant. This is what parallelism represents, so the number of cores in your processor matters: it sets the limit on how much work can run in parallel.

Similarities Between Concurrency and Parallelism

Although concurrency and parallelism have distinct attributes, they share some similarities. Let's outline them.

Handle multiple tasks: Both concurrency and parallelism can be implemented with multithreading or multiprocessing, and both manage several tasks at once. Concurrency can run on a single core, while parallelism requires multiple cores. However, the point here is not the number of cores but the tasks: both can keep hundreds of tasks in progress. This is the thin line that makes them alike.

System responsiveness: Both concurrency and parallelism help a system respond quickly. Even though their goals differ, both let tasks overlap in time. Concurrency is about usability, making sure every task keeps making progress; parallelism is about the speed and performance of the work. What connects them is that programs keep moving and multitasking feels fast. Therefore, whether on a single core or multiple cores, both can keep a system responsive.

Scalability: Systems designed with concurrency and parallelism in mind can often scale to handle more tasks, provided their designers understand how the two work. As the number of processing cores increases, the system can run more tasks simultaneously while maintaining responsiveness and performance. Concurrency and parallelism complement each other well, since both are ways of carrying out multiple tasks at runtime.

Debugging: Neither concurrency nor parallelism makes debugging easy, even though both are used to solve complex problems. In a concurrent system, tasks overlap, and if you are not careful, one task can starve another of CPU time. Similar problems arise in parallelism when tasks are not coordinated properly, for example when it is unclear when a task should stop or which task owns a piece of shared memory. Errors such as memory leaks and corrupted data can result.

Utilization: Both techniques aim to keep the processor busy. Concurrency runs other tasks while one is waiting, and parallelism spreads work across multiple cores. As a result, tasks are well balanced and computation is efficient, which improves system performance.

Comparison Between Concurrency and Parallelism

The line between concurrency and parallelism is often confusing. Having seen their similarities, in this section we list and explain the main parameters on which the two processing techniques differ.

Multiple Tasks Management

First is the management of multiple tasks. Although the two share a similarity here, the differences outweigh it. Parallelism completes tasks faster by leveraging multiple processing cores, each working on a different task, which improves overall system performance. Concurrency, by contrast, keeps a system responsive and fully utilized: it handles multiple tasks efficiently by overlapping them through time slicing.

Simultaneous Execution for Speed

The goals of concurrency and parallelism are different. Concurrency targets utilization: it makes sure no task blocks the others, executing multiple processes in overlapping periods. Parallelism targets performance and speed by running multiple processes on a multicore processor, with more than one core working at the same time. What may interest you is that concurrency on its own does not make a computation faster, even though it switches between tasks very quickly; its benefit is keeping work moving, especially while tasks wait on input/output. The way the two techniques work is also different. While both can handle hundreds of tasks, a concurrent system executes them in different time slices, whereas a parallel system executes them at the same instant.

Utilization of Resource

Concurrency is mainly concerned with keeping resources in use. It ensures that resources are utilized effectively by letting tasks progress even while others are waiting for external events. Parallelism, meanwhile, optimizes the use of available resources by distributing tasks across multiple processors. In a concurrent system, allocating CPU time carefully is crucial, because it stops one dominant task from crowding out the others. This is less of a concern in a parallel system where each task has its own core, although parallel tasks can still compete for shared resources such as memory.

Thread Interleaving

This concept is specific to concurrency. In a concurrent system, tasks interleave: they take turns on the CPU instead of each running to completion before the next begins. To illustrate, suppose tasks A to Z are submitted at the same time. All of them start, and the CPU switches between them many times per second, which is how they all keep making progress quickly. Notably, task A does not have to finish before task Z starts. Parallelism, in contrast, uses multiprocessing, so tasks do not take turns: they run at the same time on separate cores, although each still takes as long as its own work requires.

An application can be concurrent but not parallel. This means that more than one task is in progress, but no two tasks execute at the same instant. The reverse is also possible: a program can be parallel but not concurrent. For example, a single task can be split into sub-tasks that execute at the same time on different cores, without the program ever juggling several independent tasks.
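To make "concurrent but not parallel" concrete, here is a minimal sketch using Python's standard `asyncio` module. Three coroutines (the names `worker` and `main` are illustrative) interleave on a single thread; `await asyncio.sleep(0)` stands in for a point where a real task would wait for I/O and give up the CPU.

```python
import asyncio
import threading


async def worker(name: str, steps: int, log: list[tuple[str, int, int]]) -> None:
    for step in range(steps):
        # Record which task ran, which step it was on, and which OS thread ran it.
        log.append((name, step, threading.get_ident()))
        await asyncio.sleep(0)  # yield control so another task can take a turn


async def main() -> list[tuple[str, int, int]]:
    log: list[tuple[str, int, int]] = []
    await asyncio.gather(worker("A", 3, log), worker("B", 3, log), worker("C", 3, log))
    return log


log = asyncio.run(main())
print([f"{name}{step}" for name, step, _ in log])
# ['A0', 'B0', 'C0', 'A1', 'B1', 'C1', 'A2', 'B2', 'C2']
print("threads used:", len({tid for _, _, tid in log}))
# threads used: 1
```

All three tasks are in progress at once and take turns, yet only one thread ever runs them, so nothing executes in parallel.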

Non-deterministic Output

In terms of predicting a program's output, concurrency and parallelism differ. Because a parallel system runs tasks simultaneously, the issue may be less obvious there, so let's look at a concurrent system that uses a single-core CPU. Remember that a concurrent system switches between programs, uses time slicing, and interleaves their execution.

This means the output cannot always be determined in advance. Using the A-Z example above, the order in which the tasks are switched and finish on the first run may differ from the order on the second run. This is why a concurrent program is not guaranteed to produce the same output every time unless its tasks are explicitly synchronized: one task may get ahead of another on one run and fall behind it on the next.
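This non-determinism is easy to observe with Python's standard `threading` module. In this sketch (the function `run_once` and the random delays are illustrative assumptions standing in for unpredictable work), six threads each record their name when they finish. The set of results is always the same, but the order usually is not.

```python
import random
import threading
import time


def run_once(names: list[str]) -> list[str]:
    """Start one thread per name; each appends its name after a random delay."""
    finished: list[str] = []
    lock = threading.Lock()

    def task(name: str) -> None:
        time.sleep(random.uniform(0, 0.01))  # stand-in for unpredictable work or I/O
        with lock:                           # protect the shared list
            finished.append(name)

    threads = [threading.Thread(target=task, args=(n,)) for n in names]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return finished


names = [chr(c) for c in range(ord("A"), ord("F") + 1)]
first, second = run_once(names), run_once(names)
print(first)
print(second)  # often in a different order from the first run
print(sorted(first) == sorted(second) == names)  # True: same results, different order
```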

Complex Problem Solving

Debugging is a challenge throughout computing, so this is nothing new, but concurrent and parallel systems both make it harder. Of the two, concurrency is often more complex to debug than parallelism. The two also solve different kinds of problems: parallelism is essential for complex computational problems, while concurrency keeps user interfaces responsive while those computationally intensive tasks run. In short, concurrency makes sure no task is blocked, while parallelism does the work itself and delivers speed and performance.

Asynchronous and Synchronous

In programming, an asynchronous operation is one that a task can start without waiting for it to finish; the task carries on with other work and handles the result later. The timing of such operations has to be coordinated carefully to avoid one task starving another, and to avoid deadlock. A synchronous operation, by contrast, must finish before the next one starts, so there is no interleaving to manage. A concurrent system relies heavily on asynchronous operations, whereas a parallel system uses both synchronous and asynchronous coordination between its tasks.

A parallel system can handle asynchronous tasks by running several concurrent programs simultaneously on different cores. To boost the performance of your everyday programs, these terms are worth understanding, along with how their processes work. Parallelism might seem all-powerful, but concurrency is just as integral. When designing a parallel program, if you do not define when and how its tasks stop and hand back their results, errors and memory corruption can arise.
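In the programming sense, the difference between synchronous and asynchronous can be sketched with Python's `asyncio`. Here `operation` is a hypothetical stand-in for a slow call such as a network request, simulated with `asyncio.sleep`. The synchronous style waits for each call before starting the next, while the asynchronous style starts all of them and then waits for the results together with `asyncio.gather`.

```python
import asyncio
import time


async def operation(name: str, seconds: float) -> str:
    # Hypothetical stand-in for a slow call such as a network request.
    await asyncio.sleep(seconds)
    return name


async def one_after_another(jobs: dict[str, float]) -> list[str]:
    # Synchronous style: each operation finishes before the next one starts.
    return [await operation(name, secs) for name, secs in jobs.items()]


async def overlapped(jobs: dict[str, float]) -> list[str]:
    # Asynchronous style: start every operation, then wait for all results.
    return await asyncio.gather(*(operation(n, s) for n, s in jobs.items()))


def timed(coro_fn, jobs):
    """Run one of the coroutine functions above and measure wall-clock time."""
    start = time.perf_counter()
    result = asyncio.run(coro_fn(jobs))
    return result, time.perf_counter() - start


jobs = {"a": 0.2, "b": 0.2, "c": 0.2}
seq_result, seq_time = timed(one_after_another, jobs)
async_result, async_time = timed(overlapped, jobs)
print(f"one after another: {seq_time:.2f}s")
print(f"overlapped:        {async_time:.2f}s")
```

Because each simulated operation only waits, overlapping them brings the total close to the longest single wait (about 0.2 s here) rather than the sum (about 0.6 s). This is concurrency on one thread, not parallelism: nothing here runs on a second core.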

CPU Core

The CPU core is the main reason concurrency and parallelism came about in the first place. A CPU has either a single core or multiple cores, and many modern systems have 16 or more. Concurrency can work on a single-core CPU, whereas parallelism needs multiple cores. The key difference is that in a concurrent system on one core, the tasks depend on each other because they all share that one processing unit: they have to wait and take turns. In parallelism, each core works independently.

Note that the number of cores determines how many tasks can execute at the same time. A single core, even when running multiple programs, executes one task at a time, while a multicore CPU can run multiple programs at the same instant through multiprocessing. This is how concurrent and parallel systems work.

Flexibility

In practical use, concurrency is more flexible than parallelism. Because a concurrent system works by switching between tasks, it can manage many kinds of tasks and decide which one is more important than another. Parallelism is more about computational performance. Every sub-task runs fast, but because the sub-tasks must be coordinated across cores, adapting to a dynamic working environment can be challenging.

Concurrency vs. Parallelism in Web Scraping

Concurrency and parallelism are two concepts that can be applied to web scraping to improve its efficiency and speed. Concurrency is about managing multiple tasks at once, even if they are not actually executed simultaneously. It is achieved by interleaving the execution of processes on the CPU through context switching, which creates the illusion of parallelism. Concurrency is more about the structure of a program and how it handles multiple tasks at once.

Parallelism, on the other hand, is about executing multiple tasks simultaneously. In a parallel system, tasks are divided into smaller sub-tasks that are processed simultaneously or in parallel, often on multiple processors or cores. This leads to an increase in the throughput and computational speed of the system. Parallelism is more about the execution of a program and how it can speed up processing by doing multiple things at the same time.

In web scraping, both concurrency and parallelism can be used to speed up the process. Concurrency can be achieved using libraries like asyncio in Python, which lets you manage multiple tasks at once without executing them simultaneously. Parallelism can be achieved using the concurrent.futures module in Python: its ProcessPoolExecutor runs tasks in separate processes that can execute simultaneously on multiple CPU cores, while its ThreadPoolExecutor runs tasks in threads, which in the default CPython interpreter are better suited to I/O-bound work, such as downloading pages, than to CPU-bound work because of the Global Interpreter Lock. By applying concurrency and parallelism to web scraping, you can often significantly improve the efficiency and speed of your scraping tasks.
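Here is a minimal sketch of the `concurrent.futures` approach for scraping, assuming the work is dominated by waiting on the network. To keep it runnable offline, `fake_fetch` is a hypothetical stand-in that sleeps instead of making an HTTP request, and the `example.com` URLs are placeholders.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Placeholder page list; a real scraper would use real URLs and an HTTP client.
URLS = [f"https://example.com/page/{i}" for i in range(8)]


def fake_fetch(url: str) -> str:
    """Hypothetical stand-in for an HTTP request: wait, then return 'HTML'."""
    time.sleep(0.2)  # a real request would spend this time waiting for the server
    return f"<html>{url}</html>"


def scrape(urls: list[str], max_workers: int) -> list[str]:
    # Threads suit I/O-bound work: while one thread waits on the "network",
    # others can run. pool.map returns results in the same order as `urls`.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fake_fetch, urls))


start = time.perf_counter()
pages = scrape(URLS, max_workers=8)
elapsed = time.perf_counter() - start
print(f"fetched {len(pages)} pages in {elapsed:.2f}s")
```

With eight workers, the eight simulated 0.2-second requests overlap, so the run takes roughly 0.2 seconds instead of about 1.6. If the expensive part were parsing rather than downloading, `ProcessPoolExecutor` offers the same `map` interface and can use multiple cores.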

Drawbacks of Concurrency and Parallelism

Having covered the parameters on which concurrency and parallelism differ, let's look at the disadvantages of each technique.

Drawbacks of Concurrency

In this section, we look at some disadvantages of building a concurrent system.

1. Deadlock and starvation: Deadlock and starvation are major risks in a concurrent system that is not carefully designed. What do they mean? Deadlock occurs when two or more tasks each wait for a resource that another one holds, so none of them can proceed: task A waits for task B to release a lock, while task B waits for task A. That is why careful coordination, such as always acquiring shared resources in the same order, is crucial in a concurrent system. Starvation occurs when scheduling is unfair, so one task is repeatedly denied CPU time or resources while others keep running.

2. It is non-deterministic: A concurrent system interleaves and switches between tasks, which can sometimes be a challenge. Because the order of the switches can change from run to run, the output can change too, so the output of one run may differ from the next.

3. Complexity in synchronization: Synchronization is often required to manage concurrent tasks, but it is complex to implement. In fact, while setting up synchronization mechanisms such as locks to prevent conflicts between tasks, you can introduce other issues like deadlock.

4. Too many applications or tasks: Running too many tasks can be a disadvantage for a concurrent system, because switching between them adds overhead that slows performance, and the programs have to be protected from one another.

5. Allocation of resources: Allocating resources optimally is difficult. If the OS does not divide time well enough for tasks to run and switch smoothly, performance suffers, and the resulting errors become very hard to locate and debug.
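The deadlock in item 1, and the lock-ordering fix it mentions, can be sketched with Python's `threading` module. This is an illustrative example, not a general deadlock detector: two barriers force the unlucky timing every time, and a timeout on the second `acquire` lets the program report the deadlock instead of hanging forever. The function names `opposite_order` and `same_order` are hypothetical.

```python
import threading


def opposite_order(timeout: float = 0.2) -> list[bool]:
    """Two threads take the same two locks in opposite order.

    Returns, for each thread, whether it managed to get its second lock.
    """
    lock_a, lock_b = threading.Lock(), threading.Lock()
    holding_first = threading.Barrier(2)  # both threads hold one lock each
    both_tried = threading.Barrier(2)     # both threads finished trying
    got_second = [False, False]

    def worker(index: int, first, second) -> None:
        with first:
            holding_first.wait()
            # Each thread now wants the lock the other one holds: a cycle.
            # A real deadlock would wait forever; the timeout lets us detect it.
            if second.acquire(timeout=timeout):
                got_second[index] = True
                second.release()
            both_tried.wait()  # keep holding `first` until the other thread gives up

    threads = [
        threading.Thread(target=worker, args=(0, lock_a, lock_b)),
        threading.Thread(target=worker, args=(1, lock_b, lock_a)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return got_second


def same_order() -> list[bool]:
    """Both threads take lock_a first and lock_b second, so no cycle can form."""
    lock_a, lock_b = threading.Lock(), threading.Lock()
    got_both = [False, False]

    def worker(index: int) -> None:
        with lock_a:
            with lock_b:
                got_both[index] = True

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return got_both


print(opposite_order())  # [False, False]: each thread was stuck waiting for the other
print(same_order())      # [True, True]
```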

Drawbacks of Parallelism

We have looked at some drawbacks that may discourage engineers and programmers from designing a concurrent system. Now let's examine the disadvantages of parallelism.

1. Memory corruption, leaks, and errors: A parallel system needs careful design. You need to know how long each part runs and when the program should stop. If tasks running at the same time access shared memory without proper coordination, errors can occur, and these errors can corrupt data in memory.

2. Difficulty in debugging parallel tasks: Because parallel tasks execute simultaneously, problems can be hard to trace. Since a task is usually divided into sub-tasks, pinpointing the sub-task that causes an issue can be difficult.

3. Complexity in decomposing tasks: In parallelism, tasks are broken down into sub-tasks, and partitioning them well is challenging, so it needs careful analysis and design. Mistakes here, such as uneven chunks that leave some cores idle, reduce speed and performance.

4. Expensive to design: A parallel system costs more than a concurrent system. Parallelism requires specific hardware, such as multicore processors or interconnected computers, and processors with more cores generally cost more. There is also overhead in parallel processing itself, such as starting workers and moving data between them. So although parallelism can reduce execution time, it comes at an extra cost.

5. Synchronization: In parallelism, tasks often need to communicate with each other, which calls for synchronization. This is usually complex and needs a careful setup; if it is not well designed, performance will suffer.
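The synchronization issue in item 5, and the memory corruption risk in item 1, often come down to several workers updating the same data at once. A minimal sketch with Python's `threading.Lock` follows; the `Counter` class and `run` function are illustrative. In the default CPython build, threads do not execute Python bytecode in parallel, but the lost-update problem a lock prevents is the same one that arises when truly parallel workers share memory.

```python
import threading


class Counter:
    """A shared counter whose updates are protected by a lock."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # `self.value += 1` is a read-modify-write; without the lock, two
        # workers could read the same old value and one update would be lost.
        with self._lock:
            self.value += 1


def run(threads: int = 4, increments: int = 10_000) -> int:
    counter = Counter()

    def work() -> None:
        for _ in range(increments):
            counter.increment()

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return counter.value


print(run())  # 40000
```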

There are more drawbacks to concurrency and parallelism, but these are the major ones that can easily disrupt these processes if a system is not well designed.

FAQs

Q. Can a Concurrent System Start a New Task Before the First One Finishes Running?

Yes, a concurrent system can start a new task before another finishes running. The idea of concurrency is managing multiple tasks: hundreds of tasks can be in progress while interleaving with each other. This means a concurrent system is not necessarily a simultaneous system, even if it looks like one. Many people confuse concurrency with parallelism because concurrent systems switch between tasks so quickly. But on a single core, a concurrent system cannot execute two tasks at the same instant. The tasks still finish at different times, and one task can still be running while another one starts.

Q. What are the Main Differences Between Concurrency and Parallelism?

Concurrency deals with managing multiple tasks over overlapping time frames, while parallelism involves executing multiple tasks simultaneously. Concurrent systems commonly use multithreading, whereas parallel systems commonly use multiprocessing. Concurrency is crucial for user interfaces that need to respond to user interactions, while parallelism is mostly used for computational problems that can be divided into sub-tasks. Concurrency is about keeping a program productive without roadblocks; parallelism is about speed and performance. The specific challenges each faces, such as synchronization issues and debugging, also set them apart.

Q. Is Multithreading Parallelism or Concurrency?

Multithreading is primarily a concurrency technique, but it can also provide parallelism. Multithreading is the concurrent execution of more than one thread of instructions: a single program can split its work into multiple threads. On a single-core CPU, those threads take turns, so the result is concurrency only. On a multicore CPU, the OS can schedule threads on different cores at the same time, so they can also run in parallel (some language runtimes, such as the default CPython interpreter, limit this for CPU-bound code). Parallel systems also commonly use multiprocessing, pipelining, and distributed computing, all of which spread work across multiple processing units.

Q. Is It Possible to Have Concurrency But Not Parallelism?

Yes, it is possible to have concurrency without parallelism, and the reverse is also possible. A program can be concurrent but not parallel: more than one task is in progress over the same period, but the tasks do not execute at the same instant; they run in different time slices. A program can also be parallel but not concurrent: a single task is broken into sub-tasks that execute at the same time. This is the core distinction: parallel execution is simultaneous, and concurrent execution need not be.

Conclusion

With everything we have walked through in this article, note that both processing techniques are crucial in modern computing. Each has distinct features that make systems efficient and responsive, and each has its pros and cons. Concurrency allows systems to manage multiple tasks efficiently and stay responsive, while parallelism leverages multiple processing units to deliver high speed for complex computations.

As such, understanding and harnessing these processing concepts is essential for building robust, efficient, and high-performing software systems. In simple terms, a concurrent system is not necessarily parallel, and a parallel program is not necessarily concurrent.