Are you looking for a way to scrape important data points buried in HTML files and documents on the web? Then you are on the right page: this article describes the methods for getting that done.

The Internet is a huge library of data that is important to businesses, researchers, and governments. Data ranging from customer reviews of products to human sentiments on societal issues, and even some IoT-generated data can be found online.

In fact, the Internet is currently the largest source of data accessible to all. However, the data is not available in the form you might want. It is usually buried within HTML documents, the format in which web pages are written.

You will need to download these documents and parse the data out of them. If the documents are well written and structured, extracting data from them via scraping is easy.

However, some HTML pages are complex and messy, and extracting data from them is not an easy task. Regardless of how messy an HTML document is, you can scrape data from it with the right skills and tools. This article shows you how to scrape HTML data.

What is HTML Data Scraping?

HTML data scraping is the process of extracting important data points from HTML web pages. This involves using specialized web automation bots, known as web scrapers, to download the raw HTML of the pages and then using parsers to traverse that HTML and extract the data points that interest you.

Data and information on web pages are usually enclosed in HTML documents in structures known as HTML elements. While the process of scraping data from HTML pages sounds easy in theory, it can be difficult and complicated in practice.

This is because many websites have anti-bot systems that discourage bot access and prevent scraping. If your target website is protected by any form of anti-bot system, you will need to know how to evade it in order to scrape its data successfully.

In the past, you needed to be a coder to scrape data from HTML pages. This is no longer the case, as there are no-code scraping tools that make it effortless. There are also professional data services that specialize in web scraping.

Ways to Scrape HTML Data

There are many methods you can use to scrape HTML data. As a coder, you can develop a custom scraper or integrate a ready-made one into your code. If you are not a coder, there are no-code scraping tools you can use. There is also the option of delegating the task to a data service.

How to Scrape HTML Data for Coders

If you are a coder, you have plenty of options when it comes to scraping HTML data, because there are plenty of tools available. Some provide full-fledged scraping solutions, while others cover only one part of the job. Let's take a look at each of these below.

Web Scraping Libraries and Frameworks

Most programming languages can be used to scrape data from the web. All that is required is a way to send HTTP requests and a way to parse data out of raw HTML. If you can do these two things in your programming language of choice, you will be able to scrape HTML data.
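To illustrate that these two ingredients really are enough, here is a minimal sketch that uses only Python's standard library: `urllib.request` downloads a page and `html.parser.HTMLParser` walks its HTML elements. The names `fetch_html`, `LinkExtractor`, and `extract_links` are hypothetical, chosen for illustration, and the User-Agent string is a placeholder.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Ingredient 1: send an HTTP GET request and return the raw HTML as text."""
    # The User-Agent value is a placeholder; some sites reject urllib's default one.
    req = Request(url, headers={"User-Agent": "Mozilla/5.0 (compatible; example-scraper)"})
    with urlopen(req, timeout=timeout) as resp:
        # Fall back to UTF-8 when the server does not declare a charset.
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


class LinkExtractor(HTMLParser):
    """Ingredient 2: walk the HTML elements and collect (text, href) for each <a> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href: str | None = None  # href of the <a> we are currently inside
        self._text: list[str] = []

    def handle_starttag(self, tag: str, attrs: list[tuple[str, str | None]]) -> None:
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data: str) -> None:
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag: str) -> None:
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def extract_links(html: str) -> list[tuple[str, str]]:
    """Parse an HTML string and return its links; anchors without href are skipped."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links
```

Against a live page you would combine the two steps, for example `extract_links(fetch_html("http://www.example.com"))`. Keeping fetching and parsing separate also lets you test the parser on saved HTML without touching the network.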

Popular programming languages have libraries and frameworks that make it easy to scrape data from the web. One thing to note is that these libraries and frameworks are language-specific.

So, the libraries available to Python developers are not the same as those a Java coder has access to. Because of this, we cannot cover every library and framework for every programming language.

However, we can take a look at popular libraries and frameworks for a few popular programming languages.

Python Libraries and Frameworks for Web Scraping

Python is arguably the most popular language for web scraping because of its simple syntax, easy-to-learn nature, and large ecosystem of scraping libraries. Below are some of the popular web scraping tools you can use to scrape data from HTML.

1. Requests and BeautifulSoup

These are actually two tools. The Requests library is an easy-to-use library for sending HTTP requests, and it is used for downloading HTML web pages. The BeautifulSoup library is the extraction library. It sits on top of a parser and makes it easy to traverse HTML elements and extract important data points. Together, these two are the easiest option to learn and use.

To scrape HTML data, you will need a web scraping library or framework such as BeautifulSoup, lxml, or Selenium. Here is a simple example of how to use the BeautifulSoup library to scrape HTML data from a web page:

- Install the Requests and BeautifulSoup libraries using pip:

```
pip install requests beautifulsoup4
```

- Import the necessary modules in your Python script:

```python
import requests
from bs4 import BeautifulSoup
```

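- Use the requests module to make a GET request to the website you want to scrape data from. For example:

```python
url = "http://www.example.com"
response = requests.get(url, timeout=10)  # give up if the server does not answer within 10 s
response.raise_for_status()  # raise an error on 4xx/5xx instead of parsing an error page
```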

- Use BeautifulSoup to parse the HTML content of the response. For example:

```python
soup = BeautifulSoup(response.content, "html.parser")
```

- Use the BeautifulSoup object to extract the data you want. For example, to scrape all of the links on the page, you could use the find_all() method like this:

```python
links = soup.find_all("a")
```

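- Use a for loop to iterate through the list of links and print out the text and URL for each link. For example:

```python
for link in links:
    # .get() returns None instead of raising KeyError when an <a> tag has no href
    print(link.get_text(strip=True), link.get("href"))
```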

This is just a basic example, but it should give you a good starting point for scraping HTML data using the BeautifulSoup library. For more information and examples, you can refer to the official documentation for BeautifulSoup.

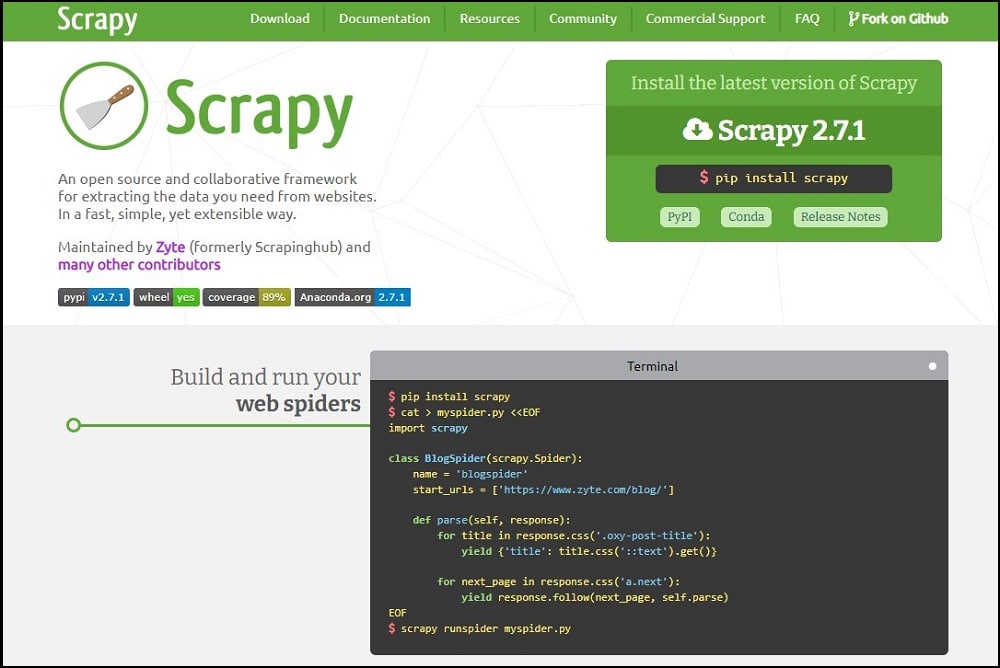

2. Scrapy

Scrapy is a full-fledged framework for web scraping. It comes with an HTTP client, a parser, and the other tools necessary for web scraping. It can be difficult for beginners to learn, but it provides the most features, and it is well suited to building fast, scalable web scrapers and crawlers.

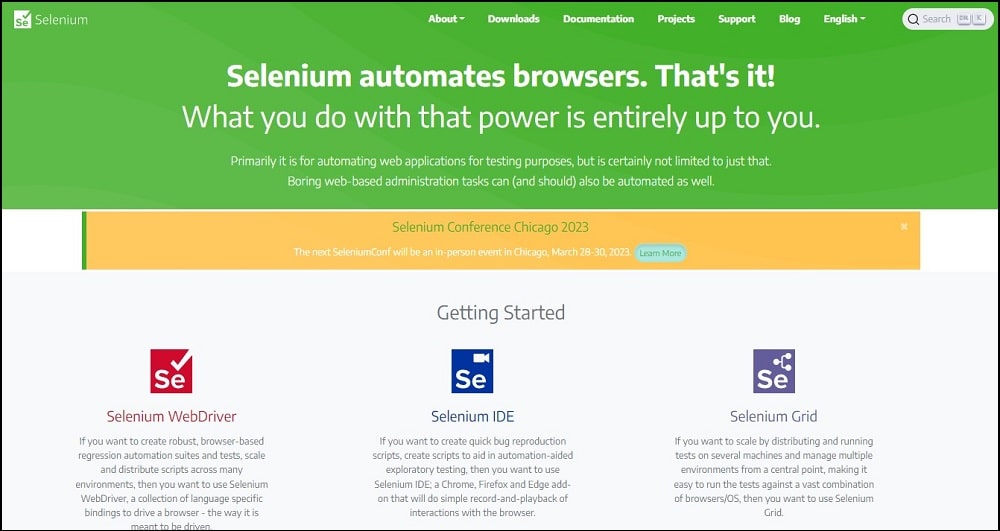

3. Selenium

One thing you need to know about the two options above is that they do not render JavaScript, so they cannot scrape content that JavaScript-heavy pages load dynamically. For such pages, you will need a tool that can automate web browsers, and Selenium is a popular choice for that in Python. You can use it to automate popular web browsers, open target web pages, render the JS, and then extract the required data. Because it drives a full browser, it is the slowest of the three.
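Before reaching for browser automation, it can help to check whether the data you want is already present in the raw HTML that a plain HTTP request returns. The sketch below is a rough heuristic rather than a reliable detector, and the helpers `visible_text` and `looks_js_rendered` are hypothetical names: it strips scripts, styles, and tags from the raw HTML and checks whether a value you can see in the browser survives.

```python
import re
from html import unescape


def visible_text(html: str) -> str:
    """Roughly approximate a page's static text: drop <script>/<style> blocks, then all tags."""
    html = re.sub(r"(?is)<(script|style)\b.*?</\1>", " ", html)
    text = re.sub(r"(?s)<[^>]+>", " ", html)
    # Decode entities such as &amp; and collapse runs of whitespace.
    return " ".join(unescape(text).split())


def looks_js_rendered(raw_html: str, expected_text: str) -> bool:
    """True if text you can see in the browser is missing from the static HTML."""
    return expected_text not in visible_text(raw_html)
```

If `looks_js_rendered` returns True, Requests and BeautifulSoup alone will probably not see the data, and a browser tool like Selenium is the better fit. One caveat: when the value only appears inside a `<script>` tag (for example, as embedded JSON), you can sometimes extract it without a browser.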

NodeJS Libraries and Frameworks for Web Scraping

NodeJS is another popular option for web scraping, and there are tools that make it easy. Some developers even prefer it for scraping, since JavaScript is the language of the web and is used on both the frontend and the backend.

Below are some of the best libraries for scraping HTML data.

1. Axios and Cheerio

Just as Python has Requests and BeautifulSoup, NodeJS has Axios and Cheerio. Axios sends HTTP requests to download HTML pages, while Cheerio extracts data from the downloaded HTML documents.

These two are very fast but should only be used for scraping static HTML pages. If a page needs JavaScript to render its content, they are not the right tools for the job.

2. Puppeteer

For pages that require JavaScript rendering to display content, Puppeteer is the tool for the job. Puppeteer is a high-level API for automating Chrome and Chromium. With it, you can open web pages, render JS, and scrape the required content.

Alternatives include Playwright, which supports Chromium, Firefox, and WebKit, and Selenium, which supports all the major browsers. Both Playwright and Selenium also offer bindings for several programming languages, including Python and Java.

Java Libraries and Frameworks for Web Scraping

Java is not popular among beginner web scrapers. However, it can deliver strong scraping performance compared with Python and NodeJS. Below are some of the tools available for scraping:

1. Jsoup

Jsoup is a good starting point that gets the job done. Many Java developers find Java's built-in HTTP client adequate for downloading HTML pages, so fetching is rarely the problem. The harder part is extracting important data points, and that is what Jsoup does well. It gives you a jQuery-like interface for extracting data with CSS selectors.

2. Selenium

As with Python, if the pages you need to scrape require JavaScript rendering, Java's HTTP client won't help much. You can use Selenium to automate the popular browser of your choice in order to render content for scraping.

Web Scraping APIs for Developers

As a coder, you can also use ready-made web scrapers to extract important data from web pages. Some are available as web scraping libraries, while others are offered as web scraping APIs. With a web scraping API, all you need to do is send a web request and get back a response.
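In practice, calling a scraping API usually means passing your API key and the target URL as query parameters to the provider's endpoint. The sketch below assumes a hypothetical endpoint (`https://api.scraping-provider.example/v1/`) and hypothetical parameter names (`api_key`, `url`, `render_js`); each real provider defines its own, so check their documentation before adapting it.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Hypothetical endpoint and parameter names; real providers each define their own.
API_ENDPOINT = "https://api.scraping-provider.example/v1/"


def build_api_url(api_key: str, target_url: str, render_js: bool = False) -> str:
    """Build the request URL; urlencode percent-encodes the target URL so its own ?/& survive."""
    params = {"api_key": api_key, "url": target_url}
    if render_js:
        params["render_js"] = "true"  # ask the service to render the page in a headless browser
    return f"{API_ENDPOINT}?{urlencode(params)}"


def scrape_via_api(api_key: str, target_url: str, render_js: bool = False) -> str:
    """Send one GET request to the API and return the HTML it fetched on our behalf."""
    # Proxies and JS rendering happen on the provider's side, so allow a generous timeout.
    with urlopen(build_api_url(api_key, target_url, render_js), timeout=60) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

The returned HTML can then go to any parser, such as BeautifulSoup. Percent-encoding the target URL matters: without it, a target such as `page?id=1&sort=asc` would have its `&sort=asc` read as a parameter of the API call itself.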

You will not need to handle blocks yourself, as these services take care of proxies, run headless browsers for JS rendering, and bypass CAPTCHAs. If you need a specialized web scraper for your target website, check GitHub: there are many of them, especially for popular websites such as Google, Facebook, Twitter, Instagram, Amazon, eBay, Booking, Reddit, and the like.

This section focuses on web scraping APIs, which make web scraping much easier. When you develop a custom web scraper, you have to deal with blocks and CAPTCHAs yourself; with a scraping API, the service handles them for you. Below are some of the popular web scraping APIs on the market.

- ScraperAPI: Best Web Scraping API

ScraperAPI is arguably the best web scraping API on the market. It has very strong anti-block support, enabling it to scrape even web pages protected by Cloudflare and PerimeterX.

The service uses datacenter, residential, and mobile proxies under the hood, depending on the option you choose and your target website. ScraperAPI also renders JS. However, it does not provide a parser and can't be used for scraping Facebook and Instagram.

- ScrapingBee: Best ScraperAPI Alternative

ScrapingBee shines where ScraperAPI falls short. You can use ScrapingBee for scraping both Facebook and Instagram. It is also more than a proxy API: it comes with extraction support that lets you scrape data using CSS selectors.

However, its anti-blocking system is not as effective as ScraperAPI's, so you could experience some blocks on difficult-to-access websites.

- WebScrapingAPI: Fastest Scraping API

WebScrapingAPI is quite similar to ScrapingBee, but it has one major advantage: speed. At the time of writing, it is the fastest web scraping API, faster even than ScraperAPI.

Despite its speed, it is also quite effective and keeps blocks to a minimum. It does not support mobile IPs for the time being. Its pricing is comparable to that of ScrapingBee and ScraperAPI, and you only pay for successful requests.

How to Scrape HTML Data for Non-Coders

You do not need to be a coder to scrape data from the web. There are tools for non-coders that you can use to scrape HTML data without writing a single line of code. These are known as no-code tools, and they are becoming increasingly popular as more organizations rely on data-driven decision-making.

There are basically two types of no-code scraping tools. The first is visual web scrapers with a point-and-click interface, and the second is specialized web scrapers.

Let's take a look at these two.

- Visual General-Purpose Web Scrapers

There are many visual web scrapers out there you can use. These tools provide a built-in browser and a point-and-click user interface. The browser is for accessing web pages, while the point-and-click interface is for identifying and selecting important data points. If you click on a piece of data, say the name of a product on an Amazon product search page, all the other product names on the page are highlighted.

They also support pagination. Some of the popular visual web scrapers with point-and-click interfaces include Octoparse, ParseHub, ScrapeStorm, WebHarvy, and Helium Scraper. All of these are paid tools, and their free trials are highly limited.

- Specialized Web Scrapers for Non-coders

Visual web scrapers are general-purpose and can be used on all kinds of websites. If you do not want to deal with point-and-click operations and need a simpler tool, a specialized web scraper is the option left to you. These scrapers target specific websites, so setting them up is much easier.

For example, if you want to scrape Amazon, there are specialized web scrapers where all you need to provide is the product's ASIN code, and you get the product's details back. The same procedure applies to scraping tweets, social profiles, and pages, among others.

Bright Data's Data Collector is one of the best tools for this. Another option is PhantomBuster. These tools are easy to use and quite affordable for your data needs.

FAQs About HTML Data Scraping

Q. Do I Need Proxies for Scraping HTML Data?

In most cases, yes. Without proxies, you will quickly exceed the request limits set by websites, and when that happens, you will be blocked from further access. While you can use proxies of your choice, I recommend rotating residential proxies, as they are among the hardest proxies to detect.

Bright Data, Smartproxy, and Soax are popular providers of these. However, if you only need to scrape a few pages, you can set delays between requests and scrape without proxies. Proxies are also required for scraping geo-targeted web data.
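Rotating proxies boils down to sending successive requests through different proxy servers, with a pause between requests. Here is a minimal sketch built on the standard library's `ProxyHandler`; the proxy addresses are placeholders from a documentation-only IP range, and `crawl_with_rotation`, with its injectable `fetch` parameter, is a hypothetical helper rather than any provider's API. Commercial rotating-proxy services often give you a single gateway address that rotates IPs for you, in which case a one-item list is enough.

```python
import itertools
import time
from typing import Callable, Iterable, Iterator, List, Tuple
from urllib.request import OpenerDirector, ProxyHandler, build_opener

# Placeholder proxies (TEST-NET addresses); replace them with your provider's endpoints.
PROXIES = [
    "http://192.0.2.10:8000",
    "http://192.0.2.11:8000",
]


def opener_for(proxy: str) -> OpenerDirector:
    """Build a urllib opener that routes both HTTP and HTTPS traffic through one proxy."""
    return build_opener(ProxyHandler({"http": proxy, "https": proxy}))


def default_fetch(url: str, proxy: str) -> str:
    """Download url through the given proxy and return the body as text."""
    with opener_for(proxy).open(url, timeout=15) as resp:
        return resp.read().decode("utf-8", errors="replace")


def crawl_with_rotation(
    urls: Iterable[str],
    proxies: List[str],
    delay: float = 2.0,
    fetch: Callable[[str, str], str] = default_fetch,
) -> Iterator[Tuple[str, str, str]]:
    """Yield (url, proxy, html), cycling through proxies and pausing between requests."""
    proxy_pool = itertools.cycle(proxies)
    for i, url in enumerate(urls):
        if i > 0:
            time.sleep(delay)  # throttle so the target does not see a burst of requests
        proxy = next(proxy_pool)
        yield url, proxy, fetch(url, proxy)
```

Because `fetch` is a parameter, you can swap in a Requests-based function, or a fake one for testing, without changing the rotation logic. For a handful of pages, passing a single proxy (or none at all, with a plain fetch function) and a longer `delay` matches the advice above.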

Q. Is Scraping HTML Data Legal?

Scraping HTML data is generally legal as long as the data of interest is publicly available on the web and not hidden behind passwords or paywalls. However, scraping data from pages protected by passwords can be illegal.

You are advised to seek advice from a competent legal practitioner, as nothing written here should be taken as legal advice. You can refer to the hiQ Labs v. LinkedIn case to learn more about the legalities of scraping data from online sources.

Q. How to Avoid Getting Blocked While Scraping HTML Data?

Some ready-made web scrapers avoid blocks on your behalf, without any extra work from you. These include web scraping APIs and specialized web scrapers for non-coders. With the rest, you will need to handle blocks yourself.

Visual web scrapers use many techniques under the hood but still require you to configure proxies and, sometimes, anti-CAPTCHA tools. If you are developing a custom web scraper, you will have to handle blocks yourself. Using undetectable rotating proxies, setting delays between requests, and spoofing user agents, among other techniques, will help you avoid blocks.
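Spoofing user agents and adding delays can be combined in a small helper. This is a sketch under stated assumptions: the User-Agent strings are illustrative examples that go stale over time, and `random_headers`, `jittered_delay`, and `polite_get` are hypothetical names. It picks a random User-Agent per request and sleeps for a randomized interval, so requests do not arrive at a fixed, bot-like rhythm.

```python
import random
import time
from typing import Dict, Optional
from urllib.request import Request, urlopen

# Illustrative desktop browser User-Agent strings; refresh these periodically.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.1 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def random_headers(rng: Optional[random.Random] = None) -> Dict[str, str]:
    """Pick a random User-Agent and pair it with a typical Accept-Language header."""
    rng = rng or random.Random()
    return {"User-Agent": rng.choice(USER_AGENTS), "Accept-Language": "en-US,en;q=0.9"}


def jittered_delay(base: float, jitter: float, rng: Optional[random.Random] = None) -> float:
    """Return base seconds plus a random extra between 0 and jitter."""
    rng = rng or random.Random()
    return base + rng.uniform(0, jitter)


def polite_get(url: str, base_delay: float = 1.5, jitter: float = 2.0) -> str:
    """Sleep a randomized interval, then fetch url with a randomly chosen User-Agent."""
    time.sleep(jittered_delay(base_delay, jitter))
    req = Request(url, headers=random_headers())
    with urlopen(req, timeout=15) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

Keep in mind that many anti-bot systems inspect far more than the User-Agent header (TLS fingerprints, cookies, JavaScript challenges), so this helps against simple rate limits but will not get past serious protection on its own.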

Conclusion

Unlike in the past, the availability of data is no longer an issue, thanks to the Internet. There is an enormous amount of data online, and all you have to do is collect it. Web data is contained in HTML documents, and with the right tools, like the ones described above, you can scrape the data you need.

As you can see, whether you are a coder or not, there is a tool you can use to scrape HTML data. Before you start, though, it is recommended that you consider the legal implications and, please, be nice.