Are you looking to scrape data from a website into Google Sheets? Then you are on the right page, as the article below provides an easy guide on how to get that done.

Most times, when we hear of web scraping, our minds go to custom scripts or full-fledged programs built to collect data from the web. More often than not, the collected data ends up stored in a Google Sheets file. Interestingly, unless your scraper requires some complex logic, you might not need any of the full-fledged options or a custom-developed scraper. You can just use Google Sheets to pull data from the websites you want, and the support for this is native.

In this article, I will show you all you need to know about pulling data from websites with Google Sheets. This includes how to get it done, the benefits of using Google Sheets to collect data, and why you will want to use it. You will also learn the scenarios when the functions provided might not be efficient enough for you — in which case, you will have to make use of a custom script for scraping or use one of the already-made options in the market.

Pulling Data from Websites Using Google Sheets — an Overview

Google Sheets is a free, web-based spreadsheet application that you can use to manipulate data and do computations. Data is presented in rows and columns, and the application supports both simple and advanced mathematical and statistical calculations. Traditionally, that is what it is meant to do.

However, in recent times, businesses have found themselves needing to obtain data from remote sources on the Internet and use it in making their business decisions. Google took note of this and added functions to Google Sheets that make this easy. Scraping data with Google Sheets is done using Google Sheets functions, the same kind you enter in the formula bar to do computations and data manipulations. However, each of them has a specific syntax you need to follow.

It is important to repeat that if your web scraping task is complex, then this would be inefficient. And moreover, you will still need proxies if your tasks are sending too many web requests to a website, as Google Sheets still presents your real IP address to your target website.

Benefits of Using Google Sheets to Scrape Data from the Internet

Data Is Fresh and Updated

One of the main advantages of using Google Sheets to scrape data from the Internet is that the data you scrape is fresh and updated. As long as you keep the tab open and stay connected to the Internet, it will refresh the data by sending a web request to get the updated data for you. This is a step ahead of the other methods of data collection and makes it a good fit for near-real-time data analysis, as opposed to collecting data once.

Easy to Use

You do not require coding knowledge to use Google Sheets for web scraping. All you need is to learn how to use the importing functions to collect data from your target. You might need to pick up a few related concepts, such as XPath or HTML elements. But this is easy to do and does not require you to learn a programming language from scratch.

Good for Simple Scraping Tasks

Sometimes, all you need is to get a table from a page or the data from a CSV file. This does not warrant launching your IDE as a developer to start writing code. You can just make use of the Google Sheets scraping functions and get that done. With just a function, the URL of the page, and the path to the element or the element type, you get the data scraped.

Functions to Scrape Data Using Google Sheets

The formula bar, which is found at the top of the spreadsheet, is where you will enter the functions that scrape data from websites of interest. The method is so simple and straightforward that even a non-coder can learn it. You won't need to learn any programming language to get started, because all it uses is functions. You enter a function in the formula bar and run it, and it gets the scraping done for you automatically. There is no single function but a group of functions for web scraping, each with its own specific purpose. Let's take a look at some of the popular Google Sheets functions for web scraping.

IMPORTHTML

IMPORTHTML is the most popular function for scraping data from web pages to Google Sheets. The syntax for using this function is simple and straightforward.

=IMPORTHTML(url, query, index)

Here, url is the URL of the page that contains the data you want to scrape, query is the type of element you want to scrape (either "table" or "list"), and index specifies which table or list you want, counted in the order it appears on the page. The first table is 1, the second table is 2, and so on.

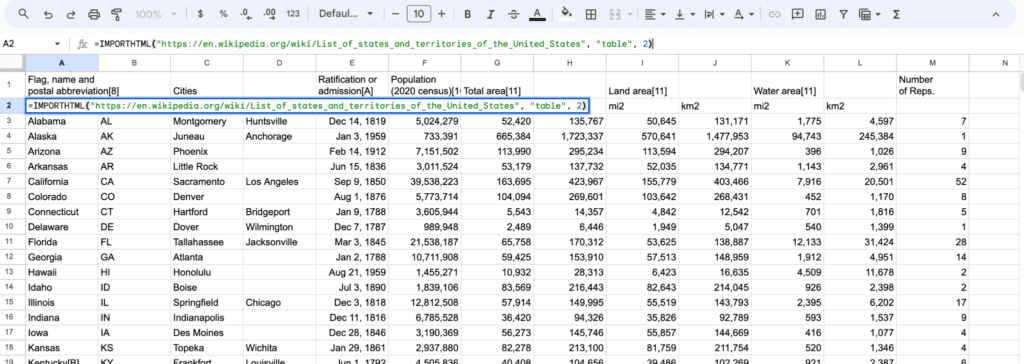
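For readers who want a feel for what the three arguments do, here is a minimal Python sketch using only the standard library. It is not how Google implements IMPORTHTML; the class `TableExtractor`, the helper `import_html_table`, and the sample HTML are all made up for illustration. It collects the text of every table in a page and returns the one at a 1-based index, ignoring nested tables for simplicity.

```python
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collects the cell text of every <table> in a document (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.tables = []   # list of tables; each table is a list of rows
        self._row = None   # row currently being filled, if any
        self._cell = None  # text fragments of the current cell, if any

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only text inside a cell is kept; link targets and images are ignored.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None


def import_html_table(html, index):
    """Return the table at a 1-based index, like the index argument of IMPORTHTML."""
    parser = TableExtractor()
    parser.feed(html)
    return parser.tables[index - 1]


# Made-up sample page with two tables and a link inside a cell.
SAMPLE_HTML = """
<table><tr><th>Skip</th></tr></table>
<table>
  <tr><th>State</th><th>Capital</th></tr>
  <tr><td><a href="/texas">Texas</a></td><td>Austin</td></tr>
</table>
"""

rows = import_html_table(SAMPLE_HTML, 2)
print(rows)
```

Asking for index 2 skips the first table entirely, and the link around "Texas" is reduced to its text.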

The IMPORTHTML function is meant for scraping data from two kinds of elements on a page: tables and lists. You will not need to worry about cleaning the result, as Google Sheets does that for you. Let's take a look at how to make use of this function to collect data for the table below.

=IMPORTHTML("https://en.wikipedia.org/wiki/List_of_states_and_territories_of_the_United_States", "table", 2)

If you run the above formula correctly, you will get the spreadsheet populated with the list of US states and their associated data, as shown on Wikipedia.

The function will only scrape text for you and will disregard the hyperlinks and images. Let’s show you what to expect if you run the code above. Below is a screenshot as it appears when I ran the code.

IMPORTXML

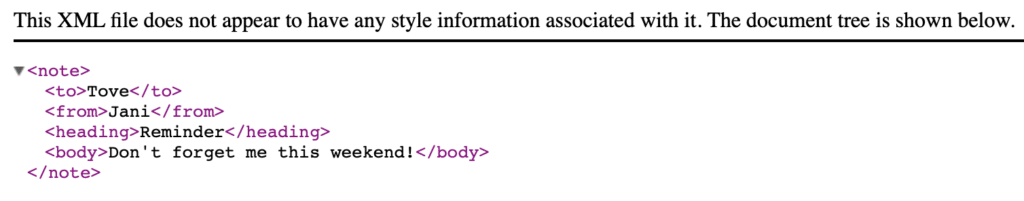

The above method is meant for scraping data from HTML pages. In the vast majority of cases, your target web pages will be written in HTML, as that is the de facto standard. However, there are instances where your target will be an XML document. In most cases, XML files are used as a data storage or exchange format rather than to define the structure of a page. For these, the above function won't work, and you will have to use the IMPORTXML function instead. Below is the syntax of the IMPORTXML function.

=IMPORTXML(url, xpath_query)

As you can see, the parameters here are url and xpath_query. The URL is self-explanatory, but the XPath query needs some explanation. XPath is a language that uses path-like syntax to locate elements in an XML document. In the example I will give below for IMPORTXML, the XPath to get who a note was sent to is ("//note/to"). Below is the content of the page as of the last time I viewed the page.

As you can see above, the XML file contains a note with details such as to, from, heading, and body. The XPath to scrape the recipient of the note is ("//note/to"). You will need a guide on XPath to learn how to use it comprehensively; the XPath tutorial on the W3Schools website is a good place to start. The formula to scrape the recipient of the note above is as follows.

=IMPORTXML("https://www.w3schools.com/xml/note.xml", "//note/to")

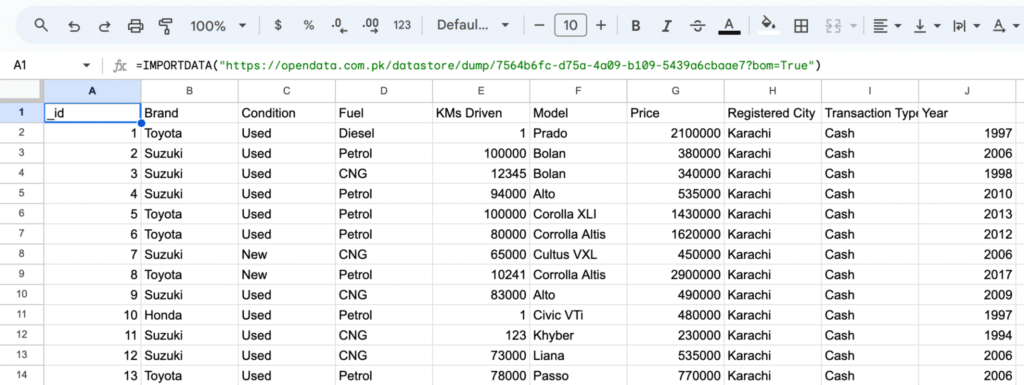
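To see how a path expression picks elements out of an XML document, here is a small Python sketch using the standard library's `xml.etree.ElementTree`. The sample document and the helper `select_text` are made up for illustration, loosely shaped like the note described above. ElementTree supports only a subset of XPath and accepts relative paths only, so `.//note/to` is used here as the counterpart of `//note/to`.

```python
import xml.etree.ElementTree as ET

# Made-up sample document shaped like the note described above.
SAMPLE_XML = """
<notes>
  <note>
    <to>Alice</to>
    <from>Bob</from>
    <heading>Reminder</heading>
    <body>Call me tomorrow.</body>
  </note>
  <note>
    <to>Carol</to>
    <from>Dave</from>
    <heading>Invoice</heading>
    <body>Payment received.</body>
  </note>
</notes>
"""


def select_text(xml_text, path):
    """Return the text of every element matched by an ElementTree path."""
    root = ET.fromstring(xml_text)
    return [element.text for element in root.findall(path)]


# ElementTree only accepts relative paths, so ".//note/to" stands in for "//note/to".
recipients = select_text(SAMPLE_XML, ".//note/to")
print(recipients)
```

Changing the last step of the path, for example to `.//note/heading`, selects a different field from every note.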

IMPORTDATA

IMPORTDATA is another easy-to-use function. It is meant for scraping data from CSV or TSV files. If you have the URL of a CSV file that contains the data you want to copy to Google Sheets, you can use the IMPORTDATA function to get that done. It is the simplest of all the functions for scraping data in Google Sheets.

This is because it takes just the URL of the file as an argument. Below is the syntax of the IMPORTDATA function for pulling CSV or similar data from a website into Google Sheets.

=IMPORTDATA(url)

Let's say I want to scrape the details of cars as presented on this page (https://opendata.com.pk/dataset/pakistan-used-cars/resource/7564b6fc-d75a-4a09-b109-5439a6cbaae7). With the way it is presented here, one could use the IMPORTHTML and then pass the element you want to scrape as a table, and that should do the magic. But here, what I want to show you is how to scrape CSV files. So, click on the download button on the top-right side of the page and then right-click on the CSV option and copy the link. Now pass it as the argument for IMPORTDATA as seen below.

=IMPORTDATA("https://opendata.com.pk/dataset/pakistan-used-cars/resource/7564b6fc-d75a-4a09-b109-5439a6cbaae7")

If you run the above, you should see the data in your Google Sheets document, as seen below.
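For comparison, this is roughly what importing a delimited file looks like in a script. The sketch below uses Python's standard `csv` module; the helper names `fetch_text` and `parse_delimited` and the sample data are made up for illustration, and the download helper assumes the file is UTF-8 encoded.

```python
import csv
import io
import urllib.request


def fetch_text(url):
    """Download a URL and decode it as UTF-8 (assumes the file is UTF-8 encoded)."""
    with urllib.request.urlopen(url) as response:
        return response.read().decode("utf-8")


def parse_delimited(text, delimiter=","):
    """Split CSV or TSV text into a list of rows, one list of cells per row."""
    return list(csv.reader(io.StringIO(text), delimiter=delimiter))


# Made-up sample data; with a real file you would call parse_delimited(fetch_text(url)).
SAMPLE_CSV = 'make,model,price\nToyota,Corolla,"1,200,000"\nHonda,Civic,"1,500,000"\n'

rows = parse_delimited(SAMPLE_CSV)
print(rows)
```

Passing `delimiter="\t"` handles TSV files the same way, and quoted cells that contain commas stay in one piece.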

Limitations of Using Google Sheets for Web Scraping

Non-Scalable

If you are conversant with the above, you can tell it can go a long way in helping you pull data from websites. But can it help you in all situations? What happens if you need to collect tables from hundreds of pages? This will involve you doing manual tasks, right? Well, that is one of the downsides of this method. It is meant for scraping one-off data. If you have to scrape from one page to another, you will have to manually copy the URLs and scrape the pages one after the other. This makes it a non-scalable web scraping solution.
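By contrast, a custom script can walk through many pages in a loop. The following Python sketch shows the shape of such a loop; the URL template, `build_page_urls`, `scrape_many`, and the stand-in `fake_fetch` and `fake_parse` functions are all hypothetical and exist only so the example runs without network access.

```python
def build_page_urls(template, first_page, last_page):
    """Fill a URL template with consecutive page numbers."""
    return [template.format(page=page) for page in range(first_page, last_page + 1)]


def scrape_many(urls, fetch, parse):
    """Fetch and parse every URL, collecting all parsed rows into one list."""
    all_rows = []
    for url in urls:
        all_rows.extend(parse(fetch(url)))
    return all_rows


# Stand-ins so the sketch runs without network access; a real scraper would
# download each page in fetch() and extract its table rows in parse().
def fake_fetch(url):
    return "row from " + url


def fake_parse(page):
    return [page]


urls = build_page_urls("https://example.com/listings?page={page}", 1, 3)
rows = scrape_many(urls, fake_fetch, fake_parse)
print(rows)
```

Swapping the stand-ins for real download and parsing functions is all it takes to scale the same loop to hundreds of pages.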

Only GET Requests Supported

If you are conversant with the HTTP methods, you will know there are the GET, POST, DELETE, and PUT methods, among others. The Google Sheets IMPORT series of functions supports only GET. If you need to send a POST request before you can access your target, then Google Sheets scraping is not for you. You will need to custom-develop a web scraper with a programming language or use an already-made scraper.
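As an illustration of what the IMPORT functions cannot do, here is a minimal Python sketch that prepares a POST request with the standard library. The endpoint and form fields are hypothetical, and the request is only built, not sent, so the example runs offline.

```python
import urllib.parse
import urllib.request


def build_post_request(url, form_fields):
    """Create (but do not send) a POST request carrying URL-encoded form data."""
    body = urllib.parse.urlencode(form_fields).encode("utf-8")
    return urllib.request.Request(url, data=body, method="POST")


# Hypothetical endpoint and form fields, for illustration only.
request = build_post_request("https://example.com/search", {"query": "used cars", "page": 1})
print(request.get_method(), request.data)

# Sending it would look like this (left commented out to avoid a network call):
# with urllib.request.urlopen(request) as response:
#     html = response.read().decode("utf-8")
```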

Custom Header and Proxy Not Supported

Remember I mentioned you can only do simple tasks with it? When you need to customize the request headers, it is not an option, as there is no support for that. It sends its own user agent string, which can get blocked. To make things worse, there is no support for proxies. This means you can get blocked if you scrape too much from a target. This lack of customizability is one reason you might want to opt for a more flexible web scraping solution rather than sticking to this one.
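In a custom script, both of these are a few lines of setup. The Python sketch below uses the standard library's `urllib.request`; the user agent string, the proxy address, and the helper names `build_request` and `build_proxy_opener` are hypothetical placeholders. Nothing is sent over the network.

```python
import urllib.request


def build_request(url, user_agent):
    """Create a GET request with a custom User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def build_proxy_opener(proxy_url):
    """Create an opener that routes HTTP and HTTPS traffic through a proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Hypothetical values; substitute a real proxy address and user agent string.
request = build_request("https://example.com/data", "my-scraper/1.0")
opener = build_proxy_opener("http://127.0.0.1:8080")
print(request.get_header("User-agent"))

# Sending the request through the proxy (commented out to avoid a network call):
# with opener.open(request) as response:
#     html = response.read().decode("utf-8")
```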

No Complex Logic

For many scraping tasks, reaching your target is easy, and you don't have to do much. If that describes your target and you need just a GET request to access your data point, then there is no problem. But the moment you need to write complex logic, fill in forms, press buttons, mimic human interactions, and so on, it is time to look for an alternative.

Best Alternatives to Google Sheets Data Scraping

As stated, scraping data from the Internet using Google Sheets is only practical for simple scraping tasks. For complex ones, or those that require scraping many pages, Google Sheets is not a good fit. What are the alternatives, then?

Custom Develop a Scraper

If you are a coder, you can develop a web scraper to scrape any data on the Internet with the programming language you are most proficient in. However, if you are looking for recommendations, Python is quite popular and easy to learn. There are libraries and frameworks that will ease your work. Requests and BeautifulSoup are the go-to options. But if you need to scrape data that only appears after JavaScript runs, they won't work on their own, and Selenium will do the job. For a full-fledged scraping framework, you can use Scrapy.

Use No-Code Scrapers

If you are not a coder, you can use no-code scraping tools. These are web scrapers you can use without writing a single line of code. Octoparse, ScrapeStorm, and the WebScraper.io extension are popular options. Each of these gives you a point-and-click interface with which you identify your target data points to train the tool on the data you want to collect. Some of them are even AI-powered and can identify important data points on a page for you automatically.

Use Web Scraping APIs

Sometimes, even as a developer, you might not want to go through the stress of developing a web scraper and managing it. In this case, you can make use of a web scraping API. This is a service that handles proxies, captchas, headless browsers, and all forms of blocks for you. You just send a web request, and it returns the content of the page for you to parse. Some even come with support for parsing, where you define the selector of your target data. Others are built for a specific website, so there is no need to define selectors, and they return the important data as JSON. ScraperAPI, ScrapingBee, and the Smartproxy scraping APIs are the major players here.

Use a Professional Data Service

If none of the above alternatives works for you, then the last option is to pay a professional data service to provide you with the data you need. There are different platforms where you can find developers and data professionals ready to do this. You can make use of freelance platforms such as Upwork or Fiverr, or use a professional data service like the one provided by Octoparse.

FAQs About Google Sheets Scraping

Q. Is Scraping Data with Google Sheets Ethical?

The way the tool is designed makes scraping with it somewhat ethical in itself, and the rest is left to you. First, you can't overload a site with requests unless you automate that using some form of script (Apps Script) or a browser extension. Aside from this, there is no support for customizing the request headers, so websites can tell you are accessing them via Google Sheets. There is also no support for proxies, which means a website can decide to block you, although there are workarounds for this.

Q. What are the Common Errors Associated with Using Google Sheets Scraping Functionality?

One of the most common errors, especially when using the IMPORTXML function, is "result too large." If you get this, it is because the Google Sheets scraper can't pull data as large as the data you want to scrape. You can use a narrower XPath query to reduce the size of the data so that it can be scraped chunk by chunk. Another error type is #REF!. If you get this error, it usually means the result does not have enough room to expand; once you add or free up more cells, the result should be scraped. There are also a few functions known as volatile functions that you need to learn about.

Q. Can I Scrape Data Using Google Apps Script?

Google Apps Script is the scripting language of Google Sheets (it is based on JavaScript), and you can use it to scrape data from the Internet. It is actually one of the alternatives to using the Google Sheets scraping functions. The only problem is that you will need to learn it, since it is a programming language, and unless you plan to develop for Google Sheets or a browser extension, it might not be a language you want to invest in.

Conclusion

From the above, you can see how easy it is to pull data from the Internet using Google Sheets. Despite its downsides, its simplicity makes it stand out and will always appeal to a good number of users. Knowing how to use it, where it fits, and where you should reach for an alternative instead will help you a lot. I covered all of that in the article above.