If there is content you need to scrape from the Internet Archive Wayback Machine, stick around to discover some of the best web scrapers you can use, and how to develop your own custom Wayback Machine scraper if you have coding skills.

The Internet, or more specifically the content of web pages on the Internet, does not remain the same over time. In most cases the content gets modified; in others it is simply lost when the website goes offline or the admin deletes it.

Fortunately for us, the unavailability of a web page or even a full website is not an issue if it existed in the past. The Internet Archive Wayback Machine and similar services crawl and cache websites, so you can still access the content of any website that has been crawled and cached before. There is a caveat though: the web snapshots are historical, so they show a page as it was when it was captured, not as it is today.

Aside from web pages, the platform is a huge library of books, audio files, videos, computer software, and images, among others. This huge amount of information has given it a unique use case in the Internet marketing (IM) world: Internet marketers and researchers use it as a library of data.

However, if the data you are interested in spans hundreds or thousands of pages, extracting it manually would be time-wasting, tedious, error-prone, and overall inefficient. Instead, you use an automated method known as web scraping. In this article, we will show you how to create a custom web scraper for the Wayback Machine, or you can pick one of the ready-made scrapers recommended later in the article.

Internet Archive Wayback Machine Scraping

Internet Archive Wayback Machine scraping, or more specifically archive.org scraping, is the process of using computer bots known as web scrapers to extract content such as web pages, text, audio files, videos, books, and even full websites from the archive.org website. This is the best method to collect data from archive.org, especially if the data spans multiple pages and manual extraction would be stressful.

As long as the process is repeatable, a web scraper can replicate it in an automated manner, making it more efficient and time-saving. Web scrapers for archive.org can be quite basic and still get the job done, though some tasks need more complex scrapers with advanced features.

It might interest you to know that archive.org is not only useful for scraping historical websites. Some marketers and newbie scrapers find it difficult to scrape certain websites because of their strict anti-scraping systems. For these websites, if the content you need is not time-sensitive, you can simply scrape it from archive.org and spare yourself the struggle of scraping a website that does not want to be scraped. The good thing about the Internet Archive Wayback Machine is that it supports scraping.

Yes, the Internet Archive itself thrives on scraping websites. It is one of the most extensive scrapers in the world, and as such it does not see anything wrong with being scraped. For some scraping tasks, it even offers an API to make your work easier.
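To give a feel for that API, here is a minimal sketch that asks the Wayback Machine availability endpoint (`https://archive.org/wayback/available`) for the snapshot closest to a given date. The function names are our own and purely illustrative, and the sketch assumes the response keeps its usual `archived_snapshots` / `closest` layout. It uses only the Python standard library.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

AVAILABILITY_ENDPOINT = "https://archive.org/wayback/available"


def build_availability_url(page_url, timestamp=None):
    """Build the query URL. timestamp is optional, e.g. '20150101'."""
    params = {"url": page_url}
    if timestamp:
        params["timestamp"] = timestamp
    return AVAILABILITY_ENDPOINT + "?" + urlencode(params)


def closest_snapshot(payload):
    """Return the closest snapshot URL from a decoded response, or None."""
    closest = payload.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest["url"]
    return None


def lookup(page_url, timestamp=None):
    """Network call: ask the API for the closest snapshot of page_url."""
    query_url = build_availability_url(page_url, timestamp)
    with urlopen(query_url, timeout=30) as response:
        return closest_snapshot(json.load(response))
```

Calling `lookup("example.com", "20150101")` should return the address of an archived copy if one exists, and `None` if the page was never captured.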

How to Scrape Internet Archive Wayback Machine Using Python, Requests, and Beautifulsoup

It might interest you to know that creating a custom scraper for archive.org, whether to scrape books, images, or information from web pages, is not difficult if you have programming skills. If you are not a coder, I advise you to skip to the section on recommended web scrapers for archive.org, as this section is for those who can code. When it comes to coding a web scraper, you can use any programming language, provided it offers a library for sending HTTP requests and a library for parsing. In this guide, we will be using Python since it is easy to understand even for non-Python programmers and it provides easy-to-use libraries for scraping.

For scraping the Internet Archive there are many libraries available to you. The library you use is determined by what you intend to scrape. If your task only works when Javascript is executed, then you will need Selenium, which is a browser automation tool. However, if Javascript is not required, then Requests and Beautifulsoup will get the job done. Requests is a third-party Python library for sending HTTP requests. Beautifulsoup, on the other hand, is a high-level library that uses parsers to help you traverse and extract data from HTML pages. We will be using the duo of Beautifulsoup and Requests in this guide.
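To make the division of labour between the two libraries concrete, here is a small sketch: Requests downloads the page, and Beautifulsoup parses it. The function names are hypothetical, and pulling out the title and links is just one example of what you might extract.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url):
    """Download a page with Requests and return its HTML as text."""
    response = requests.get(url, timeout=30)
    response.raise_for_status()  # fail loudly on 4xx/5xx responses
    return response.text


def extract_title_and_links(html):
    """Parse HTML with Beautifulsoup; return the title and all link targets."""
    soup = BeautifulSoup(html, "html.parser")
    title = soup.title.get_text(strip=True) if soup.title else None
    # Only keep <a> tags that actually carry an href attribute.
    links = [a["href"] for a in soup.find_all("a", href=True)]
    return title, links
```

You would typically chain the two, for example `extract_title_and_links(fetch_html("https://web.archive.org/web/20150101000000/http://example.com/"))`, where the snapshot address is only a placeholder.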

One thing you will come to like about scraping archive.org is that you will not have to deal with some of the difficulties associated with general web scraping. As stated earlier, some newbie web scrapers would rather scrape website data from archive.org than from the website directly. That is because they would have to deal with blocks and other anti-scraping measures on the original websites, but not when scraping from archive.org. However, when your task involves collecting URLs, you need to verify them so that you do not end up scraping the wrong URL.
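One way to read the advice about verifying URLs: snapshot addresses on the Wayback Machine normally take the form `https://web.archive.org/web/<timestamp>/<original URL>`, so you can split a scraped link and check that it really belongs to the site you meant to scrape. The sketch below does that with the standard library. The helper names are hypothetical, and the pattern assumes the usual two-letter modifiers (such as `im_` or `id_`) that sometimes follow the timestamp.

```python
import re
from urllib.parse import urlparse

# Assumed layout: /web/<up to 14 digits><optional modifier like im_>/<original URL>
WAYBACK_PATTERN = re.compile(
    r"^https?://web\.archive\.org/web/(\d{1,14})([a-z]{2}_)?/(.+)$"
)


def split_wayback_url(url):
    """Return (timestamp, original_url), or None if url is not a snapshot URL."""
    match = WAYBACK_PATTERN.match(url)
    if match is None:
        return None
    timestamp, _modifier, original = match.groups()
    return timestamp, original


def is_snapshot_of(url, expected_domain):
    """Check that a snapshot URL points at expected_domain or a subdomain."""
    parts = split_wayback_url(url)
    if parts is None:
        return False
    original = parts[1]
    if "://" not in original:
        original = "http://" + original  # archived URLs may omit the scheme
    host = urlparse(original).hostname or ""
    return host == expected_domain or host.endswith("." + expected_domain)
```

Filtering scraped links through `is_snapshot_of(link, "example.com")` before following them keeps a crawl from wandering onto archived copies of unrelated sites.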


Sample Code for Scraping Wayback Machine

To show you how to scrape the Internet Archive Wayback Machine, we recommend a detailed article on scraping the Internet Archive with Python. The guide is published by Analytics Vidhya and walks you step by step through scraping the Internet Archive Wayback Machine using Python, Requests, Beautifulsoup, and other third-party Python libraries.
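If you just want a quick starting point, here is a minimal sketch of our own (not taken from the recommended guide) that lists the snapshots of a page through the Wayback Machine CDX endpoint at `https://web.archive.org/cdx/search/cdx`. The function names are hypothetical, and the code assumes the JSON output keeps its usual shape: a header row followed by one row per capture.

```python
import requests

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def rows_to_records(rows):
    """Turn CDX JSON output (header row + data rows) into a list of dicts."""
    if not rows:
        return []
    header, *data = rows
    return [dict(zip(header, row)) for row in data]


def snapshot_url(record):
    """Build the address of the archived copy described by a CDX record."""
    return f"https://web.archive.org/web/{record['timestamp']}/{record['original']}"


def list_snapshots(page_url, from_date=None, to_date=None, limit=10):
    """Network call: fetch up to `limit` captures of page_url."""
    params = {"url": page_url, "output": "json", "limit": limit}
    if from_date:
        params["from"] = from_date  # e.g. "2015" or "20150101"
    if to_date:
        params["to"] = to_date
    response = requests.get(CDX_ENDPOINT, params=params, timeout=60)
    response.raise_for_status()
    # Guard against an empty body when nothing was captured.
    rows = response.json() if response.text.strip() else []
    return rows_to_records(rows)
```

Each record returned by `list_snapshots("example.com", from_date="2015", to_date="2016")` can be passed to `snapshot_url` to get a page address, which you can then download and parse with Requests and Beautifulsoup.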


Best Wayback Machine Scrapers

You do not have to create an Internet Archive Wayback Machine scraper in order to scrape archive.org, because there are ready-made web scrapers on the market that have been developed for this. In this section of the article, we recommend some of the best web scrapers you can use to scrape archive.org. Some of them can be used by non-coders, as they do not require you to write a single line of code, while others are meant for coders.

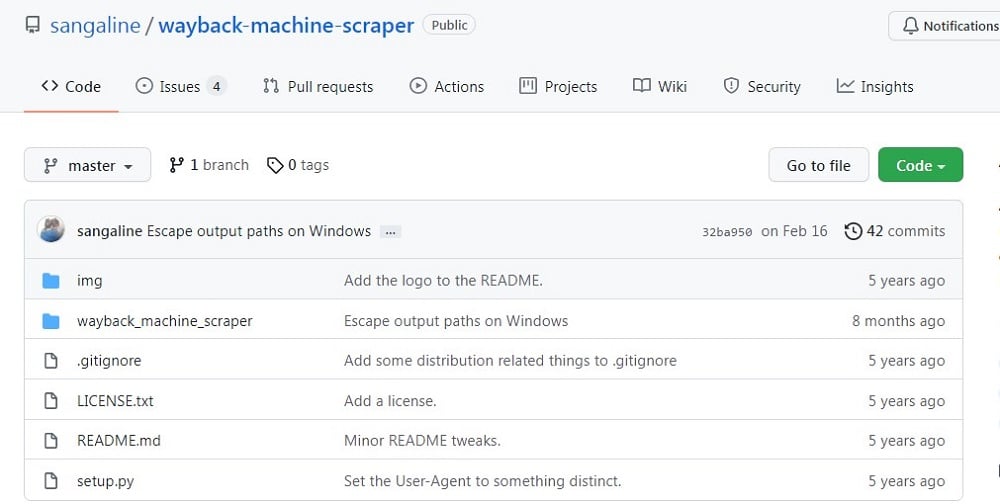

Wayback Machine Scraper by Sangaline

- Pricing: free open source software

- Free Trials: completely free to use

- Data Output Format: CSV, JSON

- Supported Platforms: CLI application

The Wayback Machine Scraper is a CLI application developed as a Scrapy middleware for scraping time-series data from the archive.org website. Being a Scrapy middleware, it is a Python-based web scraper, and as such it is best suited to coders who work in Python. This is an open-source Internet Archive scraper that you can download from GitHub.

You are not required to make any payment, even when you use it for commercial purposes. If you are looking to download a full website as it appears on archive.org, then this is the web scraper for you. One thing you will come to like about it is that it is highly configurable. You can use the pip command (pip install wayback-machine-scraper) to install it.

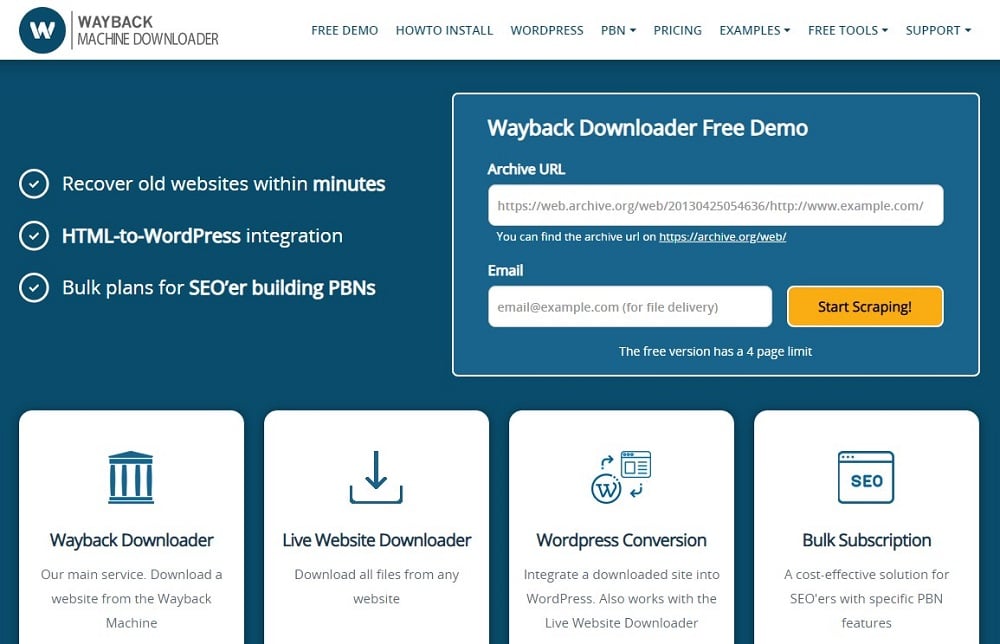

Wayback Machine Downloader

- Pricing: Starts at $15

- Free Trials: Free trial available

- Supported Platforms: Desktop

While the scraper above was developed for coders, the Wayback Machine Downloader can be used even by non-coders. This service is very specialized in its approach. While a general scraper for archive.org can scrape everything, its only task is to download copies of individual pages or of a full website, depending on what you want, for the purpose of restoring them.

It even supports restoring to WordPress if the website was originally a WordPress website. As with most services of its nature, the Wayback Machine Downloader is a paid tool, but it does offer a free trial to new users.

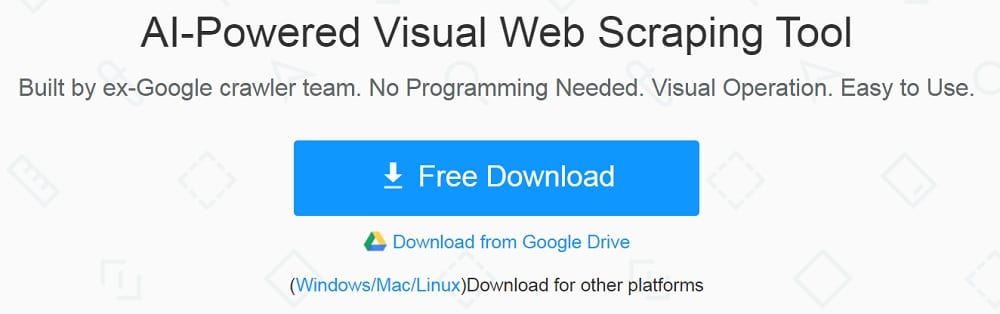

ScrapeStorm

- Pricing: Starts at $49.99 per month

- Free Trials: Starter plan is free – comes with limitations

- Data Output Format: TXT, CSV, Excel, JSON, MySQL, Google Sheets, etc.

- Supported Platforms: Desktop, Cloud

ScrapeStorm has consistently been praised as one of the best web scrapers out there. It also made it to our list of recommended web scrapers for scraping the Internet Archive Wayback Machine for web pages, documents, books, audio files, and much more. This tool does not require you to write a single line of code. All you need to know is how to point and click on the data of interest on the archive.org/web website and you are good to go. The software is a generic web scraper, so aside from the Internet Archive Wayback Machine you can use it to scrape all kinds of websites. It is one of the more advanced tools, using AI to automatically identify data of interest on a page without human interaction.

WebScraper.io Extension

- Pricing: Freemium

- Free Trials: Freemium

- Data Output Format: CSV, XLSX, and JSON

- Supported Platform: Browser extension (Chrome and Firefox)

If you are the type that likes to make use of browser extensions, then you might want to take a look at the browser extension provided by WebScraper.io. It works just like other visual web scrapers by providing you with a point-and-click interface for identifying data of interest.

One thing you need to know about this web scraper, ScrapeStorm, and Octoparse is that they are not efficient at downloading full websites. They are built for parsing specific data out of a page, which is quite useful in scenarios where a historical website holds the data you are interested in. This web scraper is free and you can get started with only a few clicks.

Octoparse

- Pricing: Starts at $75 per month

- Free Trials: 14 days of free trial with limitations

- Data Output Format: CSV, Excel, JSON, MySQL, SQLServer

- Supported Platform: Cloud, Desktop

Octoparse is another web scraper you can use if there are specific data points you are interested in on web pages available in the archive.org library. Octoparse is quite easy to use, and scraping the Internet Archive with it is even easier, since you face less hassle than on regular websites with strict anti-scraping systems that detect and block scrapers and that you would need to bypass. Octoparse comes with advanced features such as cloud server support for saving your scraping tasks, scheduled scraping, and much more. It is a paid tool, but new users can use it for 14 days for free.

Conclusion

If you take a look at the list above, you can see that there is a kind of grouping, even though it is not obvious. The clearest split is that Wayback Machine Scraper by Sangaline is for coders while the rest are for non-coders. Among the non-coder web scrapers, ScrapeStorm, WebScraper.io, and Octoparse are meant for scraping specific data from a web page on archive.org. If what you need is to download a full web page or a whole website, then Wayback Machine Downloader is the web scraper you need.
