This page provides information on scraping data from PDF documents. You will learn about some of the best PDF scrapers in the market and, if you are a coder, how to create your own.

There is no standard format that data must be available in. Data can come in any format, and it is your duty as a data scientist to convert it from whatever format it is in to one you can use for your research work.

Unlike data in databases and on web pages, which is relatively easy to extract and use, data in PDF files is not easy to collect. If the data you are interested in is in PDF format, you are probably wondering how to collect it automatically so that the process is fast and efficient.

The process of extracting data from PDF documents is known as PDF scraping. It involves using a computer bot, known as a PDF scraper, to load the full content of a PDF page and then using a parser to traverse that content and locate the data to be scraped. After the data has been collected, it is either stored or, if the scraper is a custom one, used directly in the scraper.

Some scrapers use Optical Character Recognition (OCR) technology to identify the content of a document. In this article, we will show you how to extract data from PDF documents. We will start with how to develop your own custom scraper using Python and then recommend some of the best PDF scrapers you can use.

How to Scrape PDF Files Using Python

If you are a programmer, you can develop your own PDF scraper to help you extract data from PDF documents. One thing you will come to like about this approach is that, unlike web scraping, where you have to deal with blocks, there are no blocks in PDF scraping. Does that mean there are no challenges when scraping PDF documents?

Well, there are, and one of the most important is parsing, especially if the data you are interested in is buried within the content. Let's say you want to scrape email addresses buried within a body of text: that is a problem you will need regular expressions to solve.

In essence, scraping data from a PDF takes more than the skill of copying the content of the file. You will also need text-processing skills to parse out the data you want.
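As a rough illustration of that parsing step, the sketch below uses Python's built-in `re` module to pull email addresses out of text that has already been extracted from a PDF. The pattern is a simplified assumption (it is not a full RFC 5322 validator), and `sample_text` is made-up input.

```python
import re

# Simplified email pattern (an assumption): local part, "@", then a domain with a dot.
# It will miss some unusual but valid addresses.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def find_emails(text: str) -> list[str]:
    """Return unique email addresses in the order they first appear in text."""
    # dict.fromkeys removes duplicates while preserving first-seen order
    return list(dict.fromkeys(EMAIL_PATTERN.findall(text)))


# Hypothetical text standing in for content extracted from a PDF page
sample_text = "Contact sales@example.com or support@example.org. Sales: sales@example.com"
print(find_emails(sample_text))  # ['sales@example.com', 'support@example.org']
```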

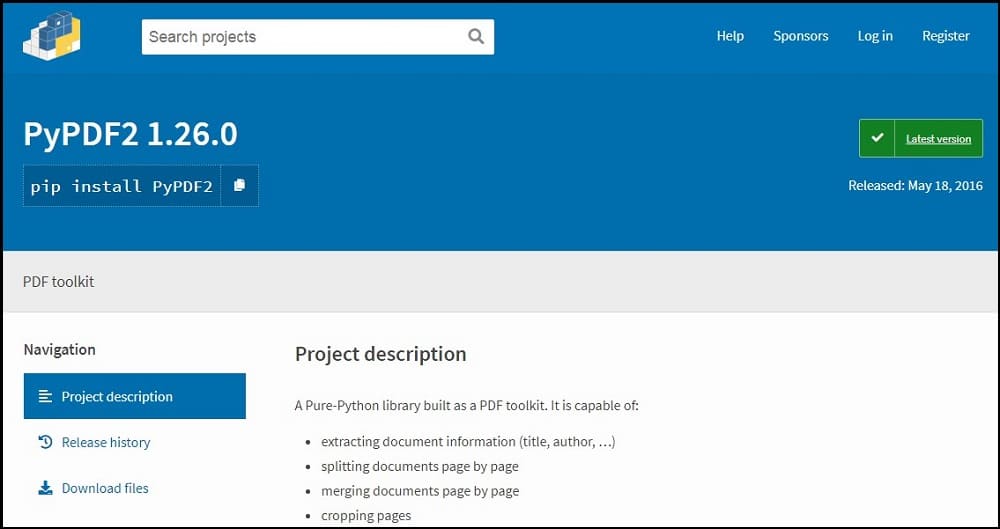

If you are a Python programmer, there are many libraries you can leverage to scrape PDF documents. If the data you want is in tabular form, the tabula-py library is the tool for the job. Otherwise, you can simply use the PyPDF2 library.
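For tabular data, a minimal tabula-py sketch might look like the one below. It assumes tabula-py is installed (it wraps the Java tabula library, so a Java runtime is also required) and that the PDF has a text layer rather than being a scanned image. The function names are our own, and the imports are placed inside the functions only so the definitions load even where tabula-py is not installed.

```python
def extract_tables(pdf_path: str) -> list:
    """Return a list of pandas DataFrames, one per table tabula-py detects."""
    import tabula  # requires `pip install tabula-py` and Java

    # pages="all" scans every page; by default tabula-py reads only the first page
    return tabula.read_pdf(pdf_path, pages="all")


def tables_to_csv(pdf_path: str, csv_path: str) -> None:
    """Write every detected table straight into a single CSV file."""
    import tabula

    tabula.convert_into(pdf_path, csv_path, output_format="csv", pages="all")
```

For example, `extract_tables("report.pdf")`, where `report.pdf` is a placeholder file name, would give you DataFrames you can clean and analyze with pandas.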

Remember, regardless of the library you use, you will need to process text with regular expressions if the data you want is buried within the text. You can use the `re` module from the Python standard library. Below is a small script that shows how you can use the PyPDF2 library to scrape the content of a PDF document.

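```python
import requests
import PyPDF2

# Download the sample PDF and save it to disk
response = requests.get("http://codex.cs.yale.edu/avi/db-book/db4/slide-dir/ch1-2.pdf", timeout=30)
response.raise_for_status()  # stop early on HTTP errors instead of saving an error page
with open("my_pdf.pdf", "wb") as my_data:
    my_data.write(response.content)

# PdfFileReader, getPage() and extractText() were removed in PyPDF2 3.x;
# PdfReader, .pages and extract_text() are their replacements
with open("my_pdf.pdf", "rb") as open_pdf_file:
    reader = PyPDF2.PdfReader(open_pdf_file)
    print(reader.pages[1].extract_text())  # pages are zero-indexed, so this is page 2
```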
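The script above prints only one page. To collect the text of every page, you can loop over the reader's pages. The helper below is a sketch that assumes a `PdfReader` object from PyPDF2 (or its successor, pypdf), or any object exposing a `pages` sequence whose items have an `extract_text()` method.

```python
from typing import Any


def extract_all_text(reader: Any) -> str:
    """Return the text of every page, with pages separated by a blank line.

    Assumes `reader` behaves like PyPDF2's (or pypdf's) PdfReader.
    """
    page_texts = []
    for page in reader.pages:
        # Scanned, image-only pages usually yield little or no text; fall back to ""
        page_texts.append(page.extract_text() or "")
    return "\n\n".join(page_texts)
```

For example, `extract_all_text(PyPDF2.PdfReader("my_pdf.pdf"))` would return the whole document as one string, ready to be searched with regular expressions.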


Best PDF Scrapers in the Market

It might interest you to know that you do not need to know how to code in order to extract data from PDF documents, thanks to computer programs developed specifically for extracting data from PDF files. There are a good number of these applications in the market, and we discuss some of the best ones below.

Amazon Textract

- Pricing: Pay as you go, charged per page processed

- Free Trials: Free tier of 1,000 pages monthly for the first 3 months

- Data Output Format: TXT, CSV, Excel, JSON, XML, TSV, etc.

- Supported Platforms: Web (cloud service accessed through the AWS console or API)

Amazon Textract is arguably one of the best ways to scrape data from PDF documents and other document formats. It automatically extracts text and handwriting from any document you provide. It can identify tables, forms, and dense text using AI, all without you writing a single line of code.

This is possible because it uses OCR technology, which can identify printed text and handwriting in documents. Amazon Textract is quite easy to use and, interestingly, it has a free tier that lets you analyze 1,000 pages monthly for 3 months.
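If you do want to call Textract from code, it is available through the AWS SDK. The sketch below assumes boto3 is installed and AWS credentials are configured. It uses the synchronous `detect_document_text` operation, which, to our knowledge, accepts images and single-page PDFs (multi-page PDFs go through the asynchronous `start_document_text_detection` flow with the file stored in S3), and keeps only the `LINE` blocks from the JSON response. The helper names are our own.

```python
from typing import Any


def textract_lines(response: dict[str, Any]) -> list[str]:
    """Return the text of every LINE block in a Textract response, in order."""
    return [
        block["Text"]
        for block in response.get("Blocks", [])
        if block.get("BlockType") == "LINE"  # skip PAGE and WORD blocks
    ]


def detect_lines(path: str) -> list[str]:
    """Send a local document to Textract and return its detected lines of text."""
    import boto3  # requires `pip install boto3` and configured AWS credentials

    client = boto3.client("textract")
    with open(path, "rb") as document:
        response = client.detect_document_text(Document={"Bytes": document.read()})
    return textract_lines(response)
```

Keeping the response parsing in its own function means you can test it against a saved JSON response without calling AWS.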

Nanonets

- Pricing: Starts at $0.0015 for a page

- Free Trials: 100 pages monthly

- Data Output Format: multiple formats supported

- Supported Platforms: Web

Nanonets is one of the best services you can use to extract data from PDF files. One thing you will come to like about this software is that it not only extracts data from PDF documents but also conveniently extracts written text from images using OCR technology.

PDF documents do not have to be structured for this tool to extract data from them, and let's face it, a large percentage of PDF documents are not structured and do not follow any standard. While some PDF readers might find it difficult to read and extract data from unstructured PDF files, Nanonets handles them easily.

Docparser

- Pricing: Starts at $39 for 100 – 500 pages monthly

- Free Trials: 30 – 150 pages monthly

- Data Output Format: CSV, Excel, and XML.

- Supported Platforms: Web

As its name suggests, Docparser is a document parser for extracting data from documents. But which file types does it support? Docparser supports scraping data from Word documents, PDF files, and even images. One thing you will come to like about this software is that it comes with templates that make data collection easier, including templates for invoices, purchase orders, and bank statements, among others.

All you need to do is upload your files, define the rules for the data you want to scrape, let Docparser run the extraction, and then download the results. For data export, you can use popular standard formats such as Excel, CSV, or XML. It also lets you connect to cloud applications such as Zapier.
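Docparser's rules are configured in its web interface, so the following is not Docparser's API. It is a hypothetical, minimal Python illustration of the same idea: each field you want is paired with a rule (here, a regular expression), and the rules are applied to text already extracted from an invoice. The field names, patterns, and sample text are all made up.

```python
from __future__ import annotations

import re

# Hypothetical extraction rules: field name -> regex with one capturing group
INVOICE_RULES = {
    "invoice_number": re.compile(r"Invoice\s*(?:No\.?|#)\s*:?\s*(\S+)", re.IGNORECASE),
    "date": re.compile(r"Date\s*:?\s*(\d{4}-\d{2}-\d{2})", re.IGNORECASE),
    "total": re.compile(r"Total\s*:?\s*\$?([\d,]+\.\d{2})", re.IGNORECASE),
}


def apply_rules(text: str, rules: dict[str, re.Pattern[str]]) -> dict[str, str | None]:
    """Apply each rule to text; fields whose rule finds nothing map to None."""
    result = {}
    for field, pattern in rules.items():
        match = pattern.search(text)
        result[field] = match.group(1) if match else None
    return result


sample = "ACME Ltd\nInvoice No: INV-1001\nDate: 2024-03-15\nTotal: $1,250.00"
print(apply_rules(sample, INVOICE_RULES))
# {'invoice_number': 'INV-1001', 'date': '2024-03-15', 'total': '1,250.00'}
```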

FineReader PDF

- Pricing: Starts at $199 for one-off payment

- Free Trials: Available

- Data Output Format: CSV, Excel, and JSON

- Supported Platforms: Windows, Mac, iOS, and Android

FineReader PDF also has a web-based version if you do not want to install any software on your computer. In terms of pricing, it can be bought with a one-off payment. According to the tool's website, it has been installed over 100 million times and currently has over 17K corporate customers.

Docsumo

- Pricing: Starts at $25 monthly

- Free Trials: Available

- Data Output Format: CSV, JSON, XML

- Supported Platforms: Web

Docsumo is one of the best PDF scrapers in the market. It provides a smarter way to process documents, including PDF files. All you need to do to scrape data from a PDF document is upload the document, define the extraction rules, and then review the extracted data. The tool also supports API integration.

With the Docsumo application, you can automate decision-making by automatically extracting data from unstructured documents. The tool comes with OCR technology, which makes it possible to extract the content of not just documents but also images. It is a better alternative to manual data entry, as it saves time, is more efficient, and is less error-prone.

Conclusion

From the above, you can see that even unstructured PDF documents can still be scraped. While some tools use conventional text-based methods to scrape data from PDF documents, many of the tools recommended above use OCR technology to get the job done.

The five tools above are just a few of the applications you can use to scrape data from PDF documents, and there are many others in the market. However, you can treat the list above as a set of tested and trusted solutions for PDF data extraction.